November 14, 2016 Opinion No comments

Single Git Repository for Microservices

I recently joined a team that writes an intranet web application. There is nothing too special about it, except that it was designed to be implemented as microservices, while at the moment it is de facto a classical single .NET MVC application. This happened for a simple reason: meeting the first release deadline.

The design was reflected in how source control was set up: one Git repository per service. Unfortunately, this required a number of maneuvers to stay in sync and to push changes, as the team was making scattered changes across multiple repositories. It also made it more difficult to consolidate NuGet packages and other dependencies, as they all lived in different repositories.

I think that microservices, and the corresponding hard reflection of their boundaries in the form of source code repositories, should evolve naturally. Starting with a single repository sounds more reasonable. If you keep the idea of microservices in your head and nicely decouple your code, nothing stops you from creating new repositories as your service boundaries start to take shape.

Taking this into account, we merged the repositories into one. The only question was how to keep the source code history. It turns out the history can be easily preserved by using the git subtree command (something along the lines of git subtree add --prefix=<directory> <repository> <branch> for each service), which places each of the service repositories in a subdirectory of the new single repository.

As a result, the team is working much more effectively, as we do not waste time on routine syncing and checking who did what where.

Conclusion: Theoretically, microservices should be implemented in their own repositories. That’s true, but in practice, for a relatively small and new project with only one team working on it, a single repository wins.

Running Challenge Completed. Health, Motivation, and Other Aspects Of Running

October 2, 2016 Opinion No comments

Are you a programmer bent by scoliosis? Do you spend too much time sitting? Maybe you look a bit flabby? Super tired at and after work? Chances are high that some of these are true for you. Some certainly are true for me. This year I tried to improve my situation by running. Here are my thoughts and humble recommendations.

Running and its impact on your Health

“Being physically active reduces the risk of heart disease, cancer and other diseases, potentially extending longevity.” Many studies show this, according to this article. Running is probably one of the most accessible forms of exercise. All you need to start is a pair of shoes. This research paper is a good resource for learning about the impact of running and other exercise on chronic diseases and general mortality.

So why running? Because it is easy to start with and because we are made for it. “Humans can outrun nearly every other animal on the planet over long distances,” says this article. Funnily enough, there is a yearly Human vs. Horse marathon competition.

If you ask yourself what you want your last two decades before death to look like, most likely you would picture a healthy, mobile, and socially active person. You would also prefer those two decades to be your 80s and 90s, right? Light running for as little as 1 hour a week could add as much as 6 years to your life. This long-term study showed that “the age-adjusted increase in survival with jogging was 6.2 years in men and 5.6 years in women.” (For those who are pedantic and want to know the net win, even at 2 hours a week: (2 hrs/wk * 52 wks a year * 50 years) / 16 waking hours a day = 325 days lost to running, which still gives you a net gain of about 5 years.)

Motivation

At my age I do not think about death that much. My main motivation for running is improving my health. I know that for many people extra weight is the motivating factor. For me it is not: instead of losing weight I gained some 2-3 kg. I’m likely so skinny that there is no fat to lose, though there is room for leg muscle growth. Unfortunately, running is often boring, and it is very hard to get yourself outside for a run on that nasty cold day. Here are a few things that helped me keep running this year:

Run different routes

Always running in the same location along the same path is boring. If you travel somewhere, just take your shoes with you and go for a run in the new place. Not only do you get another run in, you also explore the location. I ran in five different countries this year and can tell you that those runs were more interesting than the usual ones next to home.

Join friends or running club

I was going for runs rather rarely at the beginning of the year, but once I started running with friends I began to run more frequently. It is always much more pleasant to have a conversation and learn a few new things from friends, especially if your interests overlap in more than just running.

Sign up for a challenge

Sports are competitive by nature. You can have a friendly competition with your fellow runners, or you can take a virtual challenge. That works great because the clock is ticking and you want to get it done. This September I took it to the next level by signing up for Strava monthly challenges. I completed all of them. See Trophy Case below:

Be careful and avoid injury

You don’t want to walk with a cane when you are old because you were too stupid and ran too much and too hard when you were young. I’m sick of running because of this September challenge. I completed it, but I ran the last two runs injured and in pain. I’m recovering now using the RICE recovery technique. From now on I will take it easier, and I suggest you do the same.

You can do it

I’m not a good runner. At the beginning of the year I could barely run 5 km, and I didn’t know how I would complete my planned 40 runs, as it was so hard. Running 40 times was my main goal for the year. I did not expect that I would run around 50 times, totaling 320 km. And it is not the end of the year yet. I also ran a half-marathon distance atop Kahlenberg hill next to Vienna. If I can do it, you can!

Conclusion

I completely agree with the research and studies that “for the majority of people the benefits of running outweigh the risks”, and at the same time I voluntarily ran through an injury just to complete my challenge. Motivation is an important factor, but runners have to be careful and moderate their exercise. This is especially true if you run for health reasons. Just try to make your runs more interesting and enjoy your life… longer.

Working with Excel files using EPPlus and XLSX.JS

Need to quickly generate an Excel file on the server (.NET) with no headache, or to import an Excel file in your JS client?

I can recommend two libraries for their simplicity and ease of use.

XLSX.JS

To smooth the transition from Excel files to electronic handling of data, we offered our users the possibility of importing data. As our application is web based, this meant we needed a JS library to work with Excel files. A complication was that over time our users had developed a habit of making all kinds of modifications to their “custom” Excel files. So we preferred something that would allow us to easily work with different formats.

The XLSX.JS library, available on GitHub, proved to be a good choice. I can only imagine how much better it is than some monsters that would only work in IE. I think the getting-started documentation is fairly good, so I will just go through some bits and pieces from our use case.

Setting up XLSX.JS and reading files is straightforward: install it via npm or bower, include the file, and you are ready to write XLSX.readFile('test.xlsx') or App.XLSX.read(excelBinaryContents, {type: 'binary'}).

Reading as binary is probably a better bet as it will work in IE, though you will have to write some code to implement FileReader.prototype.readAsBinaryString() there. You can have a look at our implementation of a file-select component on gist.
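One way to fill that gap, sketched here under the assumption that the browser does support FileReader.readAsArrayBuffer(): convert the resulting ArrayBuffer into the binary string that XLSX.read(data, {type: 'binary'}) expects. The helper name arrayBufferToBinaryString is made up for illustration and is not part of XLSX.JS, and this is not necessarily how our gist does it.

```javascript
// Hypothetical helper (not part of XLSX.JS): turns an ArrayBuffer into a
// "binary string" in which each character code is one byte of the input.
function arrayBufferToBinaryString(buffer) {
  var bytes = new Uint8Array(buffer);
  var result = '';
  for (var i = 0; i < bytes.length; i += 1) {
    result += String.fromCharCode(bytes[i]);
  }
  return result;
}

// Browser-only usage sketch (assumes `file` comes from an <input type="file">):
// var reader = new FileReader();
// reader.onload = function (e) {
//   var data = arrayBufferToBinaryString(e.target.result);
//   var workbook = XLSX.read(data, {type: 'binary'});
// };
// reader.readAsArrayBuffer(file);
```

The byte-by-byte loop is slow for very large files, but it avoids any limits on argument counts and is easy to reason about.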

Using XLSX in your JavaScript is fairly easy, though there might be some hiccups with parsing dates. See this gist.
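To illustrate the kind of date hiccup meant here: depending on the parsing options, a date cell can arrive as an Excel serial number rather than a JavaScript Date. Below is a minimal sketch of converting one by hand, assuming the workbook uses the default 1900 date system and that the date should be read as UTC. excelSerialToDate is a hypothetical helper name, not an XLSX.JS function, and the gist may handle this differently.

```javascript
// Serial number that Excel's 1900 date system assigns to 1970-01-01.
var EXCEL_SERIAL_OF_UNIX_EPOCH = 25569;
var MS_PER_DAY = 24 * 60 * 60 * 1000;

// Hypothetical helper: converts an Excel serial date (days, with the time of
// day as the fractional part) into a JavaScript Date interpreted as UTC.
// Only meant for serials from March 1900 onwards.
function excelSerialToDate(serial) {
  var msSinceUnixEpoch = (serial - EXCEL_SERIAL_OF_UNIX_EPOCH) * MS_PER_DAY;
  return new Date(Math.round(msSinceUnixEpoch));
}

// 42688 is the serial for 14 November 2016; .5 adds half a day (noon).
var parsed = excelSerialToDate(42688.5);
```

Whether UTC is the right interpretation depends on how your users entered the dates, so treat the time zone handling as a decision to make rather than a given.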

EPPlus

We also have two use cases where we need to generate an Excel file on the server. One was to generate documentation for business rules so that we can keep it up to date and share it with our users at all times. It was implemented as part of CI, which saves the file to a file system. The other use case was downloading business-related data via the web interface. Both were super easy to do with an open source library called EPPlus.

You just add EPPlus through NuGet and start with using (var excelPackage = new ExcelPackage(newFileInfo)). See the gist below. The first file demonstrates how to operate on cells, and the other one shows how you can use streams to make the file downloadable.

These two libraries really helped me to efficiently implement some of the Excel file business use cases.

Next time I have to generate an Excel file on the server or read one on the client, I will most certainly use these two again.

Beauty of Open Source in Practice

December 20, 2015 Opinion 2 comments

We found a bug in Internet Explorer. It was acknowledged as such after two months of exhausting e-mail communication, and no fix was promised.

We found a bug in an open source library. It got fixed over a weekend after we raised an issue.

This summarises everything I wanted to share in this post. I have more, so continue reading.

As a disclaimer, I want to say that I don’t attempt to have a comprehensive look at open source versus closed source. This is just an example of what happened in my project.

Closed source code case with Internet Explorer

We use Microsoft technologies wherever possible, unless there is no sensible solution to the problem we need to solve. The application we implemented is a large, offline-capable single page application with tons of controls rendered at once. We noticed that IE crashed after prolonged usage of the app, though we were not experiencing the same in other browsers. It took us a while to realise that there was a legitimate memory leak in IE. More details on how we tried to troubleshoot the issue are here. Afterwards we started a long and boring communication with Microsoft, which ended in them acknowledging a bug in IE. Actually, it was a known bug. They said that attempts to fix it had caused them more trouble, so it is unlikely that a fix will appear in any IE11 update or in the Edge browser. We got approval for our users to use Chrome, as it doesn’t have this memory issue and in general is much faster.

Open source code case with Jurassic

The app has plenty of shared logic that we want to execute on both client and server. We decided to write it in JavaScript. As our backend is .NET, we used the Jurassic library to compile JavaScript code on the server and then execute it whenever we needed it. We also tried Edge.js, but at the moment we are not happy with its stability when run under IIS.

We stumbled upon an interesting bug. IL code emitted by the Jurassic library was causing System.InvalidProgramException in some environments. We narrowed it down to a continue statement used in a for loop. We noticed that this was only used in the moment.js library, so we modified the code of moment.js to avoid using continue statements. This fixed the issue, so we were already covered by open source, since we could modify it. Of course, we didn’t stop there and posted a bug on Jurassic’s forum. The maintainer had a look over the weekend and fixed the issue for us.

Conclusion

Of course, this is just one example where using open source proved to be a nice way to go. It doesn’t always work like that and at times it is a wrong choice. I mainly wanted to share this as it was such a striking and contrasting difference for me personally.

Backup and Restore. Story and thoughts

April 7, 2014 HowTo, Opinion No comments

Usually when I have some adventures with backing up and restoring, I don’t write a blog post about it. But this time was a bit special. I unintentionally repartitioned the external drive where I kept all of my system backups and large media files. This made me rethink my backup/restore strategy.

The story

I will begin with the story of my adventures. Currently I’m running Windows 8.1. The other evening I decided I wanted to play an old game from my school days. Since I couldn’t find it, I decided to play a newer version, still relatively old, released in 2006. As usual with old games and current OSs, it wouldn’t start. As is normal for a programmer, I didn’t give up. First of all there was something with DirectX. The game was complaining that I needed a newer version, which of course I had, but it was way too new for the game to understand. After I fixed that, the game still wouldn’t start because of other problems. I changed a few files in system32. It still didn’t help. Then I decided on another approach: installing WinXP on a virtual machine and running the game there. I did it with VirtualBox, and it didn’t work because of some other issues. Then I found a Win7 virtual machine I had used before with VMware, but that VM didn’t want to start.

At this point I decided to give up on that game. To compensate, I started looking for a small game I had played at university. Unfortunately, that game also didn’t want to start and froze my PC. After reboot… ah… actually there was no reboot, since my Windows made up its mind not to boot any longer!

Now I had to restore. Thankfully, my Dell laptop had a recovery boot partition and I was able to quickly restore to a previous point in time. Not sure why Windows didn’t boot, given that recovery was no pain at all.

After that I decided that I needed additional restoring power. So I ran a program by Dell called “Backup and Recovery” to create yet another backup of the system. The program asked me for a drive, and I picked a free one on my external HDD where I keep system images. Unfortunately, I didn’t pay attention to what that special backup might do. It created a bootable partition and of course repartitioned the entire drive. I pulled the USB cable when I realized what it had started to do!

I had to recover again, but this time files on the repartitioned drive. If you look online, there are some good programs that allow you to restore deleted files and even find lost partitions. One of them is EaseUS, but it costs money and I didn’t want to pay for one-time use. So I found a free one called “Find And Mount” that can find a lost partition and mount it as another drive, so that you can copy the files over. That’s good, but for some reason the speed of recovery was only 512Kbit/s, so you can imagine how much time it would take to recover 2TB of stuff. I proceeded with restoring only the most important stuff. In total, restoring took maybe 30+ hours.

A bit more on this story. Since I needed to restore so much stuff, I didn’t have space for it. My laptop has only a 256 GB SSD, and my wife’s laptop (formerly mine) also has only that much. But I had a 512 GB HDD lying around. So I bought an external HDD case for some 13 EUR and thus got some additional space.

So that was the end of the story. Now I want to document what I do, and what I want to start doing in addition, to be on the safe side.

Backup strategy

What are the most important files not to lose? These are photos and other things that are strongly personal. I’m pretty sure that if you have some work projects, they are already under source control or handled by other parties and responsible people. So work stuff is therefore less critical.

My idea is that your most important things should be spread around as much as possible. This is true for the photos I have. They are on every hard drive I have ever had: at least 5 in Ukraine and 4 here in Austria. Older photos are also on multiple DVDs and CDs. Some photos are in Picasa: I’m still on an old offer from Google, 20 GB for just $4 per year. All phone photos are automatically uploaded to OneDrive, where I have 8 GB. I also used to have 100 GB on Dropbox, but I found it too expensive and stopped keeping photos there.

All my personal projects and things I created on my own are treated almost the same as photos, only they are not so public and are often encrypted.

So roughly backup strategy:

- Photos and personal – as many copies as possible and at as many physical places as possible, including cloud storages

- System and all other – System Images and bootable drive, including usage of “File History” feature in Windows 8.1

I started to think about whether I want to buy a NAS and some more cloud storage. For now I will see whether I get myself into trouble again and what it costs if that happens.

In the image below, Disk 0 is my laptop’s disk. Disk 1 is where I now have complete images of the 2 laptops at home, a complete copy of Disk 2, and also media junk. Disk 2 is another drive with Windows installed, which will now be used regularly for backups and for “File History”.

Now some links and how-to info:

- Find and Mount application for restoring partitions

- EaseUS application with lots of recovery options, but costs money

- Enabling “File History” and creating a System Image in Win8.1 can be done from here: Control Panel\System and Security\File History

- External USB 3.0 HDD 2.5’’ Case I bought

- Total Commander has features to find duplicate files, synchronize directories, and many others that come in handy when handling the mess after spreading too many copies around

This was just a story to share with you but it emphasises one more time that backups are important.

P.S. I finally managed to play newer version of 2nd game :)

Not attending NDC2014 in Oslo

March 24, 2014 Conferences, Opinion No comments

What a silly title for the post. What if everyone wrote about what they are NOT doing?

Well, I write this blog post partially to convince myself that the decision not to attend NDC2014 is right, and partially to share my thoughts on such aspects of attending conferences as price and return on investment.

I always wanted to visit a big developer conference. One of the conferences that I definitely like is NDC (either in Oslo or London). I think it has the best mix of technologies that match my interests and at the same time has big names in it.

I have wanted to go there four times. The first time I simply asked my company in Ukraine, but it would have been too expensive for them, and I don’t know if they ever sent people to such conferences. The second time I asked my company here in Austria, but I was too new an employee to be sent anywhere; I hadn’t had a chance to prove that I was worth it. The third time I just couldn’t go because my daughter was about to be born. And this summer I’m not going to allow myself to attend it on my own.

The reason is simple: price. I’ve calculated that it would cost me around 2600 Euro (~$3600) to attend. This includes conference tickets, flights, hotel, food, and 3 working days. The organizers wouldn’t give me a discount.

Being self-employed makes you really consider such things as conferences from a manager’s or company’s perspective. You start to think about return on investment and how to justify conference attendance. The real question is whether the money spent on a conference will benefit you correspondingly.

NDC videos are available online shortly after the conference. It is no secret that what people get from conferences is not the content of the presentations but the possibility to establish relations with gurus who are at the top of the industry. I have heard this so many times. One of my old friends said that a conference really starts when the presentations are over and people get together at lunch, at dinner, or at a party.

Taking this into account, it is very hard for me to believe that I could establish good connections at any conference. I’m usually shy, especially when it comes to social events and new connections. I don’t think that I’m the only one who has this problem. Probably most developers have similar issues to some extent. I found some articles on how you can attend conferences as yourself. But the point I’m trying to make is that usually it is the company that pays for its employees, therefore developers are not that concerned about the price and of course are happy to attend. I would also be happy if someone paid for me. Some say that conferences are often just a reward to the best developers for being loyal to their companies.

I concluded for myself that from a learning perspective I would not gain much from this conference, and from a networking perspective it isn’t worth so much money. Of course, there is a tiny chance that I’m overlooking some big opportunity.

I believe that if I spend just half of that money on small conferences and tools/learning, I will get more in return.

This beginner’s guide to attending conferences is quite useful. I think I will use some of the provided hints when I attend smaller conferences.

One last thing: probably the ultimate goal for any attendee of any conference is to grow to the level where you are invited as one of the key speakers.

Micro Agile for Managing Your Work. Pomodoro New Way

January 22, 2014 Agile, Opinion 3 comments

Pomodoro

Micro Agile

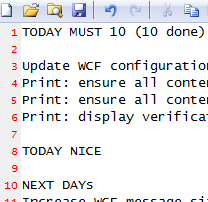

Here is what the top of my working task list looked like at the end of a day:

I’ve got a nice count of 10 commit items waiting to be pushed to the server.

![clip_image001[4] clip_image001[4]](http://andriybuday.com/wp-content/uploads/2014/01/clip_image001-25255B4-25255D_thumb.png)

Time bought and fulfilment of work being done

Have you noticed number 10? Why 10? And what should it mean?

10 a day is my limit at the moment!

What if something new pops up?

There is one special rule: you can always interrupt to add a task to your list. Inevitably, when you work on a task some other items pop up. If you address everything at that moment, you won’t be able to complete your main pomodoro. Instead, what you can always do is switch screens, type in the task, and switch back to your work.

What if new big stuff comes up on your way?

How many personal pomodoros there should be?

How to stick to this technique?

To summarize

- Break your tasks into 25-minute activities

- If it is a big task, break it down only for the day

- Reorganize your tasks accordingly (best done in the morning)

- At any point in time you can add items to your list

- Never get distracted by anything else

- Completion is your main goal; to make it certain, set some “done” barrier, like having a code commit at the end

- Buy time by limiting the number of pomodoros you have for the day

- Squeeze your personal pomodoros or leave them for the end of the day

- Always complete a minimum number of pomodoros per day

- Force yourself to use this technique when you are distracted from work

Application Fabric Cache – an easy, but solid start

I would like to share some experience of working with Microsoft AppFabric Cache for Windows Server.

AppFabricCache is a distributed cache solution from Microsoft. It has a very simple API, and it would take you 10-20 minutes to start playing with it. Having worked with it for about one month, I would say that the product itself is very good, but probably not mature enough. There are a couple of common problems, and I would like to share those. But first, let’s get started!

If the distributed cache concept is new to you, don’t be scared. It is quite simple. For example, you’ve got some data in your database, and you have a frequently accessed web service on top of it. Under high load you would decide to run many web instances of your project to scale out. But at some point the database will become your bottleneck, so as a solution you will add a caching mechanism on the back end of those services. You would want the same cached objects to be available on each of the web instances, so you might want to copy them to different servers for low latency and high availability. That’s it: you have come up with a distributed cache solution. Instead of writing your own, you can leverage one of the existing ones.
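The read path described above is often called cache-aside. Here is a minimal sketch of it, written in JavaScript with an in-memory Map standing in for the distributed cache. getOrLoad and loadFromDatabase are hypothetical names for illustration only and have nothing to do with the AppFabric API shown later.

```javascript
// Cache-aside: look in the cache first, fall back to the slow source on a
// miss, then remember the result for the next caller.
function getOrLoad(cache, key, loadFromDatabase) {
  if (cache.has(key)) {
    return cache.get(key);
  }
  var value = loadFromDatabase(key);
  cache.set(key, value);
  return value;
}

// Stand-ins for illustration: a Map instead of a cache cluster and a
// counter instead of a real database.
var cache = new Map();
var databaseHits = 0;
function loadFromDatabase(id) {
  databaseHits += 1;
  return { id: id, title: 'Post ' + id };
}

getOrLoad(cache, 42, loadFromDatabase); // miss: goes to the "database"
getOrLoad(cache, 42, loadFromDatabase); // hit: served from the cache
```

In a real distributed cache the Map would be replaced by a client talking to the cache cluster, which is exactly where the failure handling discussed further down comes in.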

Install

You can easily download AppFabricCache from here, or install it with the Web Platform Installer.

The installation process is straightforward. If you are installing it just to try it out, I wouldn’t even go for the SQL Server provider, but would rather use the XML provider and choose some local shared folder for it. (The provider is the underlying persistent storage, as far as I understand it.)

After installation you should get an additional PowerShell console called “Caching Administration Windows PowerShell”.

You can then start your cache using the “Start-CacheCluster” command.

Alternatively, you can install the AppFabric Caching Admin Tool from CodePlex, which lets you easily do a lot of things through the UI. It shows the PowerShell output, so you can learn the commands from there as well.

Usually you would want to create a named cache. I created NamedCacheForBlog, as can be seen above.

Simple Application

Let’s now create a simple application. You would need to add a couple of references (Microsoft.ApplicationServer.Caching.Client and Microsoft.ApplicationServer.Caching.Core).

Add some configuration to your app/web.config:

    <section name="dataCacheClient"
             type="Microsoft.ApplicationServer.Caching.DataCacheClientSection, Microsoft.ApplicationServer.Caching.Core"
             allowLocation="true"
             allowDefinition="Everywhere"/>

    <!-- and then somewhere in configuration... -->
    <dataCacheClient requestTimeout="5000" channelOpenTimeout="10000" maxConnectionsToServer="20">
      <localCache isEnabled="true" sync="TimeoutBased" ttlValue="300" objectCount="10000"/>
      <hosts>
        <!--Local app fabric cache-->
        <host name="localhost" cachePort="22233"/>
        <!-- In real world it could be something like this:
        <host name="service1" cachePort="22233"/>
        <host name="service2" cachePort="22233"/>
        <host name="service3" cachePort="22233"/>
        -->
      </hosts>
      <transportProperties connectionBufferSize="131072"
                           maxBufferPoolSize="268435456"
                           maxBufferSize="134217728"
                           maxOutputDelay="2"
                           channelInitializationTimeout="60000"
                           receiveTimeout="600000"/>
    </dataCacheClient>

Note that the above configuration is not the minimal one, but rather a more realistic and sensible one. If you are about to use AppFabric Cache in production, I definitely recommend reading this MSDN page carefully.

Now you need to get a DataCache object and use it. A minimalistic, but wrong, way of doing it would be:

    public DataCache GetDataCacheMinimalistic()
    {
        var factory = new DataCacheFactory();
        return factory.GetCache("NamedCacheForBlog");
    }

The above code reads the configuration from the config file and returns a DataCache object.

Using DataCache is extremely easy:

    object blogPostGoesToCache;
    string blogPostId;
    dataCache.Add(blogPostId, blogPostGoesToCache);

    var blogPostFromCache = dataCache.Get(blogPostId);

    object updatedBlogPost;
    dataCache.Put(blogPostId, updatedBlogPost);

DataCache Wrapper/Utility

In the real world you would probably write some wrapper over DataCache or create some utility class. There are a couple of reasons for this. First of all, creating a DataCacheFactory instance is very expensive, so it is better to keep a single one. Another obvious reason is that you get much more flexibility over what you can do in case of failures and in general. And this is very important: it turns out that AppFabricCache is not extremely stable and can be easily impacted. One of the workarounds is to write a retry mechanism, so that if your wrapping method fails you retry (immediately or after X ms).

Here is how I would write initialization code:

    private DataCacheFactory _dataCacheFactory;
    private DataCache _dataCache;

    private DataCache DataCache
    {
        get
        {
            if (_dataCache == null)
            {
                InitDataCache();
            }
            return _dataCache;
        }
        set { _dataCache = value; }
    }

    private bool InitDataCache()
    {
        try
        {
            // We try to avoid creating many DataCacheFactory-ies
            if (_dataCacheFactory == null)
            {
                // Disable tracing to avoid informational/verbose messages
                DataCacheClientLogManager.ChangeLogLevel(TraceLevel.Off);
                // Use configuration from the application configuration file
                _dataCacheFactory = new DataCacheFactory();
            }
            DataCache = _dataCacheFactory.GetCache("NamedCacheForBlog");
            return true;
        }
        catch (DataCacheException)
        {
            _dataCache = null;
            throw;
        }
    }

DataCache property is not exposed, instead it is used in wrapping methods:

    public void Put(string key, object value, TimeSpan ttl)
    {
        try
        {
            DataCache.Put(key, value, ttl);
        }
        catch (DataCacheException ex)
        {
            ReTryDataCacheOperation(() => DataCache.Put(key, value, ttl), ex);
        }
    }

ReTryDataCacheOperation performs retry logic I mentioned before:

    private object ReTryDataCacheOperation(Func<object> dataCacheOperation, DataCacheException prevException)
    {
        try
        {
            // We add retry, as it may happen,
            // that AppFabric cache is temporary unavailable:
            // See: http://msdn.microsoft.com/en-us/library/ff637716.aspx
            // Maybe adding more checks like: prevException.ErrorCode == DataCacheErrorCode.RetryLater

            // This ensures that once we access prop DataCache, new client will be generated
            _dataCache = null;
            Thread.Sleep(100);
            var result = dataCacheOperation.Invoke();
            //We can add some logging here, notifying that retry succeeded
            return result;
        }
        catch (DataCacheException)
        {
            _dataCache = null;
            throw;
        }
    }

You can go further and improve retry logic to allow for many retries and different intervals between retries and then put all that stuff into configuration.
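Here is a rough sketch of what such a configurable retry could look like. It is written in JavaScript with promises rather than C#, purely to show the shape of the idea, not as a drop-in for the wrapper above. retryWithDelays, its parameters, and the cacheClient in the usage comment are all hypothetical names; the list of delays plays the role of the configuration.

```javascript
// Hypothetical helper: runs `operation` (a function returning a value or a
// promise) and, on failure, retries once after each delay in `delaysMs`.
// The optional `shouldRetry(error)` decides whether an error is worth
// retrying at all, for example only errors that mean "retry later".
function retryWithDelays(operation, delaysMs, shouldRetry) {
  var attempt = 0;

  function run() {
    return Promise.resolve().then(operation).catch(function (error) {
      var retriesLeft = attempt < delaysMs.length;
      var retryable = !shouldRetry || shouldRetry(error);
      if (!retriesLeft || !retryable) {
        throw error; // give up and surface the last error to the caller
      }
      var delay = delaysMs[attempt];
      attempt += 1;
      return new Promise(function (resolve) {
        setTimeout(resolve, delay);
      }).then(run);
    });
  }

  return run();
}

// Usage sketch (cacheClient is a stand-in, not a real library):
// retryWithDelays(function () {
//   return cacheClient.put(key, value);
// }, [100, 500, 2000]);
```

Passing the delays as data means the number of retries and the intervals between them can be loaded from configuration without touching the retry code itself.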

RetryLater

So, why the hell is all this retry logic needed?

Well, when you open the MSDN page for AppFabric Common Exceptions, you can be sure RetryLater is the most common one. To know what exactly happened, you need to check the exception’s ErrorCode and SubStatus.

So far I’ve seen these sub-errors of RetryLater:

There was a contention on the store. – This is quite a frequent one. It can happen when someone is making a concurrent mess with the cache. The problem is that any client can affect the whole cluster.

The connection was terminated, possibly due to server or network problems or serialized Object size is greater than MaxBufferSize on server. Result of the request is unknown. – This usually has nothing to do with object size. Even if the configuration is correct and you save small objects, you can still get this error. A retry mechanism is good for this one.

One or more specified cache servers are unavailable, which could be caused by busy network or servers. – I have no idea how frequent this one is, but it can happen.

No specific SubStatus. – Amazing one!

Conclusion

AppFabricCache is a very nice distributed cache solution from Microsoft. It has a lot of features, which are not described here, as you can read about them elsewhere, say here. But to be able to go live with it, you should be ready for AppFabricCache not being extremely stable and reliable, so you had better put some retry mechanisms in place.

To be honest, if I were the one deciding whether to use this distributed cache, I would go for another one. But who knows, maybe others are not much better… I’ve never tried other distributed caches.

Thank you, and hope this is of some help.

Існує die Frage of Language. Или нет?

April 23, 2012 Languages, Opinion 6 comments

Title of the post is complete bizarre(*). It consists of words out of 4 languages I have to deal with now.

Before I moved to Austria I mostly used Ukrainian. Of course, many meetings at work were in English and all mailing was held in English. Not to mention, there was some interaction with Russian, but not much. At least there were no real need to speak it. Now everything has shifted. I knew that I will have to deal with English everyday, I also knew that I will need some basic German. What I did not know is that there will be many guys from Ukraine and Russia at work and I will use Ukrainian and also Russian for small talks in kitchen or at lunch or for one-to-one discussions.

I continue to speak Ukrainian at home with my wife. We try to use English/German phrases. My wife is not good in English, instead her German is at intermediate level, so we try to exchange some knowledge in languages. But you know what? Unless someone or something kicks you in the ass, you won’t take learning of language seriously.

So, I paid 290 euro to have someone kicking me each day for 2 hours during whole month. Normally it is called language course. After one week I can introduce myself and provide brief information about myself, I can count and ask basic questions, I already know some colors, week days, months, restaurant words, etc.

It worth to mention, that you really need some pressure to start learning German in Austria, because all people around speak English very good and if you are lazy you can simply avoid conversations in German. Plus everything here could be done via internet or though automatic devices, so not much human interaction during the day.

I’m afraid for the foreigners coming to Ukraine for EURO 2012. On average, people in Ukraine don’t speak English. It is a pity and a shame for me.

Now back to the German language courses. As I mentioned, I’m attending intensive evening courses for total beginners. I allocate myself 1 hour before class to do my homework, so in total it is 3 hours of German per day. My group is rather small, only 4 people: me, a girl from Ukraine, a lady from Kazakhstan and a girl from Iran. If explanations are needed they are given in German and, if not understood, in Russian or English (only the girl from Iran doesn’t understand Russian). Another very interesting thing is that, as the school concentrates on Russian/German, the teacher is not extremely good at English. Thus I often help to explain things to the girl from Iran, who is proficient in English. For me it is great: I hear explanations twice, in English and in Russian.

To your surprise, there are many words which sound similar to English ones, and some are similar to Russian and Ukrainian words (or probably the other way around). Germany and Austria are geographically located between Great Britain and Russia/Ukraine, so this can be understood without reading a dozen wiki pages on language families, branches and their roots. Again, good for me.

Nevertheless, I have this question: “Is German an important language anyway?” According to wiki there are about 100 million German speakers in the world, which is 12th place by number of speakers, but apparently it is number one in Europe, where I live now. It is a highly developed language, and it is also a language of technology (after English, of course). All this sounds great, and everyone would answer that German is an important language for Europe, especially if you already know English. So would I. In the short term it makes sense to learn German. But over the centuries the world will shift dramatically to English, if not Chinese.

This all makes me think about the importance of languages, their meaning for me and their value for the world. Imagine there were no other languages but just one, no matter which: how much easier would the world be? Most importantly, how much further would we have developed? Would we already have started to colonize Mars? Or would it have the opposite effect? According to Darwin there has to be some variation, otherwise no evolution can progress. All these are very philosophical questions, suitable for a beer evening, or… for a Friday schnapps evening.

To conclude, I’m very proud to realize that I will understand almost 1 billion people in the world after I learn German (precisely, 902 million as per wiki).

I have some questions for you:

- What languages do you know?
- Are you learning any?
- Do you think English is the number one language and there is no sense in learning and developing other languages?
- Would you learn German if you were me?
- Do you think it is possible for non-native speakers of English to get high up in the IT/software industry?

Thank you!

P.S. I hope this was a good read. If not, please let me know. I’m willing to improve my blogging skills to write posts of higher quality. All for you.

(*) In English it would be “There is a question of Language. Or not?”.

100% Code Coverage – real and good!

April 18, 2012 Opinion, UnitTesting No comments

I’m not going to write a long post discussing the advantages and disadvantages of high code coverage. There are hundreds if not thousands of such posts out there, and in the end almost all of them conclude that high code coverage is nice in general but not always justifiable, one of the main reasons being the redundant abstractions introduced in favor of higher coverage. Here are my recent thoughts.

Achieve 100% Code Coverage by all means

It may sound crazy and not doable at all(*), or it may have side effects if misused. I suggest very simple techniques to achieve high code coverage the right way:

Don’t be lazy. Recently I worked on a project where I already had 95% coverage. If I hadn’t decided to increase coverage further, I wouldn’t have found one missing mapping for a property. I spent maybe a couple of hours writing more tests, instead of days of devs’/testers’/managers’ time working around the bug. And had this been found in production, it would have cost the company real money.

Work around external dependencies you really cannot test. Isolate them as much as you can and simply exclude them from the coverage report. I don’t think this is cheating. It is the best you can do, plus you do it explicitly. And, of course, you should have integration tests to test the external dependencies.

Remember the Single Responsibility. Well… and a few more things. You will be amazed how much simpler and easier to read your code is if you just keep following SOLID. I think that a developer should be able to clearly describe the responsibility of a single class in one sentence.

Start with testing in mind, not with a coverage number. It is vital to keep in mind that tests are intended to ensure your code works as designed and without defects; tests are NOT intended for high coverage numbers that can be shown to the boss. Thus always write tests for the more important and sensitive code first, and only then move towards covering less important or easy-to-test code.

Refactor! Never write code you don’t like. It is fine to hate it the next day, but not at the moment when you are writing it. Usually crap code starts to appear when you try to add functionality which was not planned before. You must refactor constantly (the same applies to your unit tests). Keep everything in sync.

Be a 100% good programmer. Don’t spoil yourself with 80% coverage or with just 60%. If someone said you are a 75% good programmer, would you like it? Well, it is a high number, isn’t it? I was a worse developer a few months ago than I am today. A year ago I would have disagreed with today’s me. High coverage, if used right, means that you know that your code works and that it is readable/refactorable/decoupled/structured/… and most of all, that it is highly maintainable.
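The advice above about isolating external dependencies and excluding them explicitly can be sketched roughly like this. It is written in Python and assumes the coverage.py tool, whose default `# pragma: no cover` marker excludes a block from the report; in .NET the `[ExcludeFromCodeCoverage]` attribute plays a similar role. The names `RateProvider`, `FakeRateProvider` and `convert` are made up for illustration only.

```python
class RateProvider:
    """Thin boundary around an external service (hypothetical)."""

    def fetch_rate(self, currency):  # pragma: no cover
        # The real network call would live here. It is excluded from the
        # coverage report on purpose and exercised by integration tests.
        raise NotImplementedError("talks to the external service")


class FakeRateProvider(RateProvider):
    """In-memory replacement used by unit tests."""

    def __init__(self, rates):
        self._rates = rates

    def fetch_rate(self, currency):
        return self._rates[currency]


def convert(amount, currency, provider):
    """Pure logic that stays in the coverage report and is fully tested."""
    rate = provider.fetch_rate(currency)
    return round(amount * rate, 2)
```

The point of the design is that the untestable part shrinks to one tiny method with no logic in it, the exclusion is visible right in the code, and everything that can actually contain a bug (here `convert`) is still counted towards the 100%.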

I hope my opinion sounds sensible!

Till next time…

Further reading:

- I strongly recommend reading this paper: “How to Misuse Code Coverage”
- There are research papers on this matter. In “Experiments on the effectiveness of dataflow- and controlflow-based test adequacy criteria” the authors “evaluate all-edges and all-uses coverage criteria using an experiment with 130 fault seeded versions of seven programs and observed that test sets achieving coverage levels over 90% usually showed significantly better fault detection than randomly chosen test sets of the same size. In addition, significant improvements in the effectiveness of coverage-based tests usually occurred as coverage increased from 90% to 100%.” (quoted from an MS research paper).

(*) I could agree with many of the exceptional situations you are thinking about. I would agree that with old systems it is difficult to do what I ask you to, and I would also agree that when there are deadlines it is hard to stand against them. There are bad programmers around, bad decisions being taken and many other conditions. In the end it is your job to do the job right. And if you cannot, change the company or change the company.