December 11, 2016 Personal 6 comments

My Dell Laptops History And New Dell Precision 7510

The time has come to buy a new beefy laptop for my blogging :). This time I bought a Dell Precision 7510.

I have a history of buying Dell laptops. You can call me a Dell fan if you want, but really I just keep buying them because they work and I have never had any issues with them, except when I spilled tea on my XPS 13 and had to replace the keyboard and screen. I’ve made a few upgrades to the XPS 16 (RAM, SSD, battery), and now my wife actively uses it for photo editing and general home use. The XPS 13 is in some aspects as powerful as the XPS 16 and at the same time weighs only 1.3 kg. It is really easy to carry everywhere. When I bought it I said that it is “as thin as a Mac Air and as powerful as a Mac Pro, but costs less”. Unfortunately, over time I did not feel very productive on it. Even though I could do everything I needed, I couldn’t comfortably run a heavy IDE or VMs, or play games that required dedicated graphics. I felt I needed a proper workstation.

Deciding on a new workstation went terribly wrong: I spent around 8 hours comparing options.

I was seriously considering a desktop PC instead of a laptop, but eventually leaned towards powerful laptops that can be easily docked if needed. I was choosing between different Lenovo and Dell models (yep, no Mac). I settled on the Precision 7510 because it is a real workstation. It comes with a Thunderbolt interface, it is highly configurable, and it is a brand I have used for a long time. Another reason for choosing Dell was pricing. Since I was buying at dell.at as a small business, I was able to customize my purchase very granularly: I removed unnecessary support and useless stickers, and selected Ubuntu as the OS and cheap delivery, something Lenovo was not offering. As for hardware, I chose to reasonably max out the things I’m not going to upgrade (CPU, GPU) and leave room for other upgrades (RAM, HDD). I didn’t choose the 4K touch display, as I don’t think it makes any sense at 15″.

Below are the specifications of all my Dell laptops:

| | Dell Studio 1535 | Dell Studio XPS 1647 | Dell XPS 13 | Dell Precision 7510 |
| --- | --- | --- | --- | --- |
| CPU | Intel® Core™ 2 Duo T5850 2.16GHz | Intel® Core™ i7-620M (Prev Gen, 2 Cores, 4 Threads, 4M Cache, up to 3.33GHz) | Intel® Core™ i7-3537U (3rd Gen, 2 Cores, 4 Threads, 4M Cache, up to 3.1GHz) | Intel® Core™ i7-6920HQ (6th Gen, 4 Cores, 8 Threads, 8M Cache, up to 3.80 GHz) |
| Display | LCD (1280×800) | 15.6″ FHD Widescreen WLED LCD (1920×1080) | 13.3″ Hi-Def (1080p) True Life WLED Display W/1.3MP | 15.6″ UltraSharp FHD IPS (1920×1080) |
| Optical drive | DVD Super Multi | 8X CD/DVD Burner | – | – |
| RAM | 2GB DDR2-667 | 8GB Shared Dual Channel DDR3-1333MHz (originally 4GB) | 8GB Single Channel DDR3-1600MHz | 16 GB (2 x 8 GB) DDR4-2667 MHz (two more slots available) |
| Storage | 320GB 5400RPM | 256GB SSD (originally 512GB 7200RPM) | 256GB SSD | 256GB M.2 PCIe SSD (I added a second 512GB 7200RPM HDD) |
| GPU | ATI Mobility Radeon™ HD 3450 | ATI Mobility Radeon™ HD 5730 1GB GDDR3 | Intel HD Graphics 4000 | Nvidia Quadro M2000M 4GB GDDR5 |
| Audio | High Definition Audio | High Definition Audio 2.0 with SRS Premium Sound | Wave Maxx Audio | Some Audio |
| Wireless | Dell Wireless 1397 WLAN Mini-Card | Intel® 5300 WLAN Wireless-N (3×3) Mini Card | Killer Wireless-N, 1202 for Video & Voice w/ BT 4.0 | Intel® Dual Band Wireless-AC 8260 |
| Battery | 56 WH, 6 cell, LI-ION | 85 WH, 6 cell, LI-ION | 47 WH, 6 cell, LI-ION | 72 WH, 6 cell, LI-ION |
| Status | Bought late 2008, alive and used by Mom for Skype, audio-jack bad, battery dead. | Bought Sep 2010, heavily used, became loud, upgraded with RAM and SSD, battery replaced. | Bought Nov 2013, actively used, no upgrades, screen and keyboard replaced because of tea spill, battery completely healthy. | Bought Dec 2016, using it right now, added second HDD, planning for more RAM when time comes. |

I ran benchmark software on the XPS 16, the XPS 13, and the Precision. While the 16 and the 13 were somewhat comparable, the Precision rocked. CPU speed was 2X to 3X higher depending on the kind of operations (floating point, integer). GPU speed was 14X that of the XPS 13 and 3X that of the XPS 16. RAM speed was 3X that of the XPS 16 and 1.5X that of the XPS 13. SSD write speed was 2X that of both.

Lots of numbers, but I can simply feel the difference. It is a pleasure to use a fast machine. Who knows what my fifth column will look like.

Do not misuse or over abstract AutoMapper

November 27, 2016 AutoMapper, Opinion 15 comments

AutoMapper is a great little library every .NET project is using (well, lots of them). I used it for the first time in 2010 and wrote a blog post about it.

Since then I have observed a few things:

- Almost every project I worked on that needed some kind of object mapping was using this lib. In rare cases there was some pet library or manual mapping in place.

- Almost every project had some abstraction over the library, as if it were going to be replaced or as if a different mapping implementation would be needed.

- The basic API of the library didn’t change at all. `CreateMap` and `Map` are still there and work the same. At the same time performance, testability, exception handling, and feature richness improved significantly. The last one, in my opinion, is not such a good thing, as it leads to the next point.

- In many of those projects AutoMapper was simply misused: code placed in `AfterMap` or in different kinds of resolvers would start containing crazy things. In the worst of those cases actual business logic was written in resolvers.

I have always been of the opinion:

Less Code – Less Bugs; Simple Code – Good Code.

Having seen this trend with the library, I would like to suggest simplifying its usage by limiting ourselves. Simply:

- Use AutoMapper only for simple mapping. Basically, one property to one property. Preferably, the majority of property mapping is done by the same name. If you find yourself in a situation where over half of your mappings are specified explicitly in the `ForMember` method, it may be a case for doing it manually (at least for the specific type) – it will be cleaner and less confusing.

- If you have some logic to add to your mapping, do not add it via AutoMapper. Write a separate interface/class and use it (via DI) where your logic has to be applied. You will also be able to test it nicely this way.

- Do not abstract AutoMapper behind interfaces/implementations. I’ve seen it abstracted in a way that requires creating a class (empty in many cases) for each mapping type pair, with custom reflection code somewhere that initializes all of the mappings. Instead, use the built-in AutoMapper `Profile` class and `Mapper.Initialize` method. If you still want to have at least some abstraction to avoid referencing AutoMapper everywhere, make it simple.

Here is how I’m using AutoMapper these days:

Somewhere in CommonAssembly a very-very simple abstraction (optional):

Somewhere in BusinessLogicAssembly and any other where you want to define mappings (can be split in as many profiles as needed):

Somewhere in startup code in BootstrappingAssembly (Global.asax etc):

And here is the usage:

That’s it. I do not understand why some simple things are made complex.

There is also another advantage of keeping it minimalistic – maintainability. I’m working on a relatively new project that was created from a company’s template; as a result it had an older version of AutoMapper abstracted away. Upgrading it while keeping all the old interfaces would mean some work, as the abstraction used some of the APIs that did change. Instead I threw away all of these abstractions and upgraded the lib. Next time there will simply be far less code to worry about when upgrading.

Please let me know if you share the same opinion.

Synch Two Git Repositories Using Bundle File And USB-stick

November 16, 2016 git, Tools No comments

Imagine working on the same code base in two disconnected networks. How would you synchronize your repositories using a rudimentary storage device, like a USB-stick?

There are undeniably multiple solutions for such a synchronization, ranging from very primitive manual copying of cloned repositories to specialized devices and synch processes.

I came up with something intermediate, to be used until the project setup changes.

The idea is very simple:

1. USB-sharing device, so that USB-stick can be shared with a press of a button (physical in this case)

2. git bash script that does the following:

- Tries to connect to both repositories to identify which one is accessible

- Fetches sources from available repository

- Fetches sources from bundle file on USB-stick (git bundle file is like a zip file with history and all files)

- Tries to merge the two with the flag `--allow-unrelated-histories` so that history is completely preserved

- If merge succeeds it pushes changes to available repository and recreates bundle

- If merge fails, you would need to manually resolve conflicts and push

3. A task to trigger the synch script when USB-stick with bundle is connected (I do not have this one yet, but it is a next logical step)

If two repositories were available at the same time the same script (with modifications) could be used to synchronize them on schedule or trigger event.

Here is the code of the script:

It is also available on GitHub under the MIT license. Hopefully it comes in handy.
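The steps listed above can be sketched roughly as follows. This is not the script from the repository, just a minimal illustration under a few assumptions: the function names `pick_reachable_remote` and `sync_with_bundle`, the argument order, and the single-branch layout are all hypothetical, and the bundle path has to be absolute.

```shell
#!/usr/bin/env bash
# Minimal sketch of a bundle-based sync. Function names, arguments and the
# single-branch layout are illustrative assumptions, not the published script.

# Print the first URL/path that answers `git ls-remote`; fail if none does.
pick_reachable_remote() {
  local url
  for url in "$@"; do
    if git ls-remote --exit-code "$url" >/dev/null 2>&1; then
      printf '%s\n' "$url"
      return 0
    fi
  done
  return 1
}

# Usage: sync_with_bundle <work_dir> <bundle_file> <branch> <remote>...
# <work_dir> is wiped and re-cloned on every run; <bundle_file> must be an
# absolute path because git is run from inside <work_dir>.
sync_with_bundle() {
  local work_dir=$1 bundle=$2 branch=$3 remote
  shift 3

  remote=$(pick_reachable_remote "$@") || {
    echo "no repository is reachable" >&2
    return 1
  }

  # Fetch sources from the repository that is available in this network.
  rm -rf "$work_dir"
  git clone --quiet --branch "$branch" "$remote" "$work_dir" || return 1

  # Fetch sources from the bundle on the USB-stick (if there is one) and merge.
  if [ -f "$bundle" ]; then
    git -C "$work_dir" fetch --quiet "$bundle" "$branch" || return 1
    if ! git -C "$work_dir" merge --quiet --allow-unrelated-histories \
         -m "Merge changes from bundle" FETCH_HEAD; then
      echo "merge failed: resolve conflicts in $work_dir and push manually" >&2
      return 2
    fi
    git -C "$work_dir" push --quiet origin "$branch" || return 1
  fi

  # Recreate the bundle so the other network receives the merged history.
  git -C "$work_dir" bundle create "$bundle" "$branch"
}
```

A call could look like `sync_with_bundle /tmp/sync-work /media/usb/project.bundle master https://git.network-a.example/project.git https://git.network-b.example/project.git`, where both URLs and paths are placeholders. Only the repository reachable from the current network is used.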

Single Git Repository for Microservices

November 14, 2016 Opinion No comments

I recently joined a team. We write an intranet web application. There is nothing too special about it, except that it was designed to be implemented as microservices, while de facto at the moment it is a classical single .NET MVC application. This happened for a simple reason: meeting the first release deadline.

The design was reflected in how source control was set up: one git repository per service. Unfortunately this required a number of maneuvers to stay in synch and to push changes, as the team was making changes scattered across multiple repositories. It also made it more difficult to consolidate NuGet packages and other dependencies, as all of them were in different repositories.

I think that microservices, and the corresponding hard reflection of their boundaries in the form of source code repositories, should evolve naturally. Starting with a single repository sounds more reasonable. If you keep the idea of microservices in your head and nicely decouple your code, nothing stops you from creating new repositories as your service boundaries start to take shape.

Taking this into account, we merged the repositories into one. The only question was how to keep the source code history. It turns out the history can be easily preserved by employing the git subtree command and placing all of the service repositories as subdirectories of a new single repository.

As a result, the team is working much more effectively, as we do not waste time on routine synching and on checking who did what where.

Conclusion: In theory, microservices should be implemented in their own repositories. That’s true, but in practice, for a relatively small and new project with only one team working on it, a single repository wins.
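A rough sketch of such a merge is shown below. These are not the exact commands we ran: the function name `merge_into_monorepo`, the branch name, and the directory layout are hypothetical. The sketch assumes that `git subtree` is installed, that the target repository already has at least one commit, and that its working tree is clean.

```shell
#!/usr/bin/env bash
# Sketch: pull several service repositories into one repository, each into
# its own subdirectory, keeping their commit history (no --squash).
# The function name and arguments are illustrative assumptions.

# Usage: merge_into_monorepo <monorepo_dir> <branch> <service_repo>...
merge_into_monorepo() {
  local mono=$1 branch=$2 repo name
  shift 2

  for repo in "$@"; do
    # Use the repository name (without a trailing .git) as the subdirectory.
    name=$(basename "$repo" .git)
    git -C "$mono" subtree add --prefix="$name" "$repo" "$branch" || return 1
  done
}
```

For example, `merge_into_monorepo ~/src/platform master ../orders-service ../billing-service` (placeholder paths) would create `orders-service/` and `billing-service/` subdirectories, and `git log` in the new repository would still show the original commits of both services.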

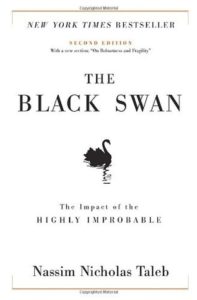

Book Review: “The Black Swan”

October 30, 2016 Book Reviews No comments

A black swan is a highly improbable event with three principal characteristics: It is unpredictable; it carries a massive impact; and, after the fact, we concoct an explanation that makes it appear less random, and more predictable, than it was. The astonishing success of Google was a black swan; so was 9/11. For Nassim Nicholas Taleb, black swans underlie almost everything about our world, from the rise of religions to events in our own personal lives.

I have listened to the audio version of “The Black Swan” twice: the first time at the beginning of the year and the second time just recently. The book is philosophical in a way. It is not very easy to fully comprehend the conveyed message, as the author frequently digresses into fictional stories, terms in French, and thinkers who are long dead.

Two statements in the book struck me: that “anyone can be a president” and a remark about someone like “these people can get a Nobel prize”. Sounds topical? Think of the Trump vs. Clinton presidential race and Bob Dylan receiving the Nobel Prize in Literature, in case you are not reading this in autumn 2016.

This does not mean that Nassim Taleb is any sort of predictor or prophecy maker. He himself says that he cannot make predictions. Instead he highlights over and over again that rare events that seem improbable occur more frequently than most of us would imagine, and that at the same time it is impossible to come up with mathematical models that would calculate probabilities for these events. Unfortunately, we cannot know what we don’t know, therefore the best strategy for any of us is to build robustness to black swan events.

The ideas expressed in the book can be applied very widely. They start with building a financial portfolio consisting of 90% very safe investments and 10% extremely risky ones, thereby exposing yourself to the chance of catching a black swan like Google or Facebook. They end with applying the same approach to your life by exposing yourself to a variety of endeavors. Careful here: an event’s consequences are even harder to predict than the occurrence of such events.

There is one aspect of the book that I don’t like. Almost throughout the book the author despises other people, imagining them as aggressive apes and suggesting nasty things like putting a rat down someone’s shirt. I do not exclude that he imagines his readers in the same way: silly monkeys reading a higher caliber philosophical work. This, though, does not disqualify his book from being a really valuable contribution to human knowledge. In my opinion, however, it is only the black swan event of him benefiting from the 2000 crisis that made him successful and subsequently allowed him to write this and other books.

Conclusion

This book is definitely worth reading. It may make you look at the world as sequences of improbable events that change everything around. It could also make you way more skeptical about the theoretical modeling suggested by economists and other tie wearing experts. The book is not an easy read. On the contrary, it requires a lot of attention and thinking. Maybe leave it for a time when you are in a “philosophical” mood.

How to add Microsoft.Extensions.DependencyInjection to OWIN Self-Hosted WebApi

I could not find an example showing how to use Microsoft.Extensions.DependencyInjection as IoC in OWIN Self-Hosted WebApi, so here is this blog post.

Let’s imagine that you have a WebApi that you intend to self-host using OWIN. This is fairly easy to do. All you need to do is use the Microsoft.Owin.Hosting.WebApp.Start method and then do a bit of configuration on IAppBuilder (check out ServiceHost.cs and WebApiStartup.cs in the gist below).

It becomes a bit more complicated when you want to use an IoC container, as OWIN’s implementation takes care of creating Controller instances. To use another container you will need to tell the configuration to use implementation of IDependencyResolver (see WebApiStartup.cs and DefaultDependencyResolver.cs). DefaultDependencyResolver.cs is a very simple implementation of the resolver.

In case you are wondering what Microsoft.Extensions.DependencyInjection is: it is nothing more than lightweight DI abstractions and a basic implementation (github page). It is currently used in ASP.NET Core and EF Core, but nothing prevents you from using it in “normal” .NET. You can integrate it with more feature-rich IoC frameworks. At the moment it looks like Autofac has the best integration and nice documentation in place. See OWIN integration.

The rest is just the usual IoC clutter. See the gist.

I hope this blog post helps you with integrating Microsoft.Extensions.DependencyInjection in WebApi hosted via OWIN.

Ember upgrade from 1.7.0 to 1.13.13

October 4, 2016 EmberJS No comments

Obviously there are general upgrade guides provided by Ember team and many fellow bloggers. This is just to document one of the experiences with upgrading from ember 1.7.0 to 1.13.13.


At the time of this writing the latest stable version of Ember is 2.8. My team was one of the early adopters of Ember. I believe the team started incorporating it in late 2013, which is very soon after the 1.0 release. We went live with version 1.7 at the beginning of 2015, and since then we didn’t do any updates for “penny wise and pound foolish” reasons.

The upgrade itself was a bit of a pain, as it was spread over a couple of months. We allocated a few days per sprint and at the same time continued developing new features the old way in other branches. Bad idea.

Another pain was that we adopted Ember Data while it was still in beta. As a result we have custom code altering the adapter’s and serializer’s behaviour. There are many breaking changes between beta versions of Ember Data, so using it while in beta was a very bad idea.

One of the great things about being on Ember 1.13.13 is that you are effectively on 2.0.0 unless you have deprecation messages in your console. This also means that you can still release your application with some parts not yet completely converted to the new way of doing things. ember.prod.js doesn’t generate warnings and works just fine. I really like the way Ember tries to make upgrading easy. Here is a nice write-up on handling deprecations as of 2.3.0.

List of useful links:

- Ember 1.x Change Log

- Ember Data 1.x Change Log

- The Road to Ember 2.0 and some Slides

- Deprecations Guide for Ember 1.x

Gotcha list:

This list is composed from notes I took so it is not very well organized and does not contain all of the items we had to fix.

When upgrading from 1.7 to 1.8

- Update ember data to 1.0.0-beta.11 for compatibility and as a fix for “Ember Data cannot read property 'async' of undefined"

- Replace `pushObject` with `addRecord` to fix “You looked up the relationship on a with id but some of the associated records were not loaded.”

- This does not work and has to be rewritten: `Ember.Handlebars.helpers.render.call(this, name, contextString, options)`

- Use `Ember.set()` wherever a property such as `controller.isNew` was being set

When upgrading from 1.8 to 1.10.1

- Start using `HTMLBars` instead of `Handlebars` by incorporating `ember-template-compiler`

- Add stripBOM to the grunt file… as the template compiler includes those: `template = template.replace(/\uFEFF/g, ''); // remove BOM`

- Fix HTML to be correct (closing tags, missing tbody, etc)

- ember-data 1.0.0-beta.11 has a bug in findQuery asserts => upgrade ember-data to 1.0.0-beta.12

- Remove `//# sourceMappingURL=ember-data.js.map`

- Ensure models are generated with `{async: false}`

- Remove code that works with `MetamorphView`. Fixes this: “Assertion Failed: A fragment cannot be pushed into a buffer that contains content” because of: `view.createChildView(Ember._MetamorphView, {`

When upgrading from 1.10.1 to 1.11.4

At this point you start to get tons of deprecation messages.

- “You attempted to access `someProperty` from `<App.SomeXyzController:ember2661>`, but object proxying is deprecated. Please use `model.someProperty` instead.”

- Convert this `{{action bubbles=false preventDefault=false}}` to this `{{action "ok" "close" "cancel" bubbles=false preventDefault=false}}`

- Fix dynamic compilation of HTMLBars. “Cannot call `compile` without the template compiler loaded. Please load `ember-template-compiler.js` prior to calling `compile`.” `Ember.HTMLBars.compile(submitHtmlTemplate);` doesn’t produce a correct function to retrieve HTML. Can be solved as in this SO answer.

- Using `@each` at the end of a computed key is deprecated and will not work in Ember 2.0

- Replace `Ember.Enumerable.mapProperty` with `mapBy`

- Replace `Ember.Handlebars.helper` with `Ember.Helper.helper`

When upgrading from 1.11.4 to 1.13.13

- Rewrite code that uses `itemController`

- Rewrite properties that have a setter to the new computed syntax

I hope this comes in handy for you.

Running Challenge Completed. Health, Motivation, and Other Aspects Of Running

October 2, 2016 Opinion No comments

Are you a programmer bent by scoliosis? Do you spend too much time sitting? Maybe you look a bit flabby? Super tired at and after work? Chances are high that some of these are true for you. Some certainly are true for me. This year I tried to improve my situation by running. Here are my thoughts and humble recommendations.

Running and its impact on your Health

“Being physically active reduces the risk of heart disease, cancer and other diseases, potentially extending longevity.” Many studies show this, according to this article. Running is probably one of the most accessible forms of exercise. All you need to start is a pair of shoes. This research paper is a good resource for learning about the impact of running and other exercise on chronic diseases and general mortality.

So why running? – Because it is easy to start with and because we are made for it. “Humans can outrun nearly every other animal on the planet over long distances.” – says this article. Funnily enough, there is a yearly Human vs. Horse marathon competition.

If you ask yourself what you want your last two decades before death to look like, most likely you would picture a healthy, mobile, and socially active person. You would also prefer those two decades to be your 80s and 90s, right? Light running for as little as 1 hour a week could add as much as 6 years to your life. This long-term study showed that “the age-adjusted increase in survival with jogging was 6.2 years in men and 5.6 years in women.” (For those who are pedantic and want to know the net win: (2 hrs/wk * 52 wks a year * 50 years) / 16 waking hours a day = 325 days lost to running, which still gives you a net gain of about 5 years.)

Motivation

At my age I do not think about death that much. My main motivation for running is improving my health. I know that for many people extra weight is a motivating factor. For me it is not: instead of losing weight I gained some 2-3 kg. I’m probably so skinny that there is no fat to lose, though there is room for leg muscle growth. Unfortunately running is often boring, and it is very hard to get yourself outside and go for a run on that nasty cold day. Here are a few things that helped me run this year:

Run different routes

Always running at the same location and taking the same path is boring. If you travel somewhere, just take your shoes with you and go for a run in the new place. Not only do you get another run, but you also explore the location. I ran in five different countries this year and can tell that those runs were more interesting than the usual ones next to home.

Join friends or running club

I was going for runs rather rarely at the beginning of the year, but later, as I started running with friends, I started to run more frequently. It is always much more pleasant to have a conversation and learn a few new things from friends, especially if your interests overlap in more than just running.

Sign up for a challenge

Sports are competitive by nature. You can have friendly competition with your fellow runners, or you can take a virtual challenge. That works great because the clock is ticking and you want to have it done. This September I took it to the next level by signing up to Strava monthly challenges. I have completed all of them. See Trophy Case below:

Be careful and avoid injury

You don’t want to walk with a cane when you are old because you were too stupid and ran too much and too hard when young. I’m sick of running because of this September challenge. I completed it, but I ran the last two runs injured and through the pain. I’m recovering now using the RICE recovery technique. From now on I will take it easier. I suggest the same for you.

You can do it

I’m not a good runner. At the beginning of the year I could barely run 5 km, and I didn’t know how I would complete my planned 40 runs, as it was so hard. Running 40 times was my main goal for the year. I did not expect that I would run around 50 times, totaling 320 km. And it is not the end of the year yet. I also ran a half-marathon distance, running atop the Kahlenberg hill next to Vienna. If I can do it – you can!

Conclusion

I completely agree with the research and studies saying that “for majority of people the benefits of running outweigh the risks”, and yet I voluntarily ran through an injury just to complete my challenge. Motivation is an important factor, but runners have to be careful and moderate their exercising. This is especially true if you run for health reasons. Just try to make your runs more interesting and enjoy your life… longer.

Book Review: What If?: Serious Scientific Answers to Absurd Hypothetical Questions

September 20, 2016 Book Reviews No comments

I read this book because of a recommendation I found online. It was on one of Bill Gates’s reading lists. Maybe this post will have the same effect on you… and you will decide to pick it up for your reading.


“They say there are no stupid questions,” the author writes. “That’s obviously wrong. . . . But it turns out that trying to thoroughly answer a stupid question can take you to some pretty interesting places.”

Indeed, I remember we had a lot of hypothetical scientific discussions with my friends during my university days, and these discussions would just go on and on, ending sometime around 2AM. It was a lot of fun diving deep and exploring every possible twist. You get to have the same fun when you read the book.

Well… it is actually more fun to read the book, since it is very nicely illustrated and thoroughly researched. Basically, you don’t get to read a rant – everything is cross-checked with scientists by Munroe.

I guess this book also teaches you how to approach problems that require scaling, approximation, simplification, calculation, and estimation. It also helps to understand physics better. And if you have some silly question that keeps you awake at night, you can always submit it on the xkcd web site.

This book is pure entertainment and joy. I can surely recommend this book to anyone generally interested in science.

Book Review: Coders At Work

April 26, 2016 Book Reviews No comments

The book “Coders At Work” is a collection of interviews of famous coders. The book takes a reader through 15 career stories. It inspires and gives insight into minds of great programmers.


Most of the selected coders are of older generations, so at times it is hard or, rather, not exceptionally interesting to follow some of their career endeavours, especially if the whole career revolved around one system or one language that is “dead” today (think of COBOL, Fortran, PDP-1, etc). I don’t mind knowing the history of the industry, but I’m not interested in too much of the detail (you can blame me if you wish). On the other hand, it was very interesting to try to understand how these people think and how they approached the problems they had. I really believe there is a lot to learn from them.

The author, Peter Seibel, starts the interviews with a usual but interesting question about how the interviewees got involved in programming. Then the author proceeds to questions that relate somehow to what the interviewees have done in their careers. There are standard questions about debugging, favorite editor, opinions on some programming languages, and others.

I liked the answers to the questions on how they hired people and what advice they can give to young programmers.

There were a couple of questions that I didn’t like. One was “Do you consider yourself a scientist, an engineer, an artist, or a craftsman?”. I think it is a pointless question. These nouns can be interpreted in different ways and any answer would be just fine.

Throughout the book there was a discussion about the importance of computer science education and one of the questions was if interviewees have read the book “The Art Of Computer Programming”. It was kind of introduction to the last interview with Donald Knuth. I liked the interview with him, but I’m not sure if the author had to put so much emphasis on this personality.

The list of interviews is available on the book’s website, with short descriptions of who those people are and what they did. To be honest, before reading “Coders At Work”, I only knew about Douglas Crockford, Joshua Bloch, and Donald Knuth.

Conclusion

The book gives insight into how some of the great programmers achieved what they have achieved. It educates and inspires, though some of the stories might not be very exciting. At times, you may think that other big names would have made the book even more interesting. Nevertheless, I liked reading the book “Coders At Work”.