How to add Microsoft.Extensions.DependencyInjection to OWIN Self-Hosted WebApi

I could not find an example showing how to use Microsoft.Extensions.DependencyInjection as the IoC container in an OWIN self-hosted WebApi, hence this blog post.

Let’s imagine that you have a WebApi that you intend to self-host using OWIN. This is fairly easy to do. All you need is to call the Microsoft.Owin.Hosting.WebApp.Start method and then do a bit of configuration on IAppBuilder (check out ServiceHost.cs and WebApiStartup.cs in the gist below).

It becomes a bit more complicated when you want to use an IoC container, as OWIN’s implementation takes care of creating Controller instances. To use another container you need to tell the configuration to use your own implementation of IDependencyResolver (see WebApiStartup.cs and DefaultDependencyResolver.cs). DefaultDependencyResolver.cs is a very simple implementation of the resolver.
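The wiring described above can be sketched roughly as follows. This is a minimal sketch and not the code from the gist: the class bodies are assumptions, and it presumes the Microsoft.AspNet.WebApi.OwinSelfHost and Microsoft.Extensions.DependencyInjection packages are referenced.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Web.Http;
using System.Web.Http.Controllers;
using System.Web.Http.Dependencies;
using Microsoft.Extensions.DependencyInjection;
using Owin;

// Assumed implementation: adapts IServiceProvider to Web API's IDependencyResolver.
public class DefaultDependencyResolver : IDependencyResolver
{
    private readonly IServiceProvider _provider;
    private readonly IServiceScope _scope; // null for the root resolver

    public DefaultDependencyResolver(IServiceProvider provider) : this(provider, null) { }

    private DefaultDependencyResolver(IServiceProvider provider, IServiceScope scope)
    {
        _provider = provider;
        _scope = scope;
    }

    // Returns null for unregistered types, which is what Web API expects.
    public object GetService(Type serviceType) => _provider.GetService(serviceType);

    // Returns an empty sequence for unregistered types.
    public IEnumerable<object> GetServices(Type serviceType) => _provider.GetServices(serviceType);

    // Web API calls this once per request; the scope is disposed with the request.
    public IDependencyScope BeginScope()
    {
        var scope = _provider.CreateScope();
        return new DefaultDependencyResolver(scope.ServiceProvider, scope);
    }

    public void Dispose() => _scope?.Dispose();
}

public class WebApiStartup
{
    public void Configuration(IAppBuilder app)
    {
        var services = new ServiceCollection();

        // Register controllers so the container can inject their constructor dependencies.
        var controllers = typeof(WebApiStartup).Assembly.GetExportedTypes()
            .Where(t => !t.IsAbstract && typeof(IHttpController).IsAssignableFrom(t));
        foreach (var controller in controllers)
        {
            services.AddTransient(controller);
        }

        var config = new HttpConfiguration();
        config.MapHttpAttributeRoutes();
        config.DependencyResolver = new DefaultDependencyResolver(services.BuildServiceProvider());
        app.UseWebApi(config);
    }
}
```

Hosting would then be a call such as `WebApp.Start<WebApiStartup>("http://localhost:9000")`, where the URL is a placeholder.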

In case you are wondering what Microsoft.Extensions.DependencyInjection is: it is nothing more than a set of lightweight DI abstractions with a basic implementation (github page). It is currently used in ASP.NET Core and EF Core, but nothing prevents you from using it in “normal” .NET. You can integrate it with more feature-rich IoC frameworks. At the moment it looks like Autofac has the best integration and nice documentation in place. See OWIN integration.

The rest is just the usual IoC clutter. See the gist.

I hope this blog post helps you with integrating Microsoft.Extensions.DependencyInjection in WebApi hosted via OWIN.

Book Review: C# in Depth

November 27, 2015 .NET, Book Reviews, C# No comments

I’ve been writing C# code for more than 10 years by now and yet had a lot to learn from the book.


The book is written by Jon Skeet (the number one guy at StackOverflow) and is purely about the C# language. It distances itself from the .NET framework and the libraries supplementing the framework.

The structure of the book follows the C# language versions. This isn’t particularly useful if you are trying to learn the language by reading this book after some other introductory book. For software professionals, though, it could be a joy, since it takes you through your years of experience with the language. It tends to remind you about times when something wasn’t possible and later became possible. A kind of nostalgia, I would say.

The first chapters could be somewhat boring. I read the book cover to cover, since I didn’t want to miss out on anything, but that probably isn’t the best way to read it.

Jon is very pedantic when it comes to defining anything. This, of course, is double-edged: very good when you have strong knowledge and understand the components of the definition, but otherwise it complicates understanding. There were some places in the book which I had to read a few times to completely understand. For instance, in the chapter about covariance and contravariance:

[…] the gist of the topic with respect to delegates is that if it would be valid (in a static typing sense) to call a method and use its return value everywhere that you could invoke an instance of a particular delegate type and use its return value, then that method can be used to create an instance of that delegate type.

On the other hand, I found it very important that details are not omitted. I was reading the book for its depth, so detail and precision are exactly what I was expecting, even though they come at the price of slower comprehension.

You may have a different background than I do, but if you are a .NET developer with some years of experience, chances are the only chapters with new and difficult information will be those that do not reflect everyday practical usage of the C# language. For example, all of us use LINQ these days, but very few of us need to know how it works internally or need to implement our own LINQ provider. Very few of us would dig into the DLR or into how async/await works.

In almost every chapter there was something that added to my knowledge. For me the most important chapter to read was “Chapter 15. Asynchrony with async/await”, since I have very limited experience with this feature.

Conclusion

In my opinion, the best two books ever for C# programmers are “CLR via C#” and “C# in Depth”, meaning that I just added one to this list.

“C# in Depth” is a great, precise, and thorough book to complement and enrich your knowledge.

I highly recommend reading it.

What is the point of the ‘event’ keyword in C#?

October 31, 2015 .NET, C# No comments

Do you know what a delegate and an event are in C#? Can you clearly explain in one or two sentences what the difference between the two is?

It might sound simple, as we .NET developers use these quite frequently. Unfortunately it isn’t that straightforward, especially when you want to be precise. Just try it aloud now.

The first difficulty comes when you try to differentiate between a delegate type and an instance of a delegate. The next difficulty comes when you try to explain events. Your explanation may mention subscribing to and unsubscribing from an event. But, hey, you can do exactly the same with a delegate instance by using exactly the same “+=” and “-=”. Have you thought about this? The real difference is that with an exposed event this is all that external code can do. With an exposed delegate instance, external code can do other things as well, like replacing the whole invocation list by using “=” or invoking the delegate right away.

An event is nothing more than an encapsulation convenience over a delegate, provided by the C# language.

On a conceptual level, an event is probably much more than just some convenience, but that’s beside the point in this post.

To feel the difference, all you need is to write some code. Nothing helps to understand things better than writing code and reading quality resources.

Please see below my attempt at understanding the difference between events and delegates. I added plenty of comments to make it self-explanatory.

Triggering a person’s sickness event is the responsibility of the person’s internals (the stomach, in this example), and it is correct for it to be encapsulated in the Person class; otherwise external code would be able to make a person sick (I like this double meaning).
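The encapsulation difference can be illustrated with a small sketch. The `Person` class below is a simplified stand-in rather than the original gist: the member names and the "spoiled fish" trigger are made up for illustration.

```csharp
using System;

public class Person
{
    // Field-like event: outside code may only subscribe (+=) or unsubscribe (-=).
    public event EventHandler FeltSick;

    // Public delegate field, for comparison: outside code can also reassign or invoke it.
    public EventHandler FeltSickExposed;

    // Only the person's internals decide when the person gets sick.
    public void Eat(string food)
    {
        if (food == "spoiled fish")
        {
            FeltSick?.Invoke(this, EventArgs.Empty);
            FeltSickExposed?.Invoke(this, EventArgs.Empty);
        }
    }
}

public static class Program
{
    public static void Main()
    {
        var person = new Person();
        var notifications = 0;
        person.FeltSick += (sender, e) => notifications++;
        person.FeltSickExposed += (sender, e) => notifications++;

        // person.FeltSick(person, EventArgs.Empty); // does not compile outside Person
        // person.FeltSick = null;                   // does not compile outside Person

        person.FeltSickExposed(person, EventArgs.Empty); // compiles: external code "makes the person sick"
        person.FeltSickExposed = null;                   // compiles: wipes out every subscriber

        person.Eat("spoiled fish");
        Console.WriteLine(notifications); // prints 2: one external invoke, one via the event
    }
}
```

The two commented-out lines are the whole point: the compiler rejects them for the event, while the same operations on the public delegate field go through.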

The next step is to understand what the C# compiler generates when you use the ‘event’ keyword, and how else you can declare an event other than in the field-like style. I don’t describe it in this post; I’ve only read about these details, but they are quite interesting as well.

Proficiency in a programming language comes with a deep understanding of the basics. I keep proving this to myself every now and then.

Load Test use case requiring plugins and synchronous runs for same data

October 5, 2015 .NET, Performance, VS No comments

Load testing is a great way of finding out whether there are any performance issues with your application. If you don’t know what a load test in VS is, please read this detailed article on MSDN on how to use it.

What we want to load test

I have experience with creating load tests for high-load web services in the entertainment industry. At the moment I’m working on an internal web application in which users exclusively “check out” and “check in” big sets of data (I will call them “reports” here). In this post I want to describe one specific use case for load testing.

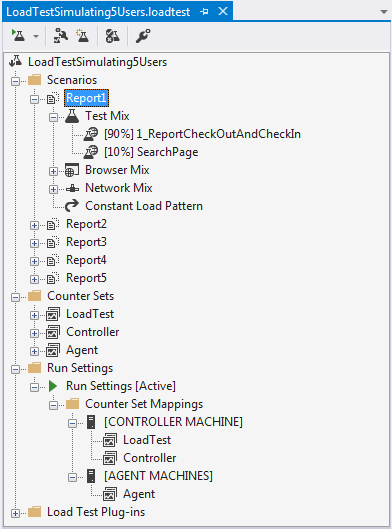

In our application only one user at a time can work with a particular report. Because of this, load tests cannot simulate multiple users working on the same data. Basically, for one particular user and report, testing has to be synchronous: the user finds a report, checks it out, checks it in, and so on for the same report. We want to create multiple such scenarios and run them in parallel, simulating the same user working on different sets of data. This can be extended to really simulate multiple users if we implement some Windows impersonation as well.

There are other requirements for this performance testing. We want to quickly switch the web server we are running these tests against. This also means that the database will be different, therefore we cannot supply requests with hardcoded data.

My solution

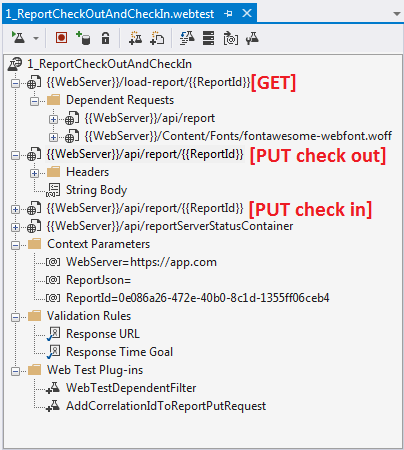

I started by creating a first web performance test using web browser recording. It records all requests to the server in an IE add-on. I recorded one scenario.

Depending on your application you might get a lot of requests recorded. I only left those that are most important and time consuming. One of the first things you want to do is to “Parameterize Web Servers…”. This will extract your server name into a separate “Context Parameter” which you can later easily change. Also, in my case most of the requests are report specific, so I added another parameter called “ReportId” and then used “Find and Replace in Request…” to replace the concrete id with the “{{ReportId}}” parameter.

The recorder obviously records everything “as is”, embedding the concrete report’s JSON into “String Body”. I want to avoid this by extracting “ReportJson” into a parameter and then using it in PUT requests. You can do this using “Add Extraction Rule…” on the GET request and specifying that you want to save the response into a parameter. Now you can use “{{ReportJson}}” in the String Body of PUT requests. As simple as that.

Unfortunately, not everything is that straightforward. When our application does a PUT request it generates a correlationId that is later used to update the client on the processing progress. To do custom actions you can write plugins. I’ve added two of them. One basically skips some of the dependent requests (you can use something similar to skip requests to external systems referenced from your page).

The other plugin I’ve implemented takes the “ReportJson” parameter and updates it with a newly generated correlationId. Here it is:

    using System;
    using Microsoft.VisualStudio.TestTools.WebTesting;

    namespace YourAppLoadTesting
    {
        public class AddCorrelationIdToReportPutRequest : WebTestPlugin
        {
            public string ApplyToRequestsThatContain { get; set; }

            public string BodyStringParam { get; set; }

            public override void PreRequest(object sender, PreRequestEventArgs e)
            {
                if (e.Request.Url.Contains(ApplyToRequestsThatContain) && e.Request.Method == "PUT")
                {
                    var requestBody = new StringHttpBody();
                    requestBody.ContentType = "application/json; charset=utf-8";
                    requestBody.InsertByteOrderMark = false;
                    requestBody.BodyString = e.WebTest.Context[BodyStringParam].ToString()
                        .Replace("\"correlationId\":null",
                            string.Format("\"correlationId\":\"{0}\\\\0\"", Guid.NewGuid()));
                    e.Request.Body = requestBody;
                }

                base.PreRequest(sender, e);
            }
        }
    }

In the plugin configuration I set ApplyToRequestsThatContain to “api/report” and BodyStringParam to “ReportJson”.

To finish my load test, all I had to do was copy-paste a few of these webtests and change the ReportIds. After that I added the webtests to a load test as separate scenarios, making sure that every scenario has a constant load of only 1 user. This ensures that each scenario runs synchronous operations on individual reports.

I was actually very surprised how flexible and extensive load tests in VS are. You can even generate code from your webtest to have complete control over your requests. I would recommend generating the code at least once to understand what it does under the hood.

I hope this helps someone.

Edge.js integration into C# project to run some JavaScript on the server

January 23, 2015 .NET, JavaScript 1 comment

A bit of a story on why we are moving from Jurassic to Edge.js

In a project I’m working on we have plenty of shared logic that we want to execute both on the client and on the server. We decided that we want it to be written in JavaScript. As our backend is .NET, we used the Jurassic library to compile the JavaScript code on the server and then to execute it whenever we needed it. We used Jurassic for quite some time and it worked fine. It is a bit slow on the initial compile of all of our JS code, so our startup time lagged (+30 sec or so). Execution time also left much to be desired. But recently we started to get crashes that seemed to come from the library itself, and it is a real pain to find out what the problem was, since the library doesn’t provide a JS stack trace.

How to integrate Edge.js in C# project

If everything goes smoothly, all you need to do is reference EdgeJs.dll through NuGet and execute your node script using Edge.Func. But life is not that easy.

I would recommend creating a minimalistic project with the basic things that are used in the project you are going to integrate Edge into. This will save you time on testing how the integration works. You can start with the hello world available on the Edge.js page. I’ve also created a simple project with a few things I wanted to test out, mainly loading of modules, passing data in and getting results back.

Below are three files from my sample project. It should be easy to follow the code, plus there are some comments just below the code.

In Program.cs you can see how easy it is to invoke Edge and pass in a dynamic object. Getting the result back is also super easy. If you are working with large objects you might want to convert the object to JSON and then pass it into your JavaScript. This is what we do using Newtonsoft.Json.

Edge.Func has to accept a function of a specific signature with a callback being called. Alternatively you can have a module that itself is a function and then load it. This is exactly what I did with the module edgeEntryPoint.js. To do something useful, I’m trying to dynamically find an object ‘className’ and call a function ‘functionName’ on it, plus I send some other parameters. This is somewhat similar to what we want to achieve in our project.

calc2D.js is a module with the logic that I’m trying to call. There is one interesting thing about it: the way it exposes itself to the world. It checks if there is a ‘window’ and assigns itself to it, or otherwise assigns itself to ‘GLOBAL’, so this module will work fine both in the browser and in Node.js.
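As a rough illustration of the calling convention, here is a minimal sketch in the spirit of the Edge.js hello world. It is not the sample project mentioned above: the inline script and the `a`/`b` property names are invented for the example, and it assumes the Edge.js NuGet package is installed.

```csharp
using System;
using System.Threading.Tasks;
using EdgeJs;

public static class Program
{
    public static async Task Run()
    {
        // Edge.Func compiles a Node.js function with the (input, callback) signature
        // and returns a Func<object, Task<object>>.
        var add = Edge.Func(@"
            return function (data, callback) {
                // callback(error, result) hands the result back to .NET
                callback(null, data.a + data.b);
            }
        ");

        // Anonymous objects are marshalled to JavaScript objects.
        var result = await add(new { a = 2, b = 3 });
        Console.WriteLine(result);
    }

    public static void Main()
    {
        Run().Wait();
    }
}
```

Compiling the function once and reusing the returned delegate avoids paying the compilation cost on every call.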

Few things that could go wrong

Signing

It is very likely that you are signing your assemblies, in which case you will get a compile error telling you:

Assembly generation failed — Referenced assembly ‘Edge’ does not have a strong name.

Don’t panic. You can still sign Edge.dll. There are a few ways of doing it. You can even rebuild the Edge source code with your key.snk, or, what I find easier, disassemble, rebuild and sign from the VS console using 2 commands:

ildasm /all /out=Edge.il Edge.dll

ilasm /dll /key=key.snk Edge.il

window is not defined

If you want to run the same JavaScript both on the client and on the server, you might run into issues with namespacing and global variables. Above I’ve already demonstrated how we exposed our own namespace by checking if ‘window’ is defined. If you are trying to load a lot of modules, you might want to assign them to global variables before your callback function returns. For example:

moment = require('../../moment.js');

If you still get the error ‘window is not defined’, it could be that some internal logic relies on a global ‘window’ being defined somewhere. To fix it you can put this kind of hack in front of your entry function:

GLOBAL.window = GLOBAL;

Edge.Func hangs

There is already an issue reported for this on GitHub, edge#215, which I believe is similar to what we are experiencing (if not exactly the same).

In our case we want to run JavaScript from .NET that runs in IIS. I created a wrapper around Edge that keeps the compiled function, and invoking is done whenever we need it. One interesting thing started to happen when I had the web site pointing to the bin folder. After rebuilding, the app initialization would hang on Edge.Func. If I killed the w3wp.exe process and started the app from scratch, everything worked just fine. Unfortunately I was not able to reproduce this with a console application. I suspect that this has something to do with how IIS threads run and possibly lock files, but even when I locked the node.dll, double_edge.js and edge.node files for the console app, it was not reproducible.

The issue was solved by ensuring that IIS is stopped before building the web project. This can be achieved by using the commands ‘iisreset /stop’ and ‘iisreset /start’ in the BeforeBuild and AfterBuild tags of your csproj file.

I don’t think that this is the best way to solve this, or that running Edge.js in IIS is very reliable, so I spent a bit of time reading the source code of Edge.js and debugging it. To be honest, I don’t quite understand all the things around invoking native code that make this magic happen. Because of this I’m not 100% sure that the entire solution with Edge.js will prove itself to be a decent one.

All in all, Edge.js is a really great tool. Not so long ago the things it does would have been unbelievably difficult to achieve.

I hope this post is of some help to you.

How to consume WCF service in .NET

May 27, 2013 .NET, WCF No comments

What? I must be kidding. This is not a blog for kids trying to play with .NET. Every professional .NET developer knows how to consume WCF. Don’t they? There is nothing easier than that.

Well, not that long ago I realized that the way I like to consume WCF services is not 100% correct.

What I like to do is to use “using”:

    using (var client = new SomeServiceClient())
    {
        var response = client.SomeServiceOperation(request);
        // return or do something
    }

While this looks nice, here is the thing which even kids won’t like: the Dispose method for the client is not really implemented correctly by Microsoft! It can throw an exception if there is a network problem, thereby masking other exceptions that could have happened in between. You can understand the issue better if you have a look at the WCF samples (WF_WCF_Samples\WCF\Basic\Client\UsingUsing).

MS proposes their own solution (read it here):

    var client = new SomeServiceClient();
    try
    {
        var response = client.SomeServiceOperation(request);
        // do something
        client.Close();
    }
    catch (Exception)
    {
        client.Abort();
        throw;
    }

While this is the correct way, it is too much code, especially if you add catch blocks for Communication and Timeout exceptions as recommended by MS. People on the internet propose other solutions, like wrapping the call or using extension methods.

Here is my solution, which is nothing new, just a slightly modified version of the top-voted answer on SO:

Elegant example of usage with return statements:

    return Service<ISomeServiceChannel>.Use(client =>
    {
        return client.SomeServiceOperation(request);
    });

And the solution itself:

    public static class Service<TChannel>
    {
        public static ChannelFactory<TChannel> ChannelFactory = new ChannelFactory<TChannel>("*");

        public static TReturn Use<TReturn>(Func<TChannel, TReturn> codeBlock)
        {
            var proxy = (IClientChannel)ChannelFactory.CreateChannel();
            var success = false;
            try
            {
                var result = codeBlock((TChannel)proxy);
                proxy.Close();
                success = true;
                return result;
            }
            finally
            {
                if (!success)
                {
                    proxy.Abort();
                }
            }
        }
    }

And some bitterness for the end. It doesn’t look like Microsoft is in a hurry to fix Dispose, although they should according to their own guidelines. But even knowing this, I still like “using” and will probably stick to it for smaller things. You see, my problem is that I have never experienced any inconvenience or issue because of this.

Is it the same for you, or do you have a story to share with me and others in a comment? :)

GMT vs. UTC and Time Zones in .NET

May 26, 2013 .NET, C# No comments

Now a bit more for not-so-ordinary people

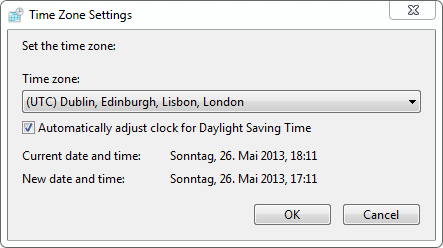

GMT does not adjust for daylight savings time. You can hear it from the horse’s mouth on this web site. Add this line of code to see the source of the problem:

    Console.WriteLine(TimeZoneInfo.FindSystemTimeZoneById("GMT Standard Time").SupportsDaylightSavingTime);

Output: True.

This is not a .NET problem, it is Windows messing up. The registry key that TimeZoneInfo uses is HKLM\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Time Zones\GMT Standard Time

You’d better stick with UTC.

Conversion itself can be done utilizing class TimeZoneInfo (same link again):

And please be careful. You need some exception handling and must be ready for non-existent or ambiguous times. For example, see how this:

would throw an exception. Reason is that there is no such time as 1:30AM on 31 March 2013 in London.
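The missing-hour case can be sketched as follows. This is a minimal example, not the original snippet; the helper name `LondonToUtc` is made up, and the Windows time zone id "GMT Standard Time" is an assumption that may need to be replaced (for example by "Europe/London") on other platforms. Calling `TimeZoneInfo.ConvertTimeToUtc` directly with the skipped time throws an `ArgumentException`, so the sketch checks `IsInvalidTime` first.

```csharp
using System;

public static class TimeZoneDemo
{
    // Converts a London wall-clock time to UTC; returns null when that local time does not exist.
    public static DateTime? LondonToUtc(DateTime localTime)
    {
        // Assumed id: "GMT Standard Time" is the Windows id for UK time.
        var london = TimeZoneInfo.FindSystemTimeZoneById("GMT Standard Time");

        if (london.IsInvalidTime(localTime))
        {
            return null; // skipped by the spring-forward transition
        }

        // Ambiguous autumn times are interpreted as standard time by ConvertTimeToUtc.
        return TimeZoneInfo.ConvertTimeToUtc(localTime, london);
    }

    public static void Main()
    {
        // 01:30 on 31 March 2013 does not exist in London: clocks jump from 01:00 to 02:00.
        Console.WriteLine(LondonToUtc(new DateTime(2013, 3, 31, 1, 30, 0)).HasValue); // False

        // A summer time converts normally (BST is one hour ahead of UTC).
        Console.WriteLine(LondonToUtc(new DateTime(2013, 7, 1, 12, 0, 0)));
    }
}
```

Use `IsAmbiguousTime` in the same way if the autumn overlap needs explicit handling rather than the default standard-time interpretation.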

How to work with Time, Dates and Time Zones in .NET

- Always persist date and time in UTC, otherwise save the offset, but never-ever local time

- Use DateTimeOffset – it is DateTime v2, otherwise at least make sure to avoid DateTime pitfalls

- Be explicit about the TimeZone used; in APIs I would also name date&time fields with the suffix “Utc” (ugly?)

- Convert to local time just before displaying

- … and check for best practices on the internet
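A small sketch of how these guidelines fit together. The date, the +03:00 offset and the `createdUtc` name are invented for illustration.

```csharp
using System;

public static class DateTimeOffsetDemo
{
    public static void Main()
    {
        // A local reading with an explicit +03:00 offset (hypothetical values).
        var local = new DateTimeOffset(2013, 5, 26, 18, 30, 0, TimeSpan.FromHours(3));

        // Persist the UTC instant; the "Utc" suffix makes the contract explicit.
        DateTime createdUtc = local.UtcDateTime;

        // Convert to local time only when displaying, here using the machine's zone.
        DateTimeOffset shown = new DateTimeOffset(createdUtc).ToLocalTime();

        Console.WriteLine(createdUtc.ToString("o")); // 2013-05-26T15:30:00.0000000Z
        Console.WriteLine(shown.ToString("o"));      // depends on the machine's time zone
    }
}
```

Because `DateTimeOffset` carries its offset, the UTC instant can always be recovered without knowing which machine produced the value.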

If it is Coordinated Universal Time, why is the acronym UTC and not CUT? When the English and the French were getting together to work out the notation, the French wanted TUC, for Temps Universel Coordonné. UTC was a compromise: it fit equally badly for each language. :)

Consuming REST services in .NET

December 17, 2012 .NET, REST, WCF 5 comments

There are a few different ways of consuming REST services in .NET:

- Plain .NET HTTP request

- WCF mechanisms

- HttpClient

- Other libraries

In this post I would like to demonstrate how you can consume REST in C#.

Before we proceed to the examples, let’s choose some very simple public REST API to consume. I settled on this one: http://timezonedb.com/api. It is a very simple service providing the current local time for a requested time zone. After registering you will get a key to access the API. In this blog post we will use “YOUR_API_KEY”.

Here is a sample response of the service we chose to consume:

    <?xml version="1.0" encoding="UTF-8"?>
    <result>
        <status>OK</status>
        <message></message>
        <countryCode>AU</countryCode>
        <zoneName>Australia/Melbourne</zoneName>
        <abbreviation>EST</abbreviation>
        <gmtOffset>39600</gmtOffset>
        <dst>1</dst>
        <timestamp>1321217345</timestamp>
    </result>

Whichever approach we take, we need some entities corresponding to the API’s response.

For the XML response use cases I prepared the following entity:

    [XmlRoot("result")]
    public class TimezoneDbInfo
    {
        [XmlElement("status")] public string Status { get; set; }
        [XmlElement("message")] public string Message { get; set; }
        [XmlElement("countryCode")] public string CountryCode { get; set; }
        [XmlElement("zoneName")] public string ZoneName { get; set; }
        [XmlElement("abbreviation")] public string Abbreviation { get; set; }
        [XmlElement("gmtOffset")] public int GmtOffset { get; set; }
        [XmlElement("dst")] public int Dst { get; set; }
        [XmlElement("timestamp")] public int Timestamp { get; set; }
    }

For the JSON use cases we will use the following:

    [DataContract(Name = "result")]
    public class TimezoneDbInfoJson
    {
        [DataMember(Name = "status")] public string Status { get; set; }
        [DataMember(Name = "message")] public string Message { get; set; }
        [DataMember(Name = "countryCode")] public string CountryCode { get; set; }
        [DataMember(Name = "zoneName")] public string ZoneName { get; set; }
        [DataMember(Name = "abbreviation")] public string Abbreviation { get; set; }
        [DataMember(Name = "gmtOffset")] public int GmtOffset { get; set; }
        [DataMember(Name = "dst")] public int Dst { get; set; }
        [DataMember(Name = "timestamp")] public int Timestamp { get; set; }
    }

So let’s start!

Option 1: Plain .NET HTTP request

Here is how you can consume REST using WebRequest:

    var urlTemplate = "http://api.timezonedb.com/?zone={0}&key={1}";
    var url = string.Format(urlTemplate, "Europe/Kiev", "YOUR_API_KEY");
    var request = WebRequest.Create(url);
    var response = request.GetResponse();
    var s = new XmlSerializer(typeof(TimezoneDbInfo));
    var timezoneDbInfo = (TimezoneDbInfo)s.Deserialize(response.GetResponseStream());

And if you need to consume the same data but in JSON format, you can come up with the following code:

    var urlTemplate = "http://api.timezonedb.com/?zone={0}&key={1}&format=json";
    var url = string.Format(urlTemplate, "Europe/Kiev", "YOUR_API_KEY");
    var webClient = new WebClient();
    byte[] downloadedRawResponse = webClient.DownloadData(url);
    var stream = new MemoryStream(downloadedRawResponse);
    var s = new DataContractJsonSerializer(typeof(TimezoneDbInfoJson));
    var timezoneDbInfo = (TimezoneDbInfoJson)s.ReadObject(stream);

Either way, using WebRequest or WebClient allows for some lower level of flexibility like controlling timeouts, headers and many other things. For example:
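One way this could look, as a minimal sketch: the timeout value, the user agent string and the `X-Correlation-Id` header are placeholders chosen for illustration, not something the timezonedb API requires. Note that `WebRequest.Create` is marked obsolete in recent .NET versions, where `HttpClient` is preferred.

```csharp
using System;
using System.IO;
using System.Net;

public static class WebRequestDemo
{
    // Builds a GET request with an explicit timeout and a few headers.
    public static HttpWebRequest BuildRequest(string url)
    {
        var request = (HttpWebRequest)WebRequest.Create(url);
        request.Method = "GET";
        request.Timeout = 5000;                            // milliseconds to wait for the response
        request.Accept = "application/xml";
        request.UserAgent = "ConsumingRestInNet-sample";   // hypothetical value
        request.Headers["X-Correlation-Id"] = Guid.NewGuid().ToString(); // hypothetical custom header
        return request;
    }

    // Sends the request and returns the raw response body.
    public static string Download(string url)
    {
        using (var response = (HttpWebResponse)BuildRequest(url).GetResponse())
        using (var reader = new StreamReader(response.GetResponseStream()))
        {
            return reader.ReadToEnd();
        }
    }
}
```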

Some of the disadvantages of the above approach are the manual building of the request URL and the manual deserialization of the response. Many of you might not like the above code, so let’s switch to the WCF approach, which looks more elegant and robust at first glance.

Option 2: WCF mechanisms

It is no secret that we can create REST services with WCF. Effectively this means that we can also create clients for other REST services. All we need is to create a proxy as if it were our own service. Since we cannot get a WSDL with metadata for the service, we have to create the proxies manually.

To start, we put some WCF configuration in place:

    <system.serviceModel>
        <bindings>
            <webHttpBinding>
                <binding name="webHttpBindingCustom" receiveTimeout="00:01:01" sendTimeout="00:01:01">
                    <security mode="None"/>
                </binding>
            </webHttpBinding>
        </bindings>
        <client>
            <endpoint address="http://api.timezonedb.com/"
                      binding="webHttpBinding"
                      bindingConfiguration="webHttpBindingCustom"
                      behaviorConfiguration="tzBehavior"
                      contract="ConsumingRestInNet.ITimezoneDb"
                      name="TimeZoneDbREST" />
        </client>
        <behaviors>
            <endpointBehaviors>
                <behavior name="tzBehavior">
                    <webHttp/>
                </behavior>
            </endpointBehaviors>
        </behaviors>
    </system.serviceModel>

We already have some of the configuration flexibility which comes with WCF. Now, let’s define the service contract:

    [ServiceContract]
    public interface ITimezoneDb
    {
        [OperationContract]
        [XmlSerializerFormat]
        [WebGet(UriTemplate = "?zone={zone}&key={key}")]
        TimezoneDbInfo GetTimezoneInfo(string zone, string key);

        [OperationContract]
        [WebGet(UriTemplate = "?zone={zone}&key={key}&format=json")]
        TimezoneDbInfoJson GetTimezoneInfoJson(string zone, string key);
    }

(In the above contract we could avoid having 2 methods by decorating our TimezoneDbInfo entity with DataContract in addition to XmlRoot and by adding a 3rd parameter.)

XML consumption:

    var channelFactory = new ChannelFactory<ITimezoneDb>("TimeZoneDbREST");
    var channel = channelFactory.CreateChannel();
    var timezoneDbInfo = channel.GetTimezoneInfo("Europe/Kiev", "YOUR_API_KEY");

JSON consumption:

    var channelFactory = new ChannelFactory<ITimezoneDb>("TimeZoneDbREST");
    var channel = channelFactory.CreateChannel();
    var timezoneDbInfo = channel.GetTimezoneInfoJson("Europe/Kiev", "YOUR_API_KEY");

You can also go further and implement your own Client class:

    public class TimezoneDbClient : ClientBase<ITimezoneDb>, ITimezoneDb
    {
        public TimezoneDbClient(string endpointConfigurationName)
            : base(endpointConfigurationName)
        {
        }

        public TimezoneDbInfo GetTimezoneInfo(string zone, string key)
        {
            return base.Channel.GetTimezoneInfo(zone, key);
        }

        public TimezoneDbInfoJson GetTimezoneInfoJson(string zone, string key)
        {
            return base.Channel.GetTimezoneInfoJson(zone, key);
        }
    }

Now consumption looks like a piece of cake:

    var client = new TimezoneDbClient("TimeZoneDbREST");
    var timezoneDbInfo = client.GetTimezoneInfoJson("Europe/Kiev", "YOUR_API_KEY");

Well, of course! All nasty code went to other classes and the config.

Option 3: HttpClient

That would be a perfect ending for a blog post. But it is not. On the one hand, WCF provides us with a great abstraction over the service; on the other hand, it is just overhead for doing simple things. Also, some people think that the coupling generated by WCF defeats the point of REST.

Here is an extract from a great answer on SO about what exactly RESTful programming is:

Really, what it’s about is using the true potential of HTTP. The protocol is oriented around verbs and resources. The two verbs in mainstream usage is GET and POST, which I think everyone will recognize. However, the HTTP standard defines several other such as PUT and DELETE. These verbs are then applied to resources.

To leverage all of this potential Microsoft has built System.Net.Http.HttpClient.

HttpClient lives in the System.Net.Http namespace, but the NuGet package is called Microsoft.Net.Http, so don’t get confused. If you add the package it will simply reference System.Net.Http in your project. The NuGet page says:

“This package provides a programming interface for modern HTTP applications. This package includes HttpClient for sending requests over HTTP, as well as HttpRequestMessage and HttpResponseMessage for processing HTTP messages.”

Here is how we can use HttpClient:

    var urlTemplate = "http://api.timezonedb.com/?zone={0}&key={1}&format=json";
    var url = string.Format(urlTemplate, "Europe/Kiev", "YOUR_API_KEY");
    var httpClient = new HttpClient();
    var streamTask = httpClient.GetStreamAsync(url);
    var s = new DataContractJsonSerializer(typeof(TimezoneDbInfoJson));
    var timezoneDbInfo = (TimezoneDbInfoJson)s.ReadObject(streamTask.Result);

The code above looks pretty much the same as when we used plain WebRequest. But HttpClient is much more advanced and better adapted for consuming REST services. For example, it has the methods GetAsync and PostAsync, and reading and sending custom headers is much simpler. Here is a great post with many examples of using HttpClient: http://www.bizcoder.com/index.php/2012/01/09/httpclient-it-lives-and-it-is-glorious/
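To give a flavour of the asynchronous API and header handling, here is a minimal sketch. The class and method names, the 10 second timeout and the `X-Correlation-Id` header are assumptions made for the example.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Threading.Tasks;

public static class HttpClientDemo
{
    // One shared instance; HttpClient is designed to be reused across requests.
    private static readonly HttpClient Client = new HttpClient { Timeout = TimeSpan.FromSeconds(10) };

    // Builds the GET request, including typed and custom headers.
    public static HttpRequestMessage BuildRequest(string zone, string apiKey)
    {
        var url = string.Format(
            "http://api.timezonedb.com/?zone={0}&key={1}&format=json",
            Uri.EscapeDataString(zone),
            Uri.EscapeDataString(apiKey));

        var request = new HttpRequestMessage(HttpMethod.Get, url);
        request.Headers.Accept.Add(new MediaTypeWithQualityHeaderValue("application/json"));
        request.Headers.Add("X-Correlation-Id", Guid.NewGuid().ToString()); // hypothetical custom header
        return request;
    }

    // Sends the request without blocking the calling thread.
    public static async Task<string> GetTimezoneJsonAsync(string zone, string apiKey)
    {
        using (var request = BuildRequest(zone, apiKey))
        using (var response = await Client.SendAsync(request))
        {
            response.EnsureSuccessStatusCode(); // throws HttpRequestException on a non-2xx status
            return await response.Content.ReadAsStringAsync();
        }
    }
}
```

A caller would simply `await HttpClientDemo.GetTimezoneJsonAsync("Europe/Kiev", "YOUR_API_KEY")` instead of blocking on `.Result`.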

Option 4: Other libraries

So, are there any other possibilities to consume REST in .NET?

Yes. There are dozens of libraries out there implemented exactly for that, though I’m sure that a lot of them cannot be considered good candidates.

[Edit 2015-Sep-2016] People often mention the RestSharp and Hammock libraries.

Links

- How to consume REST in .NET: http://stackoverflow.com/questions/8883656/how-to-consume-a-restful-service-in-net

- Examples of using HttpClient: http://www.bizcoder.com/index.php/2012/01/09/httpclient-it-lives-and-it-is-glorious/

- MSDN blog post on consuming REST with WCF: http://blogs.msdn.com/b/pedram/archive/2008/04/21/how-to-consume-rest-services-with-wcf.aspx

- TimeZoneDb API page: http://timezonedb.com/api

- Have you noticed that the response has an integer for time? http://en.wikipedia.org/wiki/Unix_timestamp

- Nuget page for Microsoft.Net.Http: http://nuget.org/packages/Microsoft.Net.Http
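Regarding the integer timestamp in the sample response, a value counted in seconds since the Unix epoch can be turned into a .NET date as sketched below. `DateTimeOffset.FromUnixTimeSeconds` requires .NET 4.6 or later. Whether the API reports the value relative to UTC or already shifted by `gmtOffset` should be checked in its documentation; the sketch only does the plain epoch conversion.

```csharp
using System;

public static class UnixTimeDemo
{
    // Interprets the value as seconds elapsed since 1970-01-01T00:00:00Z.
    public static DateTimeOffset FromUnixSeconds(long timestamp)
    {
        return DateTimeOffset.FromUnixTimeSeconds(timestamp);
    }

    public static void Main()
    {
        // Value taken from the sample response: <timestamp>1321217345</timestamp>
        Console.WriteLine(FromUnixSeconds(1321217345).ToString("o")); // 2011-11-13T20:49:05.0000000+00:00
    }
}
```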

Hope this post comes in handy for you!

Application Fabric Cache – an easy, but solid start

I would like to share some experiences of working with Microsoft AppFabric Cache for Windows Server.

AppFabricCache is a distributed cache solution from Microsoft. It has a very simple API and takes 10-20 minutes to start playing with. Having worked with it for about one month, I would say that the product itself is very good, but probably not mature enough. There are a couple of common problems and I would like to share those. But first let’s get started!

If the distributed cache concept is something new for you, don’t be scared. It is quite simple. For example, you’ve got some data in your database, and you have a web service on top of it which is frequently accessed. Under high load you would decide to run many web instances of your project to scale out. But at some point the database will become your bottleneck, so as a solution you add a caching mechanism on the back-end of those services. You want the same cached objects to be available on each of the web instances, and for that you might want to copy them to different servers for low latency and high availability. So that’s it, you came up with a distributed cache solution. Instead of writing your own, you can leverage one of the existing ones.

Install

You can easily download AppFabricCache from here. Or install it with Web Platform Installer.

Installation process is straight forward. If you installing it to just try, I wouldn’t even go for SQL server provider, but rather use XML provider and choose some local shared folder for it. (Provider is underlying persistent storage as far as I understand it.)

After installation you should get an additional PowerShell console called “Caching Administration Windows PowerShell”.

You can start your cache cluster using the “Start-CacheCluster” command.

Alternatively, you can install the AppFabric Caching Admin Tool from CodePlex, which allows you to easily do a lot of things through the UI. It shows the PowerShell output, so you can learn the commands from there as well.

Usually you would want to create a named cache. I created one called NamedCacheForBlog.

Simple Application

Let’s now create a simple application. You would need to add a couple of references: Microsoft.ApplicationServer.Caching.Core and Microsoft.ApplicationServer.Caching.Client.

Add some configuration to your app/web.config:

<section name="dataCacheClient"
         type="Microsoft.ApplicationServer.Caching.DataCacheClientSection, Microsoft.ApplicationServer.Caching.Core"
         allowLocation="true"
         allowDefinition="Everywhere"/>

<!-- and then somewhere in configuration... -->
<dataCacheClient requestTimeout="5000" channelOpenTimeout="10000" maxConnectionsToServer="20">
  <localCache isEnabled="true" sync="TimeoutBased" ttlValue="300" objectCount="10000"/>
  <hosts>
    <!--Local app fabric cache-->
    <host name="localhost" cachePort="22233"/>
    <!-- In real world it could be something like this:
    <host name="service1" cachePort="22233"/>
    <host name="service2" cachePort="22233"/>
    <host name="service3" cachePort="22233"/>
    -->
  </hosts>
  <transportProperties connectionBufferSize="131072"
                       maxBufferPoolSize="268435456"
                       maxBufferSize="134217728"
                       maxOutputDelay="2"
                       channelInitializationTimeout="60000"
                       receiveTimeout="600000"/>
</dataCacheClient>

Note that the above configuration is not the minimal one, but rather a more realistic and sensible one. If you are about to use AppFabric Cache in production, I definitely recommend that you read this MSDN page carefully.

Now you need to get a DataCache object and use it. A minimalistic, but wrong, way of doing it would be:

public DataCache GetDataCacheMinimalistic()
{
    var factory = new DataCacheFactory();
    return factory.GetCache("NamedCacheForBlog");
}

The above code reads the configuration from the config file and returns a DataCache object.

Using DataCache is extremely easy:

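object blogPostGoesToCache;
string blogPostId;

// Add stores a new item (it throws if the key already exists)
dataCache.Add(blogPostId, blogPostGoesToCache);

var blogPostFromCache = dataCache.Get(blogPostId);

object updatedBlogPost;

// Put adds the item or replaces an existing one
dataCache.Put(blogPostId, updatedBlogPost);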

DataCache Wrapper/Utility

In the real world you would probably write a wrapper over DataCache or create a utility class. There are a couple of reasons for this. First of all, creating a DataCacheFactory instance is very expensive, so it is better to keep a single one. Another obvious reason is that you get much more flexibility over what to do in case of failures, and in general. This is very important: it turns out that AppFabricCache is not extremely stable and can be easily impacted. One of the workarounds is to write a “re-try” mechanism, so that if your wrapping method fails you retry (immediately or after X ms).

Here is how I would write initialization code:

private DataCacheFactory _dataCacheFactory;
private DataCache _dataCache;

private DataCache DataCache
{
    get
    {
        if (_dataCache == null)
        {
            InitDataCache();
        }
        return _dataCache;
    }
    set
    {
        _dataCache = value;
    }
}

private bool InitDataCache()
{
    try
    {
        // We try to avoid creating many DataCacheFactory-ies
        if (_dataCacheFactory == null)
        {
            // Disable tracing to avoid informational/verbose messages
            DataCacheClientLogManager.ChangeLogLevel(TraceLevel.Off);

            // Use configuration from the application configuration file
            _dataCacheFactory = new DataCacheFactory();
        }

        DataCache = _dataCacheFactory.GetCache("NamedCacheForBlog");
        return true;
    }
    catch (DataCacheException)
    {
        _dataCache = null;
        throw;
    }
}

The DataCache property is not exposed; instead it is used in wrapping methods:

public void Put(string key, object value, TimeSpan ttl)
{
    try
    {
        DataCache.Put(key, value, ttl);
    }
    catch (DataCacheException ex)
    {
        ReTryDataCacheOperation(() => DataCache.Put(key, value, ttl), ex);
    }
}

ReTryDataCacheOperation performs the retry logic I mentioned before:

private object ReTryDataCacheOperation(Func<object> dataCacheOperation, DataCacheException prevException)
{
    try
    {
        // We add a retry, as it may happen
        // that AppFabric cache is temporarily unavailable.
        // See: http://msdn.microsoft.com/en-us/library/ff637716.aspx
        // Maybe adding more checks like: prevException.ErrorCode == DataCacheErrorCode.RetryLater

        // This ensures that once we access prop DataCache, new client will be generated
        _dataCache = null;

        Thread.Sleep(100);

        var result = dataCacheOperation.Invoke();

        // We can add some logging here, notifying that retry succeeded
        return result;
    }
    catch (DataCacheException)
    {
        _dataCache = null;
        throw;
    }
}

You can go further and improve retry logic to allow for many retries and different intervals between retries and then put all that stuff into configuration.
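A minimal sketch of such an improved retry could look like the code below. It is not the code I run in production: RetryHelper and its Execute method are hypothetical names, and the example deliberately uses InvalidOperationException so that it runs without AppFabric. In the wrapper above you would use DataCacheException instead, reset _dataCache before each retry, and read the intervals from configuration.

```csharp
using System;
using System.Threading;

// Hypothetical helper: retries an operation with a configurable
// number of attempts and a separate delay before each retry.
public static class RetryHelper
{
    // Runs the operation once, then retries up to delays.Length times
    // when TException is thrown. The last failure is rethrown.
    public static T Execute<T, TException>(Func<T> operation, params TimeSpan[] delays)
        where TException : Exception
    {
        for (int attempt = 0; ; attempt++)
        {
            try
            {
                return operation();
            }
            catch (TException)
            {
                // No retries left: let the caller see the exception.
                if (attempt >= delays.Length)
                {
                    throw;
                }

                // Wait for the interval configured for this retry.
                Thread.Sleep(delays[attempt]);
            }
        }
    }
}

public static class Program
{
    public static void Main()
    {
        int calls = 0;

        // The operation fails twice and succeeds on the third call.
        string result = RetryHelper.Execute<string, InvalidOperationException>(
            () =>
            {
                calls++;
                if (calls < 3)
                {
                    throw new InvalidOperationException("temporarily unavailable");
                }
                return "cached value";
            },
            TimeSpan.FromMilliseconds(10),
            TimeSpan.FromMilliseconds(20));

        Console.WriteLine(result + " after " + calls + " calls");
        // prints "cached value after 3 calls"
    }
}
```

The number of delays passed in defines the number of retries, so a single setting (for example a comma-separated list of milliseconds) is enough to configure both.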

RetryLater

So, why the hell is all this retry logic needed?

Well, when you open the MSDN page for AppFabric Common Exceptions, you can be sure RetryLater is the most common one. To know what exactly happened, you need to check the exception’s ErrorCode and SubStatus.

So far I’ve seen these sub-errors of RetryLater:

There was a contention on the store. – This one is quite frequent. It could happen when someone is making a concurrent mess with the cache. The problem is that any client can affect the whole cluster.

The connection was terminated, possibly due to server or network problems or serialized Object size is greater than MaxBufferSize on server. Result of the request is unknown. – This usually has nothing to do with object size. Even if the configuration is correct and you save small objects, you can still get this error. A retry mechanism is good for this one.

One or more specified cache servers are unavailable, which could be caused by busy network or servers. – I have no idea how frequent this one could be, but it can happen.

No specific SubStatus. – Amazing one!

Conclusion

AppFabricCache is a very nice distributed cache solution from Microsoft. It has a lot of features that are not described here, as you can read about them elsewhere, say here. But to go live with it you should be ready for AppFabricCache not being extremely stable & reliable, so you had better put some retry mechanisms in place.

To be honest, if I were the one deciding whether to use this distributed cache, I would go for another one. But who knows, maybe the others are not much better… I’ve never tried other distributed caches.

Links

Thank you, and hope this is of some help.

Autofac: resolve dependencies later in code & pass your parameters

March 22, 2012 .NET, Autofac, C#, IoC 3 comments

builder
    .Register(b => new F())
    .As<IF>();

builder
    .Register(b => new E())
    .As<IE>();

builder
    .Register(b => new D1())
    .Named<ID>(SomeD1Name);

builder
    .Register(b => new D2())
    .Named<ID>(SomeD2Name);

builder
    .Register((b, prms) => new C(b.Resolve<IE>(), prms.TypedAs<IF>()))
    .Named<IC>(CName);

builder
    .Register((b, prms) => new B(b.Resolve<IF>(), b.ResolveNamed<IC>(CName, prms)))
    .As<IB>();

// Registered without a name, because Service resolves IA via b.Resolve<IA>()
builder
    .Register(b => new A(b.Resolve<IE>(),
                         b.ResolveNamed<ID>(SomeD1Name),
                         b.ResolveNamed<ID>(SomeD2Name)))
    .As<IA>();

builder
    .Register(b => new Service(b.Resolve<IComponentContext>(),
                               b.Resolve<IA>(), b.Resolve<IB>()))
    .As<IService>();

And that’s it.