February 12, 2024 Leadership, Opinion No comments

Quick thoughts on Conflict Resolution Styles

Hopefully a short post. As always opinions are mine.

I always figured dealing with conflict was something you just…did. You know, no need for fancy theories or structured approaches. Recently I was asked what my conflict resolution style is and how it matches to style of other people. So I did a bit of research, I mean, in a lazy way:

Andriy: > Give brief list of conflict resolution styles as bullet points:

Gemini: > Absolutely! Here’s a brief list of the most common conflict resolution styles:

- Accommodating: Prioritizing the needs of others over your own and seeking to maintain harmony.

- Avoiding: Sidestepping or postponing dealing with the conflict.

- Compromising: Finding a middle ground; each party gives up something to find a solution.

- Competing: Focused on winning and advancing your own needs above all else.

- Collaborating: Working together to find a solution that fully satisfies everyone’s needs as much as possible.

Andriy: > What’s the origin of these conflict resolution styles?

Gemini: > The primary origin of the framework for these conflict resolution styles comes from the work of Kenneth Thomas and Ralph Kilmann in the 1970s.

Ok. That kind of makes sense and I have a hunch I have read about those at some point in my life. Now I have an exercise for you, which I’m doing myself along the way as well. I promise it will get more interesting:

- Determine your own conflict resolution style by either taking a quiz online or by just thinking through those. I’m kind of combination of Collaborating, Compromizing, and a bit of Competing with complete intolerance to Avoiding style.

- Now try to determine the styles of people you have to deal with, say collegues at work or people in your personal life. For myself, I created kind of a map of few of the stakeholders at work.

- Now try to think of pros and cons of each of the styles and how they might impact you, other person, or what would be the best situation to use a style. Yeah, internet has lots of these, and I generated some stuff below, but it is more interesting to think about this while having context of yourself or others you know. Think of maybe 2-3 people, not to overwhelm yourself.

- Now the fun part: mix and match yourself to these people and recall how your interactions where with them. For myself I found that:

- We spend to much time in “analysis-paralysis” when another person is collaborating as much as I am.

- There is a lot more compromizing on my side when dealing with a competing or avoiding person, which is probably detremental for me and leaves a bit of resentment.

- Things get solved very quickly with an accomodating person, but then do I lose some of my credit with them just because they haven’t spoken?

- … and many more…

Andriy: > Add one pro, one con, and one recommendation under each conflict resolution style bullet point.

Gemini: > Absolutely! Here’s the list expanded with a pro, con, and recommendation for each style:

- Accommodating:

- Pro: Maintains relationships and reduces tension.

- Con: Can lead to neglecting your own needs.

- Recommendation: Use strategically in low-stakes situations or when preserving harmony is crucial.

- Avoiding:

- Pro: Allows time to cool down and gather your thoughts.

- Con: Doesn’t actually resolve the conflict, which can build resentment.

- Recommendation: Employ only as a temporary measure, ensuring you return to address the issue later.

- Compromising:

- Pro: Can lead to finding a quick and somewhat fair solution.

- Con: May limit creativity and not fully address everyone’s concerns.

- Recommendation: Best when finding a temporary fix or when issues are of moderate importance.

- Competing:

- Pro: Can be useful when immediate, decisive action is needed.

- Con: Damages relationships and may not lead to the best long-term solution.

- Recommendation: Reserve for emergencies or when power dynamics are heavily imbalanced.

- Collaborating:

- Pro: Leads to solutions that genuinely satisfy everyone involved.

- Con: Can be time-consuming and requires everyone to be fully invested.

- Recommendation: Aim for this style whenever possible, especially for high-stakes conflicts or building deep trust.

This self-invented exercise helped me realize I need to be more mindful of the situation when handling conflict. I should explore styles that feel less natural, like strategically using avoidance when emotions are high – as long as I remember to revisit the issue later or that occasionally I need to compromize less in favor of achieveing better quality.

What are your thoughts?

On Mentorship

October 4, 2023 Leadership, Opinion, Personal 2 comments

Ever watched a martial arts movie? There is always a Sensei behind the main character – usually a much more experienced, older, and wiser person willing to share their knowledge. Career, life, or software engineering isn’t exactly the same (maybe metaphorically?), but in the very same way, it is always a great idea to be inspired by and learn things from someone who has already been through the journey you are embarking on.

I don’t think I can give justice to the topic of mentorship holistically (go search the internet for that or ask Bard to generate bullet points for you), though I can share my own experiences. In part this is what mentorship is all about, see if there is anything for you below:

In my early career back in Ukraine I was lucky to get direct exposure to our clients from the USA as this helped with my English but I was also lucky to work with talented and highly energetic technical leader and a Microsoft MVP (most-valuable-professional). He was a lot of inspiration for me and probably was the reason for starting this blog and effectively jump-starting my career (tech blogging was very popular back in ~2010). Moving through my career I met many engineers who were highly skilled, had diverse technical backgrounds (think software for Ferrary F1, or for British military, or high frequency trading, or nuclear energy, etc). So I tried to challenge myself to learn from them. Very specifically, one of the annual goals I had in 2016 was “Learn 12 simple skills from other people. To achieve this goal I will first identify 12 people from the community and those surrounding me and chose some characteristic I admire.” I might not have been exceptionally successful in achieving that particular goal but at least I learned to appreciate that others know cool things I don’t. Once I moved to work for big tech (Amazon & now Google) I normally tried to maintain “official” mentorship relationship(s) via internal mentorship programs/platforms.

My experience with “official” mentors so far has been mixed. As always, it all depends on the person and how two of us connect, but in all of the cases mentors have always provided something of a value to me. It was never-ever time wasted. At the very least, a mentor will help you get an outsider perspective on you situation and answer your questions from different perspective other than your manager might. There is no guarantee that you will agree to what they say or that their recommendations will be ideally applicable to you but it is your job to work through those and figure out the best you can get out additional point of view.

I worked with few of my mentors on my promotions. It is always great to get a mentor one or two levels higher as they have a lot more understanding of what it takes to get promoted to the next level and they might actually be part of promo review process for that level. In fact, I feel like I got a lot of information from one of my mentors I otherwise wouldn’t be able to get from my manager.

Other than promotions, the other area I worked on with my mentors was understanding of my next career moves. For instance, one good advise I got was to always get most out of any situation before making any radical decisions. At the same time, I also got “never moving fish is a dead fish” and other types of advise, all of which have had their impact on me.

Third area of engagmeent with my mentors was in building vision/strategy and presenting it to leadership. A very specific advise (and, maybe, a bit weird) was to actually visualize that I’m that leader I’m going to present the strategy to and try to understand what would that leader pay attention to when listening to the presentation. I was actually asked to role play during mentorship discussion, which felt really weird, but I wouldn’t have tried this if I didn’t have this “weird” mentor.

I know that I’ve been a bit of an inspiration for some engineers in the past and this realization was a great source of energy for myself as well as a motivation to self-improve further. Regretfully I’ve lost a lot of drive to be an example or an inspiration to others. I’ve also find it more difficult to be inspired by someone. Don’t take me wrong, not that there are not enough great people around (if anything, my collegues are one of the best and truly remarkable people), it is probably just me getting older and grumpy. In a way I miss those times but on a higher note writing this blog post helped me recall good times I had and motivate myself to be a more active individual in this regard. I currently have a mentee and keep in touch with few former collegues which whom I exchange career advise.

Looking back at my past mentorship relationships I can confidently say that they helped me. Go ahead and get yourself a mentor and if you have the opportunity, don’t hesitate and take a moment to teach someone something you know, chances are you might benefit in the process as well.

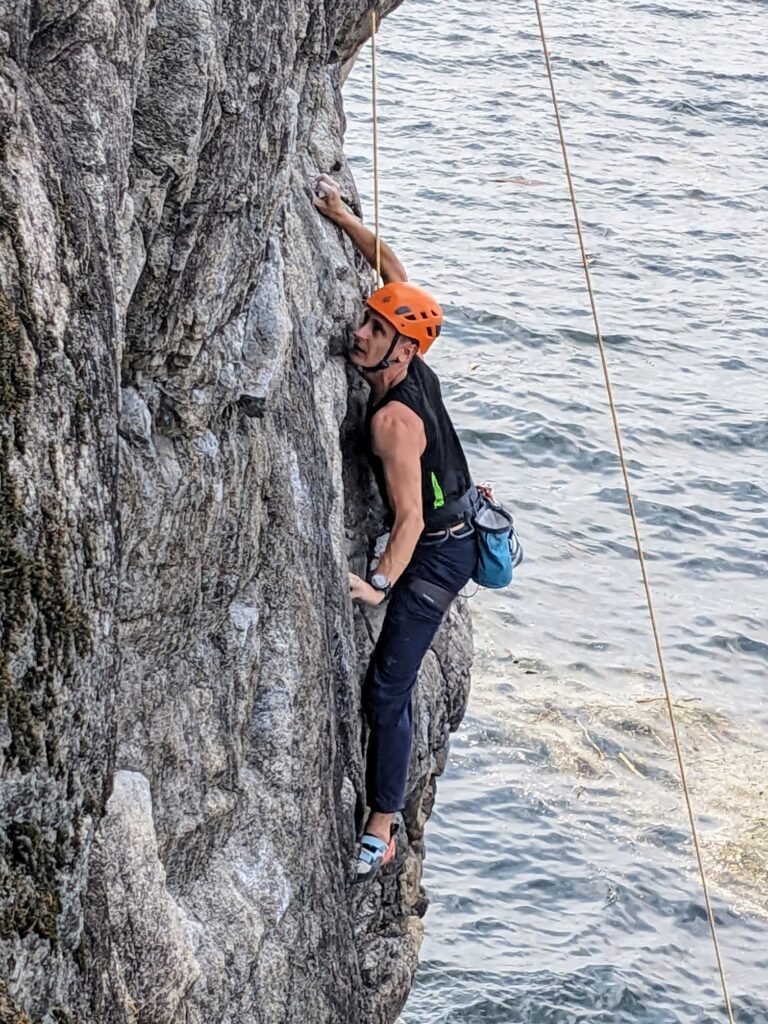

Rock Climbing as a way to cope

August 31, 2023 Personal No comments

Sport, but especially rock climbing, turned out to be my way to cope with things that are or were happening: war in my home country, COVID, work stress, personal experiences. Climbing showed me how much I have missed out growing up and but also that there is still hope and room to have a healthy life all despite approaching midlife milestone (and its crisis I guess).

Last few years I’ve been doing more sports than ever before in my life and my body is probably in the best physical shape than ever before and is getting better. In high school, university and early in my career I saw nothing other than my computer or studies. I didn’t take any care of myself nor did I consider it something I had to do. I didn’t give this enough consideration. I started to build my self-esteem via achievements at work and neglected many of other aspects of life, which I regret now. Since then some things have changed. In 2016 I started to run, not much but over time it became quite regular. In 2021 I haven’t missed a single day to a sports activity. As I write this, my usual week includes 1-3 days of rock climbing, 1-2 kickboxing days, 1-2 strength trainings, 1-2 runs and occassional one-off activity (hiking, biking, skiiing, etc…) and I would do more if I could get more days in a week.

Occassionally I get asked why I like climbing and I don’t normally have a good anwer to shoot back right away. It kind of does seem somewhat silly for a fully grown adult to go and try to get up the wall. It may seem absolutely purposeless to climb a steep rock only to get back down later on. But if you think what it takes you could see that one has to go through a process of challanging themselves. They have to fight fear of falling, they have to apply strength up to their limit, and they have to be aware of their body (much like in meditation). So, in a way, climbing can be seen as a spiritual experience. I think over time climbing does more to your mind than to you body.

Climbing is quite social activity. You would normally see a bunch of people cheering someone from their group trying to send a bouldering problem. If you are new, at the beginning you might feel intimidated by all the strong people climbing crazy stuff but you quickly realize that the environment is very inclusive. I don’t know the exact reason, probably, because we, climbers, realize that everyone came here to push themselves over own comfort zone and we want to celebrate as if it was part of our own achievement. Or, maybe, it is our ancestory genes from back when we climbed trees.

I personally maintained a strong friendship over climbing, have met multiple new people, and used climbing as means to invite non-climber friends to hang-out with, and, maybe, learned something new about life from these people. Today I’m going to drive a couple hours to climb with 5 strangers I have never met before.

I’m looking forward to what climbing holds for me. I envision sending harder problems, meeting more of new people, being in places hardly reachable by many, but most of all building self-esteem, chasing that sense of achievement, and feeling of being alive.

Everyone finds their own way, what’s your way?

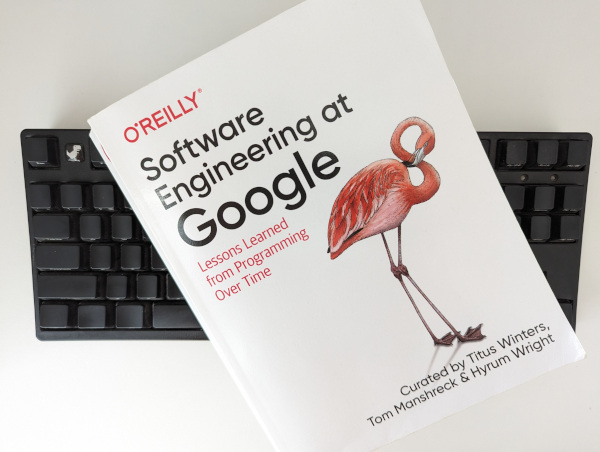

Book Review: Software Engineering at Google

July 30, 2023 Book Reviews No comments

Disclaimer: I works for Google. Opinions expressed in this post are my own and do not represent opinions of my employer.

NOTE: Most of this was written in Sep 2020 (month after joining Google). I’m not too sure why I didn’t publish it back then.

I picked the book called “Software Engineering at Google” by a recommendation by one of my colleagues. Because the book is written for outsiders for me, as a Noogler, it bridges the gap between my prior external experience and knowledge and internals of Google that I start experiencing.

Some criticism? Or course. Personally I think the book is way too long for the content it contains. Style changes with each chapter. Some are written like they were someone’s life story, some are written like publications, yet some are written like promotions of a software product. I’m not telling someone did a bad job, it is just a natural outcome of multiple people writing a book.

Do I recommend the book? Yes! Though, you would gain most from reading it provided you: 1) are a software engineer AND 2) (started working at Google OR are interested in Google OR are working for another big tech company). I would say to hold reading the book till later if you have little to none industry experience and if are not working for a big software company. I think it would be hard to relate to many problems described in the book without prior experience. For example, the book would talk about source control systems and compares between distributed and centralized ones and provides an explanation why a monorepo works well at Google.

The book is not a software engineering manual, although it covers many software engineering topics. Things are mostly covered from Google’s point of view. This means that some problems might not be applicable to you and even for those problems that are applicable, solutions might not be feasible or reasonable. Most software companies hardly ever need to solve for scale of software engineers working for them, but at Google there are 10th of thousandths of SWEs, so this becomes important.

The book’s thesis is that Software Engineering extends over programming to include maintenance of software solutions.

The below is not so much book review, but rather me taking notes. Some of the below are quotes. Some of them are small comments and thoughts. Skim through it to get an idea of what’s in the book.

Thesis

- Question “What is the expected life span of your code?”

- Things change and what was efficient might no longer be.

- Scale Everything, including human time, CPU, Storage

- Hyrum’s Law: https://www.hyrumslaw.com/With a sufficient number of users of an API, it does not matter what you promise in the contract: all observable behaviors of your system will be depended on my somebody.

- Example with ordering inside of a hashmap

- “Churn Rule” – expertise scales better, this is probably why EngProd exists.

- Beyounce Rule – cover with CI what you like. For example, compiler upgrades.

- Things are cheaper at the start of a project.

- Decisions have to be made even if data is not there.

- “Jevons Paradox” – “Jevons paradox occurs when technological progress or government policy increases the efficiency with which a resource is used (reducing the amount necessary for any one use), but the rate of consumption of that resource rises due to increasing demand.” – wiki

- Time vs. scale. Small changes; no forking

- Figure with timeline of the developer workflow

- Google is data driven company, but by data we also mean, evidence, precedent, and argument.

- Leaders that admit mistakes are more respected.

Culture

- Genius is a myth. It exists because of our inherent insecurities. Many technology celebrities made there achievements by working with others. Don’t hide in your cave.

- Hiding is considered harmful (example with bike gear shifter).

- Bus factor

- Three Pillars of social interaction: humility, respect, trust.

- Techniques: rephrase critizism, fail fast and often

- Blameless

- Politicians never admit mistakes but they are on a battlefield

- Googley: thrives in ambiguity, values feedback, challenges status quo, puts the user first, cares about the team, does the right thing

Knowledge Sharing

- Challenges: lack of psychological safety, information islands, duplication, skew, and fragmentation, single point of failure, all-or-nothing, parroting (copy-paste without understanding), haunted graveyards

- Documenting knowledge scales better than one-to-many sharing by an expert, but an expert might be better at distilling all of the knowledge to particular use-case

- Psychological safety is the most important part of an effective team. Everyone should be comfortable asking questions. Personal comment: I find myself guilty of not always providing safe environment for my colleagues in the past I would use “well-actually” and frequent interuptions.

- Biggest mistake for beginners is not to ask questions immediately and continuing to struggle.

- 1-to-1 doesn’t always scale so community and chat groups can be used instead.

- To provide techtalks and classes few conditions are needed to justify them: complicated enough topic, stable, teachers needed to answer questions,

- Documentation is vital part of keeping and sharing knowledge

- “No Jerks”. Google nurchures culture of respect.

- Google provides incentives to share knowledge (for example peer bonus, kudos, etc).

- go/ links and codelabs

- Newsletters have their nieche of delivering information

- code readability process. I personally was always looking for way to make code look more uniformly throughout projects I worked on. And here we are this is implemented on the scale of entire large company. trade-off of increased short-term code-review latency for the long-term payoffs of higher quality code

Engineering for Equity

- Unconscious bias is always present and big companies have to acknowledge its presence and work towards ensuring equality.

- Google strives to build for everyone. AI based solutions are still behind and still include bias.

- It is ok to challenge existing processes to ensure greater equity.

How to Lead a Team

A really great chapter.

- Roles are Google are: The Engineering Manager (people manager), The Tech Lead (tech aspects), The Tech Lead Manager (mix)

- Technical people often avoid managerial roles as they are afraid to spend less time coding

- “Above all, resist the urge to manage” – Steve Vinter

- “Traditional managers worry about how to get things done, whereas great managers worry about things get done (and trust their team to figure out how to do it).”

- Antipaterns:

- Hire pushovers

- Ignore Low Performers

- Ignore Human Issues

- Be Everyone’s Friend (people may feel pressure to artificially reciprocate gestures of friendship).

- Compromise the Hiring Bar

- Treat your Team Like Children

- Patterns:

- Lose the Ego – gain team’s trust by apologizing when you make mistakes, not pretending to know everything, and not migromanaging.

- Be a Zen Manager – being skeptical might be something to tone down as you transition to a manager. Being calm is very important as it spreads. Ask questions.

- Be a Catalyst – build consensus.

- Remove Roadblocks – In many cases, knowing the right person can be more valuable than knowing how to solve a particular problem.

- Be a Teacher and a Mentor

- Set Clear Goals – entire team has to agree on some direction

- Be Honest – Google advises against “compliment sandwich” as it might not deliver the message. Example with analogy of stopping trains.

- Track happiness – A good way is to always ask at the end of 1:1 “What do you need?”

- Delegate, but get your hands dirty

- Don’t wait, make waves when needed

- Shield your team from chaos

- Let the team know when they do well

- People Are Like Plants – very interesting analogy

- Intrinsic vs. Extrinsic Motivation

Leading at Scale

- Three “always”: Always be Deciding, Always be Leaving, Always be Scaling

- Always Be Deciding is about trade-offs (plane story); best decision at the moment; decide and then iterate

- Always be Leaving is about not being single point of failure, avoiding bus factors

- Product is a solution to a problem. Make teams work on problems not products that can be short lived.

- The cycle of success: analysis, struggle, traction, reward ( which is a new problem)

- Important vs. Urgent – gtd, dedicated time scheduling, tracking system

- Top leaders should learn to deliberately drop some balls and conserve their energy. It is good enough to focus on 20% of things.

Measuring Engineering Productivity

- Google decided that having dedicated team of experts focusing on eng productivity is great idea. Entire research was even dedicated to study productivity.

- Case-study: readability

- Google measures using Goals/Signals/Metrics

- Signal is the way in which we will know we have achieved our goal

- Metric is exactly how we measure signals

- Actions Google takes are “tool driven” – tools have to support new things instead of just telling engineers to change anything

- For qualitative metrics consider surveys

Style Guides and Rules

- Google has style guildes which are more than just about style but more about rules

- Rules have to be: optimized for the reader of the code, have weight and not include unnecessary details, and have to be consistent.

- Example when rules can change is when this is at scale and for very long periods of time. For example, C++ rules, with an example of starting to use std::unique_ptr

Code Review

- This covers typical code review flow. Snapshots and LGTM.

- There are three aspects: any other engineer’s LGTM, owners LGTM, readability LGTM. Three can come from one person

- Code Review benefits: code correctness, code is comprehensible, consistency, ownership promotion, knowledge sharing, historical record.

- Code reviews help with validation for people who suffer from imposter syndrome.

- There is a big emphasis on writing small changes

- Write good change descriptions

- Adding more reviewers leads to diminishing returns

- Code review buckets: greenfield, behavioural changes, bug fixes, refactorings and large scale changes

Documentation

- Most documentation comes in form on code comments

- Documentation scales over time

- Arguably documentation is like code, so it should be treated like code (policies, source control, reviews, issue tracker)

- Wiki-style documentation didn’t work out because there were no clear owners, therefore documents became obsolete, duplicates appeared. This grew into number one complain.

- Documentation has to be audience oriented.

- Types of documents: reference documentation (what is code doing), design, tutorials (clear to reader), conceptual docs, landing pages (only links to other pages).

- Good document should answer: WHO (audience), WHAT (purpose), WHEN (date), WHERE (location of doc, implicit), WHY (what will it help with after reading).

- “CAP theorem” of document: Completeness, Accuracy, Clarity.

- Technical writes are helpful documenting on the boundaries between teams/APIs but are not great asset for team specific documentation.

Testing Overview

- What does it mean for a test to be small? It can be measured in two dimensions: size (can be defined by where they run: process, machine, wherever) and scope.

- Small – single process (no db, no IO, preferably single-thread); Medium – single machine, no network calls, Large – no restrictions. This can be enforced by attributing tests and frameworks that run tests.

- Scope is how much code is evaluated by a test.

- Flakiness can be avoided by multiple runs – this is trading CPU time for engineering time.

- It is discouraged to have control flows in tests (if statements, etc).

- Testing is a cultural norm at Google.

Unit Testing

- Aim for 80% UT and 20%broader tests.

- Tests have to be maintainable.

- Big emphasis on testing only via public API.

- Test behaviors and not methods.

- Jasmine is a great example of behavior driven testing.

- A little of duplication in tests is OK; not just DRY. Follow DAMP over DRY.

Test Doubles

- Seams – way to make code testable; effectively DI.

- Mocking: fake (similar to real impl); stub – specify return values; interaction testing (assert was called)

- Google’s first choice for tests is to use real implementations. Arguable this classical testing approach is more scalable.

- Fakes at Google are written and have to have tests of their own.

- It is fair to ask owners of an API to create fakes.

- Interaction testing is not preferred though warranted if no fake exists or we want to count number of calls (state won’t change).

Larger Testing

- General structure of a large test: obtain system under test (SUT), seed necessary test data, perform actions using the SUT, verify behaviors

- Larger Tests are necessary as UT cannot cover the scope of complicated interactions

- Larger tests could be of different types: performance, load, stress testing; deployment configuration testing; exploratory testing; UAT; A/B diff testing; probes and canary; disaster recovery testing; user evaluation; etc.

Deprecation

- Code is a liability, not an asset.

- Migrating to new systems is expensive. In-place refactorings and incremental deprecations can keep systems running while making it easier to deliver value to users.

- Deprecations: advisory and compulsory.

- For compulsory deprecation is it always best to have a team of experts responsible for this.

Version Control and Branch management

- Google got stuck with centralized VCS. The book argues that there really are no distributed VCS, as all of them would require a single source of truth

- I used to work with both centralized and distributed systems. What google does is even a bit more different as branching is not encouraged at all

- Sometimes it is ok to have two source of truth. For instance, Red Hat and Google can have their own versions of Linux

- Arguably if UT, IntegrationTests, CI and other policies are in place dev branches are not needed

- Other disadvantages of dev branching is difficulty of testing and isolating problems after merges

- “One Version” – for every in our repository, there must be only one version of that dependency to choose

- Hopefully in future OSS will arrive at virtual mono-repo with “One-Version” policy which will hugely simply OSS development over next 10-20 yearst

Static Analysis

- Static Analysis has to be scalable and incremental running analysis only on relevant parts

Dependency Management

- Managing dependencies is hard. Big companies come up with their unique solutions.

- A dependency is a contract so if you provide one this will cost you.

- SemVer is a standard in versioning dependencies but is only an attempt to ensure compatibility.

- Compatibility can also be ensured using test harnesses.

Large Scale Changes

- LSC might be semi-unique to Google and other super-large companies but at this scale changing int32 to int64 everywhere in code base has to have special processes in place.

Continuous Integration

- Everyone does CI these days and CI systems become ever more complex.

- Actionable feedback from CI is critical.

Continious Delivery

- “Velocity is a team sport” of reliably and incrementally delivering new value fast.

- Ways to achive this are: evaluating changes in isolation; shipping only what gets used; shifting left (more more things to earlier in the pipeline); ship early and often.

Compute as a Service

- Container based architecture adapted to a distributed and managed environment with common infrastracture is a solution to handling scale.

2021 Recap / 2022 Plan

January 1, 2022 YearPlanReport 12 comments

2021

2021 was the year we had high hopes for. It was also the year when so many of these hopes have been crushed. Other than COVID, just here in BC, Canada where I live, we had the record high temperatures burning entire towns to ashes and floods of the century cutting us from the rest of Canada. I believe the year like that raised awareness of so many critical issues of the contemporary world, like climate change, politicization, mental health, etc, but most of all it forced us to reevaluate life priorities and think twice about what is important in our lives.

In the year 2021 I spent more time with my family, focused on health and tried to live a good life despite things getting hard mentally at times.

2021 In Numbers

I worked out 365 days last year, including 148 rock climbing gym visits (my climbing stuff), 78 runs, 87 hours of weight training, and many other activies, averaging 55 hours a month; set some new personal records like 20 pullups and sub-50min 10k, briefly reached 56 VO2max and 68kg of weight but retreated on the last two; read 20+ books, many of which are technical but also included all 7 Harry Potter books I read with my daughter. Learned some C++ and subjectively did well at work. I straightened my teeth, tried real rock climbing and pole calisthenics. Invested 20% more money in 2021 than I did in 2020 and my early retierement aspirations at age 41 are now more realistic than ever. I failed to quit coffee but successfully reduced it consumption. I also failed to write enough on this blog and failed to inspire people as I might have done years ago.

Complete list of all items from 2021

- Read all 7 Harry Potter books to my 7y old daughter. That’s 199 chapters making it roughly 4 chapters (65p) per week.

- DONE. I read few first books myself and then my daughter (8) get so much interested she started to read on her own.

- Teach my daughter basics of programming. Snake game seems to be a good candidate for a year’s project goal.

- We had two sessions with my daugher where we created a simple “food” for the snake and are drawing grid. I consider this a failure as this isn’t what I imagined.

- Teach my 4y old son basics of skiing. Let’s see how many times I can take him out skiing.

- Took him 3 times in total (second season – yeap he tries skies at age 3 for the first time). He alreay learned to ski “pizza”-style. I guess I’m better teacher this time. I used ski-wedges (rubber string to keep front of the skies together) and I’m not making the mistake of holding him so that he learns to keep the balance right from the beginning. I should probably classify this as failure, even there was some progress.

- Travel outside of Canada and have a boring beach vacation. Fingers crossed.

- :(

- Have two camping road-trips (long weekends count, but a week+ would be awesome).

- DONE. One weekend long and another almost a week long, though with hotels in between.

- Set new personal running records (considerations 1K, 1Mile, 5K, 10K and Half-Marathon). Consider running a Marathon (that’s not a healthy distance imo, but people keep asking me if I ran it and I cannot say yes… ogh…).

- DONE. 79 runs. Set new 5K – 22:43, 10K – 49:49 PR. I will consider this done.

- Improve VO2Max to 60 ml/(kg·min) from the current 54. This means I will have to specifically and properly train. Just running 100 times won’t cut it. In combination with my other goals of gaining muscle weight this goal becomes even more difficult.

- Max achieved 56. It seem to be unrealistic to achieve 60 for me due to biological limitations and my age or it would take a lot more dedication for me, which I’m not ready to do.

- Try out at least one other sport activity. Considerations are: mountain biking, martial arts, archery, whatever, anything counts as long as I try it out.

- DONE. Tried real rock climbing, kickboxing, and pole-calisthenics, bought a bike and biked super-easy mountain trails.

- Learn free handstands and snap some cool pics for the next year’s post.

- Failed. I made some progress, like I can do low control handstand for few seconds, but that’s not what I wanted.

- Strength train for 100 hours inclining towards climbing specifics (finger strength; pull-ups; core). Set new personal pullup (19+) and pushup (102+) records.

- DONE. Way over 100 hours. I calculated > 83hours of separate strength training time, plus there would be maybe another 50 or more of those coming from my climbing activies. New pull-ups PR 20. Didn’t do the pushups.

- Gain 5kg of pure muscles (70kg, BMI of 22, fat 10-13%) and post a shirtless comparing pic.

- Max achieved was 68. I’m 10.3% body fat. I will delay posting a comparison pic to the next year, though if you are super curious there is some arms stuff in strava.

- See how far I can get in climbing. Target sending one V8 (7B Font, HEX-5/6) bouldering problem. If this doesn’t say you anything, it is a super-ambitious goal as I can currently do V3s, some V4s. Normally V8 requires years of training and lots of strength. Likely to fail. Shoot for the starts.

- DONE (kind-off): rock-climbed 148 times. Sent a couple of HEX-5 (V6-V8, probably these were 7A and not 7B).

- Continue sleeping full 8.5 hours.

- DONE. Based on garmin data I kept sleeping 8.5+ hours for entire year with few exceptions.

- Straighten my teeth to a perfect arch.

- DONE. My teeth are straight now. I’m still wearing last sets of aligners and then will need to wear retainers for some time, but the work is done! Smily pictures are coming next year.

- Further reduce coffee. Cold turkey is a real option this year.

- Had 2 months with 0 coffee intake (Jan, Feb), and many months with few coffees. By the end of the year I’m basically back to daily sonsumption. This means I’m a coffee addict, but a controlled one, or is this not a defintion of an addict? hm..

- Build a strong disciplined morning routine, that includes among other things waking within 5 minutes of alarm going off, drinking full glass of water, short meditation and a full breakfast.

- Big failure. No real discipline, though I do have some kind of routine. I stopped having an alarm. Yeap. I do not have any alarms set and this makes me feel great.

- Write 10 blog posts, of which at least 5 are of a technical content.

- Failure.

- Listen to/read 10 books, of which at least 5 are technical/career ones. (Harry Potter ones don’t count).

- Books done: “Time Smart” “Effective C++” “Effective Modern C++” (partially) “Change by Design” “The Manager’s Path” “The Body Keeps the Score” “Fifty Inventions That Shaped The Modern Economy” “Sapiens” “The Art of Thinking Clearly” “The great mental models: Vol 2” “The Ride of a Lifetime” “The Pragmatic Programmer” (relisten) “Finding Flow” and few other funny ones I’m not brave enough to post here

- Solve 100 leet code problems (min 10 hard; min 50 medium). Got to keep myself in a good shape, right?

- Solved 44 problems. Not much. (total lifetime solved 565)

- Deliberately work on loosing my eastern European accent for at least 10 hours. A course with a real teacher would be great.

- Failed. Was close to having scheduled classes.

- Create a monetizable content and earn 1$. This can be a course on programming, an e-book, a problem solving tutorial, anything really.

- Failed. Lack of specificity and dedication to this one.

- Become fully proficient in one more programming laguage.

- DONE: Gained C/C++ readability badge at work. It kind of means Google now trusts me to write C++ code :) I also finally fill more-or-less comfortable in this language.

- Learn Linux. I use Linux every day at work, but I’m limited to basic things and have never deliberately tried to understand this operating system or become proficient in it.

- Failed. Linux is now my main non-work operating system. Though still not what I meant.

- Learn to complete all work within strickly 8 working hours. Less of stretching of time, less of inefficiencies, less of distruction, more discipline. Efficiency. Time is our most precious resource.

- Mar: March was probably one of the most efficient months for me at Google so far. Feb: I’m tracking my time super-precisely and can tell there is close to 0 of time-wasters (news, social, etc) during work hours. Oct: This goes “oke-ish”. Calling this a success even I don’t feel I work enough. My employer seems to be happy, though.

- Better track money. I’ve been tracking money since my first paychecks, but in the year 2020 I lost my routines so need to reestablish them.

- Mar: on track. Feb: Back on track. Rewamped my “balance and net worth” sheets. Oct: Not sharing too much but I have a hold on money tracking and investing. It is just maybe I can make more if, say, I worked in US or something.

- Make or lose 1000$ in a risky investment. Failures are learning experiences.

- DONE: I have some crypto ETFs that jumped like hell and I didn’t budge. Jumps were X*1000 so I’m covered for this goal. I have some confidence in risk tollerance now. And no, I do not believe in all this crypto hype.

- Invest 20% more money in 2021 than in 2020.

- DONE.

- Create a painting and/or a drawing. I already know some basics. Just want to do something to really like.

- Failed. I just didn’t feel like doing this for entire year. This has something to do with the feelings.

Learning from 2021

Last year taught me that seemingly “impossible” things are possible as long as you are truly focused on them (by the example of having a physical activity every day), and at the same time many possible things are left undone because they lacked dedication, proper tracking and habit forming aspects to it or were just wishful thinking.

2022 Plan

I’ve been writing new year resolutions since 2010 and my success rate has increased progressively.

Process

Quantify. Wishful untracked resolutions don’t work. Instead of creating a resolution like “improve financial situation”, which is a good idea, try to understand why you want it, what you are willing to sacrifice to achieve it and then crunch the numbers and compile a list of quantifiable, prioritized and trackable results you want to see by the end of the year (ie. “max out pension plan”, “buy asset G”, “invest % into Y”, “get salary hike by doing X”).

Track your list. I am thankful to my friends who kept tabs on me (AnAustrian :) ) because this allowed me to be accountable. I propose a monthly routine when you go back to your plan, update the status and re-review the validity of the goals. I personally do this via google sheets.

Another modification I’ve made to my resolutions is that I set success criteria which usually is 50% of the list, and believe me it is enough to keep myself sweating for the entire year.

Plan

For the year 2022 I have a list of 70+ items. I know, I know, having too many items doesn’t sound like a smart idea, but there is merit to it. The list I created is categorized into health, family, career, life quality, etc and then each of the items was given a priority on a scale 0-4 (0 = highest; 4 = lowest). I ended up with just 10 items I think are truly important. Success criteria is to complete highest priority items but have a possibility to replace some of them with multiple lower priority items. (More exactly, I want to collect 10 points, where P0=1point, P1=1/2, P2=1/3, P3=1/4, P4=1/5)

This year my private goals are mixed with career and all other goals into a single spreadsheet, but in order to share the list I masked some of the items that I’m not comfortable sharing. The below list is not set in stone, but it will be my go-to list any time of the year, any time of the day.

1 | Prio | Area | Key Result |

|---|---|---|---|

2 | 0 | Career | 7B9CBA2A-A29F-48AA-A633-10C4C58CBDB3 |

3 | 0 | Discipline | Get up out of bed immediately after waking up no daydreaming in bed (move that to night) |

4 | 0 | Health | Maintain 8.5 sleep time |

5 | 0 | Health | Train every day for 30+ minutes (for once a week rest day stretching and rolling is ok) |

6 | 0 | Health | Eat balanced 3 times a day with 1-2 times protein shake in between |

7 | 0 | Socioeconomic | 10168043-A42B-464F-AB08-5A255EBE7B30 |

8 | 0 | Relationships | Train not to dwelve on the past 2D763C64-D1C4-4C38-9EF9-E6CF2D6846E7 |

9 | 0 | Family | Travel to Ukraine |

10 | 0 | Family | Increase family time by doing one fun activity or playing board games at least 2 times a month |

11 | 0 | Intellect | Distill best remembered ideas from all the books I have ever read and compile them into a memo |

12 | 1 | Climbing | Have more fun by fixing mental constraints: fear of judjement, not trying enough, grade fixing |

13 | 1 | Career | 3E54870A-D001-4C17-964F-4EA0BD8E4394 |

14 | 1 | Career | Read paper book “Designing Data-Intensive Applications” aloud while listening to its audio in order to improve accent |

15 | 1 | Career | 6EA04241-F23B-4AAF-B217-D734486B05F3 |

16 | 1 | Career | 3E8721D5-7220-4A2D-ADC2-0CEA82940C26 |

17 | 1 | Career | 14508A37-2C76-40B5-934D-DC13BAB9317B 2023 |

18 | 1 | Climbing | Can do all HEX-4, and approx half HEX-5 (consistency) |

19 | 1 | Family | Sign up son for martial arts classes |

20 | 1 | Family | Teach daughter basics of financial literacy |

21 | 1 | Family | Ensure daughter enjoys Taekwoondo or change her activity |

22 | 1 | Family | Add 1-2 short term activity for daughter (camps) |

23 | 1 | Family | Teach kids some programming in a fun way |

24 | 1 | Fun | Try out 5 new activities or experiences |

25 | 1 | Health | Engage in “positive thinking” sessions as part of the bed hygene procedure and tell spouse something nice |

26 | 1 | Health | Lower coffein intake to 1/2 shot Jan-Mar |

27 | 1 | Health | Maintain body fat of <12% |

28 | 1 | Health | 150+ min of active minutes a week (running avg. 2 times a week) |

29 | 1 | Intellect | Read 5 new books |

30 | 1 | Socioeconomic | 27CBCCC7-ECAC-48E8-84AC-70C80D45FFD2 |

31 | 1 | Socioeconomic | 71AE4608-B769-47D7-8B22-5D5A93118E4D |

32 | 1 | Relationships | 158573E6-8598-49E1-9949-F9D29DA5B5FA |

33 | 1 | Self-esteem | Be able to hold handstand for 5 sec |

34 | 1 | Travel | Travel to Namibia |

35 | 1 | Self-esteem | Whiten teeth |

36 | 2 | Discipline | Lower coffein intake to meetings with friends only Apr-Jun |

37 | 2 | Career | Solve 100 medium leet code problems by May |

38 | 2 | Career | Find 1-2 best system design youtube channels and compile 10 common system design approaches post |

39 | 2 | Climbing | Climb outdoors 5+ times |

40 | 2 | Discipline | Quit coffee Jul-Dec |

41 | 2 | Discipline | Maintain near 0 usage of Facebook |

42 | 2 | Discipline | Instagram only enough to suffice climbing goal and opened only when making a new post |

43 | 2 | Socioeconomic | Max out RRSP |

44 | 2 | Socioeconomic | Max out TFSA for myself and spouse |

45 | 2 | Relationships | Get to know at least 5 other climbers (name + casual chat) |

46 | 2 | Relationships | Cheer up 1+ person during each climbing session (“nice job”, “well done”, “sick drop knee”, etc) |

47 | 2 | Relationships | Train not wasting time in fantazies (deferred to night only) |

48 | 2 | Relationships | 0F2AD72D-13C1-4014-A96B-2DFA5C907ADF |

49 | 2 | Relationships | C5CEA567-DAB5-4466-8CF3-3BCC4E423120 |

50 | 2 | Self-esteem | Lower body fat to 8%-10% by Jun |

51 | 2 | Self-esteem | Increase chest chircumference to 100cm from 95cm |

52 | 2 | Self-esteem | Add body weight to suffice curcumference goals but not more than 72kg |

53 | 2 | Self-esteem | Be able to hold front lever for 5 sec |

54 | 2 | Travel | Travel to Austria |

55 | 3 | Career | Fill-in L5->L6 gap analysis by end of Jan |

56 | 3 | Climbing | Climb at least one 5.12+ top rope in a gym |

57 | 3 | Climbing | Read “Training for Climbing” (TFC) completely |

58 | 3 | Climbing | Incorporate TFC intermediate course into training |

59 | 3 | Climbing | Get lead-climb certificate |

60 | 3 | Discipline | Improve sleep preparation hygene by 0 screen time ~1 hour before bed and everyday foam rolling |

61 | 3 | Family | Go skiing together as a family once |

62 | 3 | Relationships | 686B09E4-9238-4E91-AF08-31E27C5B4A83 |

63 | 3 | Self-esteem | Increase shoulder circumference to 120cm from 112cm |

64 | 3 | Self-esteem | Maintain waist circumference of 78cm |

65 | 3 | Travel | Camping trip in Canada |

66 | 3 | Travel | Short US trip |

67 | 4 | Climbing | Create climbing specific instagram account and reach 1K followers |

68 | 4 | Fun | Fun does not mandatory involve planning like this, so do more fun for the sake of fun |

69 | 4 | Self-esteem | Achieve semi-visual abs |

70 | 4 | Self-esteem | Improve posture by going to massage therapy and doing antagonistic exercises |

71 | 4 | Career | Write 5 blog posts |

72 | 4 | Self-esteem | Try out a new haircut |

The Why

Now the above maniacally long list doesn’t tell the whole story. “The why” is often much more important than what or how. Each of the categories has its intent and it is a fairly simple long term directional objective:

| Area | Objective |

| Health | Maintain and improve health in order to keep the energy and live longer |

| Family | Work towards healthier family dynamics and setup kids for success later in life |

| Career | Prepare advancement in career or other meaningful career change for better compensation and self-realization |

| Socioeconomic | Improve socioeconomic situation to improve quality of life |

| Climbing | Climb harder to gain sense of sport achievement and improve life satisfaction |

| Discipline | Improve discipline in time management and funnel extra time into personal development |

| Intellect | Increase knowlege for greater leverage in life |

| Travel | Travel more in order to improve satisfaction with life |

| Relationships | Improve relationships with friends to minimize regrets later in life |

| Fun | Have more fun for the sake of fun |

| Self-esteem | Improve physical attractiveness and physique to feel better |

Happy New Year

May the new 2022 year be the year of great achievements for you!

Share your goals and let’s keep each other accountable.

2020 Recap / 2021 Plan

January 1, 2021 Success, YearPlanReport 13 comments

My life in 2020 continued to be boring. With notable career changes (SDE3 promotion at Amazon; move to Google) the year was mostly uneventful. It was not an easy ride emotionally though externally for the most part I lived the life of a fisherman from the “Business and the Fisherman” parable.

I’ve been making new year resolutions and publishing them online since 2010 and learning the hard way what you might have guessed: I failed miserably so many times I should have already given up on them :) but no, this is yet another one. Oh… and wait… this is the first time I succeeded in my new year’s resolution.

If you are skeptical of the new year resolutions I accept your point of view as resolutions don’t generally work (90% of people fail) and I admire you if you manage to succeed in your aspirations despite not having a plan. Someone said that you have to be inspired or desperate in life. If you are neither at the moment, creating a plan and following through might be the best option until your enlightenment.

2020 Recap

TL;DR: promoted to SDE3 at Amazon; moved to Google; ran, skied, climbed much more than planned; still on a gradual trajectory of healthy and early retirement; didn’t read or learn as much as I wanted; traveled locally.

My last year’s resolution was to complete 12 of all 24 of the items on the list I had. Succeeded in 14 of them and if I add up percentages completion goes all the way to 94%. Here is the list:

- [Edit 29Jan2020] Spend more quality time with kids in 2020 than in 2019 as measured by wife’s opinion

- Although I usually don’t put private and family goals in my plan I had to include this one for my fellow Austrian friend ;). This one is subjective, but accordingly to my wife I can count this one as completed. Coronavirus made me stay at home so a side effect of WFH I’m spending much more time with my kids. Luckily they usually stay away from participating in my work meetings.

- Travel

a distant country (candidate New Zealand, other places count)Changed thsi to be a long 2+ weeks roadtrip.- We had an amazing 2 week camping road-trip to the north of British Columbia crossing into Yukon and coming back via Alberta. We managed to see the Northern Lights, wild bizons, 17 bears in one day, glacies, waterfalls and other things of beauty. We even went hunting for dinosaur fossils. More details in my wife’s blog post over here.

- Write 24 blog posts, of which at least 12 are of a technical content

- I wrote 13 blog posts. Only 5 out of these posts are of technical content. Counting this item as half completed.

- Listen to/read 24 books

-

Read 16 books:

- Why We Sleep

- Algorithms for Interviews

- Competitive Programming Guide

- Freakonomics

- Atomic Habits (once again)

- Switch- How to change things when change is hard

- The Phoenix Project

- Feeling Good-The New Mood Therapy

- Limitless-Upgrade your brain

- Software Engineering At Google

- The Alchemist: A Fable About Following Your Dream

- Measure What Matters: How Google, Bono, and the Gates Foundation Rock the World with OKRs

- The Psychology of Money: Timeless Lessons on Wealth, Greed, and Happiness

- Shoe Dog: A Memoir by the Creator of Nike

- Super Human

- Clean Architecture

-

5 books are still in progress and I’m likely to abandon reading some of them:

- Finite and Infinite Games

- Deep Learning with Python

- Effective C++

- The Go Programming Language

- ***

-

Read 16 books:

- Run 52 times

and take part in a race (candidate VanSunRun on 19April)- I ran 110 times out of those planned 52. Does it mean 211% completion then?

- New PRs: Half 1:58:07, 10K 50:50, 1K 3:47

- Total run distance: 792K

- Ski 12 days (night skiing after work counts)

- Went skiing 17 times. Could tell that I improved my skills a bit.

- Improve swimming by going 10 times to swimming pool (consider a course)

- This one is a complete failure. I postponed signing up for classes and then COVID hit and then I didn’t bother. Not counting lake swimming here as that is not what I meant when creating this plan.

- Learn to walk on hands

- Although I failed to learn walking on hands and even handstands I made good progress towards it.

- Can do controlled headstands (see the picture above).

- Work out 104 hours

- I’ve done 69 hours or workouts. These workouts are mostly weight lifting and calisthenics including occasional stretching sessions. This does not include running or rock climbing.

- Gain another 7kg of pure muscles (70kg, BMI of 22, fat 11-15%)

- Max measured weight was 65.8, but more realistically I weight 65. This once again proved that it is difficult for me to gain weight.

- Although I am confident these +2KG are musles as I can effectively see musles I have never seen on my body :)

- Drive a racing car

- I drove race adapted 8 cylinder mustang on a racing track in Mission. link

- Go indoor climbing 10+ times, learn to do 5.10+

YDS and V4+ Hueco (USA)

- This is similar to running in a way that I overachieved this goal. Unfortunately I don’t even know how many times I went rock climbing but rough approximation brings me to ~50 times. In fact right now I’m a member to two different climbing gym networks with access to 6 locations.

- Completed 5.11 and one V5.

- Some adrenaline rush thingy (skydive, bungee, paraglide, etc)

- This is one of those things I didn’t get to. I didn’t really want to bungee jump again or skydive as I’ve done those in the past and couldn’t find anything that would suit. Probably another jump would have done :(

- Learn a programming language (tiny project counts ~3 blog posts)

- Probably need to count this as a failure. At my current job I’ve already written code in Python, Go, C++ – all are languages I’m not comfortable with, but I don’t think I can say I’ve “learned” any of them.

- (re)-introduce myself to Machine Learning (basics + TensorFlow)

- I am not doing anything related to ML and therefore it is hard to push myself to learn anything in this area. Failure.

- Sleep 8-9 hours, but learn to get up with damn alarm instantly (15 sec). This one might be the most challenging as this

is a horrible habit of mine (hitting “snooze” for 2 hours)

- Definitely succeeded in getting enough sleep but still failing miserably in waking right after the alarm.

- Limit read-only social media activities to 2 hours a week (scrolling Facebook/Twitter/Instagram/LinkedIn, excl. posting

and messaging people) as to produce instead of consuming

- Although I almost never open Facebook or Twitter, my instagram is still a lot of timewaste. In any case I probably should count this a success as I managed to controll how much time I spend here. For instance, those apps are blocked during my working hours and outside of working ours time is limited by digital wellbeing by Google.

- Meaningful work related change

- Solve 100 leet code problems

- Solved 67% of problems. Here is a link to my profile.

- Visit a tech conference and/or some tech meetup(s) (2+ counts)

- Attended one internal virtual Google conference.

- Quit regular money wasters (going out for lunch, 5$ coffee, etc, max 1 a week or 52 in a year)

- Due to COVID I’m not going out for lunches or coffees. I do not smoke or drink alcohol. On average I probably had 1.0-1.5 coffee outside per week. I think my new regular money wasters are useless things bought at Amazon.

- Reduce coffee (max 1 per day); best if I could go cold turkey

- March started drinking coffee every day at home again (damn due to COVID-19). In August maybe had 1-2 coffees. 1 in September. Few in October. Some in November. To generalize I would say I definitely reduced my coffee consumption, though I didn’t manage to go cold turkey.

- Increase focus at work (track screen time and distractions)

- I’ve done few things to achieve this: 1) limited my social apps on phone; 2) separated physically work laptop and access from private laptop and access; 3) organized my home office. More here.

- Invest 20% more money in 2020 than in 2019

- Portion of my RSU started vested in Jan putting me ahead for that month; Feb just regular contributions; March invested lump sum of 10% of my base salary into what was a crazy bear market (call me crazy) maybe recession and it turned out to be great timing. Didn’t invest in April other then regular retirement contributions. As of September maxed out my RRSP and added some money to my investments. Plus I got my next portion of Amazon shares which I didn’t sell. Not selling my GOOG stock.

2021 Plan

TL;DR: more quality time with family; more sport; more health; more of professional focus and learning; some travel; less reading; more passive money.

So what’s on the cards for the year 2021? I already have a good life so it is reasonable to maintain the things I learned to do, slowly improve the things I would like to. The complete list is below:

Updated: 26-Dec-2021

- Read all 7 Harry Potter books to my 7y old daughter. That’s 199 chapters making it roughly 4 chapters (65p) per week.

- DONE

- Teach my daughter basics of programming. Snake game seems to be a good candidate for a year’s project goal.

- We had two sessions with my daugher where we created a simple “food” for the snake and are drawing grid.

- Teach my 4y old son basics of skiing. Let’s see how many times I can take him out skiing.

- Mar: Took him 3 times in total. He alreay learned to ski “pizza”-style. I guess I’m better teacher this time. I’m using ski-wedges (rubber string to keep front of the skies together) and I’m not making the mistake of holding him so that he learns to keep the balance right from the beginning. I should probably classify this as failure, even there was some progress?

- Travel outside of Canada and have a boring beach vacation. Fingers crossed.

- Not happened.

- Have two camping road-trips (long weekends count, but a week+ would be awesome).

- DONE. One weekend long and another almost a week long, though with hotels in between.

- Set new personal running records (considerations 1K, 1Mile, 5K, 10K and Half-Marathon). Consider running a Marathon (that’s not a healthy distance imo, but people keep asking me if I ran it and I cannot say yes… ogh…).

- 79 runs. Set new 5K – 22:43, 10K – 49:49 PR. I will consider this done.

- Improve VO2Max to 60 ml/(kg·min) from the current 54. This means I will have to specifically and properly train. Just running 100 times won’t cut it. In combination with my other goals of gaining muscle weight this goal becomes even more difficult.

- Max achieved 56. (It seem to be unrealistic to achieve 60 or it would take a lot more dedication for me).

- Try out at least one other sport activity. Considerations are: mountain biking, martial arts, archery, whatever, anything counts as long as I try it out.

- DONE. Tried real rock climbing, kickboxing, and pole-thingy, bought a bike and biked super-easy mountain trails.

- Learn free handstands and snap some cool pics for the next year’s post.

- Failed. I made some progress – few low control seconds.

- Strength train for 100 hours inclining towards climbing specifics (finger strength; pull-ups; core). Set new personal pullup (19+) and pushup (102+) records.

- Way over 100 hours. I calculated > 83hours of separate strength training time, plus there would be maybe another 50 or more of those coming from my climbing activies. New pull-ups PR 20. Didn’t do the pushups.

- Gain 5kg of pure muscles (70kg, BMI of 22, fat 10-13%) and post a shirtless comparing pic.

- Max achieved was 68. I’m 10.3% body fat. I will delay posting a comparison pic to the next year.

- See how far I can get in climbing. Target sending one V8 (7B Font, HEX-5/6) bouldering problem. If this doesn’t say you anything, it is a super-ambitious goal as I can currently do V3s, some V4s. Normally V8 requires years of training and lots of strength. Likely to fail. Shoot for the starts.

- DONE (kind-off): rock-climbed 148 times. Sent a couple of HEX-5 (V6-V8, probably these were 7A and not 7B).

- Continue sleeping full 8.5 hours.

- DONE (based on garmin data I kept sleeping 8.5+ hours for entire year with few exceptions).

- Straighten my teeth to a perfect arch :)

- DONE. My teeth are straight now. I’m still wearing last sets of aligners and then will need to wear retainers for some time, but the work is done!

- Further reduce coffee. Cold turkey is a real option this year.

- Had 2 months with 0 coffee intake (Jan, Feb), and many months with few coffees. By the end of the year I’m basically back to daily sonsumption. This means I’m a coffee addict.

- Build a strong disciplined morning routine, that includes among other things waking within 5 minutes of alarm going off, drinking full glass of water, short meditation and a full breakfast.

- No real discipline, though I do have some kind of routine. I stopped having an alarm. Yeap. I do not have any alarms set and this makes me feel great.

- Write 10 blog posts, of which at least 5 are of a technical content.

- 1. That’s a failure.

- Listen to/read 10 books, of which at least 5 are technical/career ones. (Harry Potter ones don’t count).

- Books done: “Time Smart” “Effective C++” “Effective Modern C++” (partially) “Change by Design” “The Manager’s Path” “The Body Keeps the Score” “Fifty Inventions That Shaped The Modern Economy” “Sapiens” “The Art of Thinking Clearly” “The great mental models: Vol 2” “The Ride of a Lifetime” “The Pragmatic Programmer” (relisten) “Finding Flow”

- Solve 100 leet code problems (min 10 hard; min 50 medium). Got to keep myself in a good shape, right?

- Solved 44 problems. Not much. (total lifetime solved 565)

- Deliberately work on loosing my eastern European accent for at least 10 hours. A course with a real teacher would be great.

- Failed.

- Create a monetizable content and earn 1$. This can be a course on programming, an e-book, a problem solving tutorial, anything really.

- Failed.

- Become fully proficient in one more programming laguage.

- DONE: Gained C/C++ readability badge at work. It kind of means Google now trusts me to write C++ code :) I also finally fill more-or-less comfortable in this language.

- Learn Linux. I use Linux every day at work, but I’m limited to basic things and have never deliberately tried to understand this operating system or become proficient in it.

- Failed. Linux is now my main non-work operating system. Though still not what I meant.

- Learn to complete all work within strickly 8 working hours. Less of stretching of time, less of inefficiencies, less of distruction, more discipline. Efficiency. Time is our most precious resource.

- Mar: March was probably one of the most efficient months for me at Google so far. Feb: I’m tracking my time super-precisely and can tell there is close to 0 of time-wasters (news, social, etc) during work hours. Oct: This goes “oke-ish”. Calling this a success even I don’t feel I work enough. My employer seems to be happy, though.

- Better track money. I’ve been tracking money since my first paychecks, but in the year 2020 I lost my routines so need to reestablish them.

- Mar: on track. Feb: Back on track. Rewamped my “balance and net worth” sheets. Oct: Not sharing too much but I have a hold on money tracking and investing. It is just maybe I can make more if, say, I worked in US or something.

- Make or lose 1000$ in a risky investment. Failures are learning experiences.

- DONE: I have some crypto ETFs that jumped like hell and I didn’t budge. Jumps were X*1000 so I’m covered for this goal. I have some confidence in risk tollerance now. And no, I do not believe in all this crypto hype.

- Invest 20% more money in 2021 than in 2020.

- DONE.

- Create a painting and/or a drawing. I already know some basics. Just want to do something to really like.

- Failed. I just didn’t feel like doing this for entire year. There is still tiny chance before 1 Jan I do this.

Same as last year, I will consider succeeding if I complete at least half of the items on this list.

To make sure I succeed this year again I will be tracking my progress each month in a spreadsheet (already prepared it) and posting occasional comments below this post, much like I did last year. I’m also tracking a couple personal goals I’m not too comfortable posting publicly.

Happy New Year!

Dear reader, what’s your plan for the year 2021? Do you have one? Share your plan and keep on! If any of you wants to run occasional challenges with me, just ping me. I’ve ran them in the past with few folks and though we failed to stick the outcome was noticeable progress for participants.

Happy New Year!

My Home Office Setup

October 18, 2020 Opinion, Personal, RandomThoughts 11 comments

First things first, this is not a “how-to” post explaining you the “one right way” of setting up your home office. I am a Software Engineer (you are likely to be one as well) and like yours, my home office setup is probably somewhere in between coding on sofa and science-fiction command center. In this post I’m just sharing what I’ve done to improve my home office situation and what I’m thinking might be worth to improve it even further. I will be glad to hear any feedback or advice you might have on this.

Location

Reducing noises, keeping kids from fighting for your immediate and undivided attention, avoiding kitchen temptations, dog barking or whatever else is applicable to you, might be one of the most important factors for your home office, yet it might be the one you have the least control of. I do not pretend to have a batcave for work from home either. In fact, I started working on sofa when COVID first hit in March and then quickly realized this wasn’t a good idea. Step by step I improved my WFH situation and currently have something reminiscent of an office. I’m renting a relatively large apartment, though it obviously wasn’t designed with a workspace in mind, so I’m using a bedroom. Yeah, a bedroom! I have rearranged the furniture in a way logically separating the room into office and non-office halves. In this post, I am going to show pictures of my office half. (Not too eager to post photos of my bed, especially when it is not made up :)).

Desk and equipment arrangement

I’m in favor of simplicity and minimalism when it comes to my desk. Even when I was going to the office I would usually keep it empty from anything non-essential. So here is my arrangement:

On the left you see my work laptop with two cables. First cable goes to a docking station serving as a power source and connecting the laptop to 300 Mbps internet (seems to be enough ¯\_(ツ)_/¯) as well as to external camera which you can see on the top of the laptop (I will touch on this again later on). Docking station has enough ports to add more peripherals. Second cable connects my work laptop to KVM. If you see, there is a small button in front of the keyboard – that’s KVM switch allowing me to share 34″ monitor and keyboard between laptops on the left and right. Laptop on the right is my personal laptop. I intentionally don’t login with my personal account on my work laptop for multiple reasons (will touch on this later as well). There is also a desk lamp and desk power source located in the right corner mostly for charging devices (2 USB-C, mini-USB, Garmin). I might keep few different sets of headphones on the desk, but usually prefer to keep them in the back of my “office”.

List of Equipment and details

Except of work laptop and few small things, all equipment is something I bought with my own money before I knew my new employer is so generous to give 1000$ to its employees to improve WFH situation [public info]. Below is the list of the equipment I’m using with links to Amazon for reference only – those are not referral links (I’m not trying to make money on your clicks, and this post is not written for that purpose).

Monitor: Dell UltraSharp U3415W 34-Inch Curved LED-Lit Monitor (Older Model) It is a nice wide curved 34″ monitor I can easily use as if I had two with tools like Spectacle on mac or built-in shortcuts on chomebook. The only regret I have is I should have probably went for newer version with more aggressive curve and USB-C support.

Monitor Stand: AmazonBasics Premium Single Monitor Stand This allows me to bring the monitor forward/backward, up/down or change the angle. This is so much better than having monitor placed stationary. It also allows to free up some space on the desk.

Keyboard: Filco Majestouch 2 Ninja Cherry MX Blue Switch 87 Key Mechanical Keyboard Black This is compact super-loud faceless mechanical keyboard. I love this keyboard and find it to be beautiful. It might be too loud to use if you are sharing space with others, so keep this in mind.

Mouse: Logitech® MX Anywhere 2S Wireless Mouse A nice precise mouse, though I often don’t like how it behaves on Chomebook. Not sure if this is an issue with the mouse itself or ChromeOS (and yes I tried to play with the settings).

Logitech MX Master 3. This is a full size and precise mouse. I am glad I replaced the old small one with this one.

Docking station: Dell WD15 Monitor Dock 4K with 130W Adapter, USB-C While docking station is definitely something to have I do NOT recommend this particular one as it is not fully compatible with newer Macs. My monitor flickers when I connect it via this docking station. Here is DELL’s support page on this. I’m using this station for power, internet connection, web-camera, and any further peripherals I will need to add to my work laptop.

KVM: Sabrent 2-Port USB Type-C KVM Switch with 60 Watt Power Delivery After much of extensive search I gave up looking for a docking station that has TWO usb-c power delivery outputs and also works as a KVM. This seems to be non-existent device. So instead, I bought myself a dedicated KVM. It is simple and it works. Power delivery is not always detected on my Pixelbook, so I skip PD option and use another cable to power my personal laptop on the right.

Power: BESTEK Power Strip with USB for charging devices, powering lamp, and occasional plug-in of things at top of my desk. I also use conventional power extender for low-power things under my desk and directly plug into the wall outlet more power demanding devices (monitor, docking station).

Desk Lamp: Swing Arm Lamp, LED Desk Lamp with Clamp, 9W Eye-Care Dimmable Light, Timer, Memory, 6 Color Modes, JolyJoy Modern Architect Table Lamp for Task Study Reading Working Home Dorm Office (Black) Super happy with this lamp – it can be bent in so many ways. I can recommend this one.

Vertical Laptop Stand: OMOTON Adjustable Something to hold my personal laptop vertically and save even more space. Anyway it is so much more pleasant to work on a large monitor (something I’m doing right now).

External Web Camera: Logitech C920S HD Pro Webcam with Privacy Shutter External webcam has its advantages of providing higher quality image with active focus. Unfortunately I probably need to add some swinging arm or a holder to it as right now I’m placing it exactly where laptop’s camera is and don’t think that my coworkers have even noticed a difference. I’m yet to test if mic is better on it in comparison to built-in one. Some people use dedicated external mics and their sound quality is noticeably better.

Backup power and internet connection: I also have two 20K+ mAh external power banks (one of which is enough to jump-start my car) and 20Gb of mobile internet plan. Based on my 4 hour testing this should take me through a full day of power outage. I know this is not ideal, but I’m also not running a data-center at home nor I have a detached house to buy myself generator or a similar solution. In fact, I do not remember unplanned power outage in my building since I live here and a planned one only lasted 4 hours.

Cables: Well… Everyone is ought to have some wire spaghetti under their desk. Right? I didn’t do anything special about the cables. Just tied some of them together so I don’t hit them with my feet.

Desk and chair: Those are cheap IKEA ones. I’m considering to change the legs of my desk to make it height-adjustable standing desk. As of chair, I don’t think mine is great for 8+ hours of sitting in it.

As of 2021 replaced the chair with Autonomous ErgoChairPro.

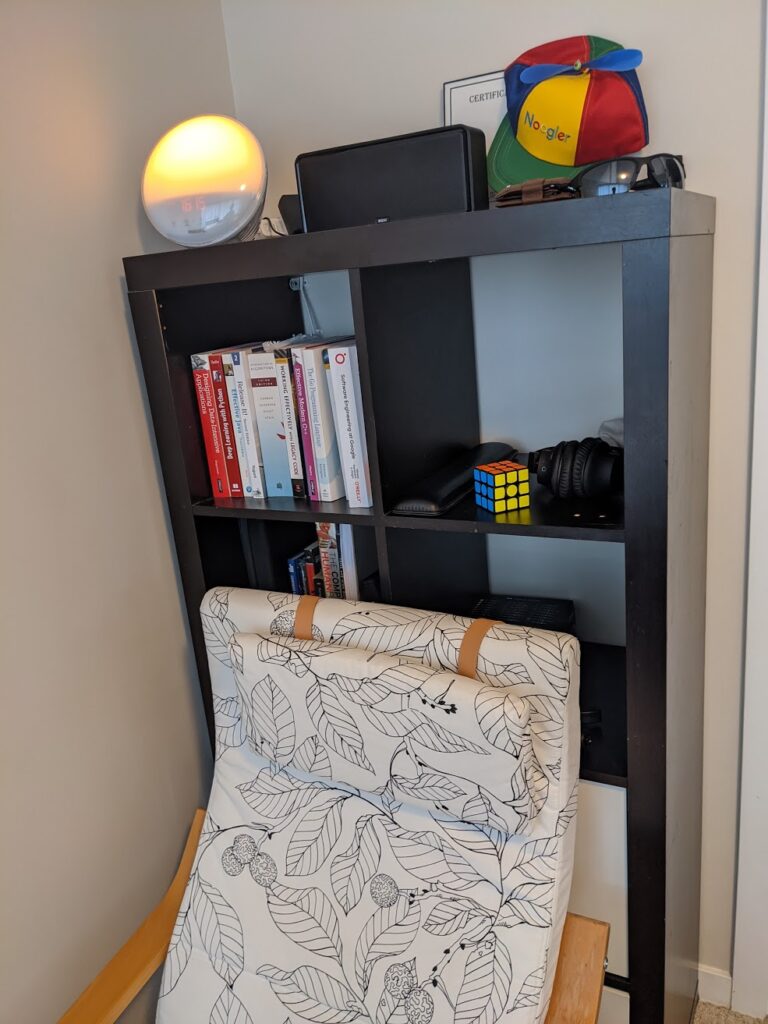

Ok, back of my “office”

To complete the picture of my office here is what I have just behind myself:

It is another IKEA chair for mental breaks and a bookshelf which has few books but mostly serves for accessing things I would use time-to-time, like keyboard palm-rest, other headphones, cables and few toys (no, I didn’t reassemble and then assemble the Rubik’s Cube – I can actually solve it). I’m also using the most expensive alarm I ever had which supposedly should help me wake up more naturally during winter months but doesn’t.

Last but not least, pull-up bar (BODYROX Premium Pull up/Chin up Bar) which I occasionally use during breaks. I consider it to be part of my home office as I wouldn’t buy it if I didn’t have to work from home.

No Personal things on Work Laptop

As mentioned above, I’m not logged in with my personal account on my work laptop – this means I cannot open personal gmail, facebook, instagram, twitter, favorite news site, whatever. When I actually need to do something personal I have to specifically log-in into my personal laptop, which means pressing KVM button. This small physical constraint and a clear separation not only helps to be fully compliant with whatever policies there are on the use of work laptop, but also helps to be totally focused on work. I find myself almost not switching to my personal laptop at all during working day (I take breaks, though). Additionally I blocked myself out of all of those distracting social media apps on my phone for working hours and limited their use to 15min/day/app with digital wellbing tools on my phone. I’m super happy about this (thank you, Google). In fact, this also helps me close item #23 on my new year’s resolution.

Your Feedback and Advice

I would be enormously happy to receive any advice from you on what I might be missing in my home office setup and what you would recommend. I understand our situations might be different but we are all in this together and if you have something to share please do so!

Running a Book Club

October 4, 2020 Opinion, RandomThoughts No comments

This is not a “how to” on running a book club. In fact I know next to nothing about book clubs and to run my first meet I googled what it takes to run such a thing. All of my previous stereotypes of book clubs were of bunch of super-boring people sitting in a circle and silently sipping tea (not coffee). After reading few articles I realized that I still know nothing about book clubs as apparently they could be run in myriad of different ways.

If you follow my blog you would notice that book reviews are one of very common blog posts here (55 of them), probably as common as technical blog posts and posts on success for software engineers. So no wonder I’m interested in reading and discussing books. One other aspect I’ve always been keen on is knowledge sharing. I used to share my knowledge via tech talks based on books and discussions called “design sessions”. It is not that I didn’t want to discuss books directly, but issue was that my audience in majority of cases didn’t read the book and still was interested in a given topic.

Last week I found myself in a situation when probably half of my team has already read or is reading one particular book. With a hint from my colleague, I kicked-off a book club. First meeting turned out to be a really great, fast paced discussion with a lots of engagement. I wasn’t the most active participant and this is awesome. Took notes and spoke only few times.

We went through highlights people remembered from the first chapter and then tried to analyze those, thought of practical applications, shared our related past experiences, and just had a good discussion. I would even call this to be some kind of a team building event – all virtual (in case you are reading this in future and COVID-19 is a thing of the past).

Once in 2012 I tried to organize “Code & Beer” and miserably failed with two people showing up. Arguably I just didn’t make it very clear I bought the beer for everyone :) This time people joined with no snacks but with tons of interest. So, how to run a book club? Who knows… Maybe make sure you have a “critical mass” of people interested in same book.

Book Review: Clean Architecture

September 20, 2020 Book Reviews No comments

Overall thoughts on the book

Although the book “Clean Architecture” is written by famous voice in software engineering, Robert C. Martin, and indeed has a lot of great advice it certainly did not meet my expectations. In my opinion the book is very outdated, is very focused on old ways of building software, namely monolithic and 3-layer applications for commercial enterprises. Yes there are touches on embedded software as well as on SOA and micro-services, but they seem to have been added as an afterthought. At the very least I didn’t get the feeling author had any first-hand experience with resent trends.

There were few instances were I had to stop and think for myself that I completely disagree with the author. For instance, in “The Decoupling Fallacy” chapter the author argues that services are anyway coupled because they use same data passed around and modifying interface of data requires changes in many places. Well it depends on how you design your services and example given in book looked very artificial – not something that you would see in real world implementations. Luckily it is also the point that we can redesign services to reduce this kind of coupling.