July 8, 2012 IoLanguage, Languages 6 comments

Io programming language

I expect that most of you have never heard of a programming language called Io. Programmers would very likely associate Io with “input-output”, but the Io language has nothing special to do with it and has no cool input/output features.

Io is an extremely tiny language that runs on a super-small virtual machine, one that even your microwave oven could handle. That said, I don’t mean that Io is not powerful.

Io is a prototype-based object-oriented language with dynamic typing. For me, as a C# guy, it was not easy to “feel” the language. I understood its syntax and mechanisms very quickly, and it won’t take you longer than 20 minutes to start writing simple code either. I understood what I was trying out, but I didn’t feel comfortable realizing that anything in this language can be changed on the fly, and that such a change affects every object cloned from the changed one. Note that there is no difference between a class and an object; or, better said, there are no classes in Io. If you define a method on some object, it is inherited by its clones. It is worth mentioning that in Io every operation is a message. A “Hello world” application looks like this:

"Hello world" println

It should be read as: “send the message println to the string object”.

Here is a bit more code (the sheep example, cloned from this post):

Sheep := Object clone
Sheep legCount := 4
MutantSheep := Sheep clone
MutantSheep legCount = 7
dolly := MutantSheep clone
MutantSheep growMoreLegs := method(n, legCount = legCount + n)
dolly growMoreLegs(2)
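To make the slots-and-prototypes idea more concrete, here is a minimal Python sketch (Python only because it is compact; this is not how Io is implemented). It assumes a toy model in which every object is a dictionary of slots plus a link to its prototype. `IoObject`, `set_slot`, `update_slot` and `send` are hypothetical names that loosely model Io’s `clone`, `:=`, `=` and message sending, and the usage replays the sheep example.

```python
class IoObject:
    """Toy model of an Io object: a dict of slots plus a link to its prototype."""

    def __init__(self, proto=None):
        self.proto = proto
        self.slots = {}

    def clone(self):
        # `clone` creates an empty object whose prototype is the receiver.
        return IoObject(proto=self)

    def lookup(self, name):
        # Walk up the prototype chain until some object has the slot.
        obj = self
        while obj is not None:
            if name in obj.slots:
                return obj.slots[name]
            obj = obj.proto
        raise AttributeError(f"slot {name!r} not found")

    def set_slot(self, name, value):
        # Models `:=`: create or overwrite the slot on the receiver itself.
        self.slots[name] = value

    def update_slot(self, name, value):
        # Models `=`: the slot must already exist somewhere on the chain,
        # but the new value is stored on the receiver.
        self.lookup(name)
        self.slots[name] = value

    def send(self, name, *args):
        # "Sending a message": find the slot; call it with self if it is a method.
        value = self.lookup(name)
        return value(self, *args) if callable(value) else value


def grow_more_legs(self, n):
    # Same idea as Io's method(n, legCount = legCount + n)
    self.update_slot("legCount", self.send("legCount") + n)


root = IoObject()                      # plays the role of Io's Object
sheep = root.clone()
sheep.set_slot("legCount", 4)
mutant_sheep = sheep.clone()
mutant_sheep.update_slot("legCount", 7)
dolly = mutant_sheep.clone()
mutant_sheep.set_slot("growMoreLegs", grow_more_legs)  # defined after dolly was cloned
dolly.send("growMoreLegs", 2)

print(sheep.send("legCount"), mutant_sheep.send("legCount"), dolly.send("legCount"))  # 4 7 9
```

Note that `growMoreLegs` is added to MutantSheep after dolly was cloned, yet dolly still finds it through the prototype chain, and the updated legCount is stored on dolly itself.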

The coolest thing about Io, at least in my opinion, is its concurrency model.

Io uses coroutines, actors, yielding and futures. If you don’t know what these are about, here is a bit of explanation. Coroutines in Io are lightweight user-level threads that are switched cooperatively rather than preemptively by the operating system, which allows quick context switching and more flexibility in general. For simplicity, think of coroutines as threads. Any object can be sent a message asynchronously by adding the @ symbol before the message name. The message is then placed in the object’s message queue, and the object gets its own coroutine (thread) for processing those messages. Objects holding a coroutine are called actors; effectively, any object in Io can become an actor. When you call a method asynchronously, the result can be saved into a so-called “future”, whose value is resolved before it is used. You can switch between actors using “yield”. To demonstrate this, I prepared a small piece of code that prints the numbers 0, 1, 2, 3 one by one:

oddPrinter := Object clone
evenPrinter := Object clone
oddPrinter print := method(1 println; yield; 3 println; )
evenPrinter print := method(0 println; yield; 2 println; yield)
oddPrinter @print; evenPrinter @print;
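The yield-based interleaving can be imitated with Python generators. This is only a sketch of cooperative round-robin scheduling, not Io’s actual scheduler; `run_round_robin`, `even_printer` and `odd_printer` are hypothetical names, and the sketch assumes the even printer gets to run first, which is what produces 0, 1, 2, 3.

```python
from collections import deque


def even_printer(out):
    out.append(0)
    yield                      # hand control to the next task, like Io's `yield`
    out.append(2)
    yield


def odd_printer(out):
    out.append(1)
    yield
    out.append(3)


def run_round_robin(tasks):
    """Resume each generator in turn until every one of them has finished."""
    queue = deque(tasks)
    while queue:
        task = queue.popleft()
        try:
            next(task)         # run the task until its next `yield`
        except StopIteration:
            continue           # finished: drop it from the queue
        queue.append(task)     # not finished: send it to the back of the queue


output = []
# Assumption: the even printer is scheduled first.
run_round_robin([even_printer(output), odd_printer(output)])
print(output)  # [0, 1, 2, 3]
```

Each task runs only until its next `yield`, so nothing is preempted: the interleaving is entirely decided by where the tasks choose to give up control.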

If you found this interesting, I recommend reading the Io Guide. There you will find many other interesting features of the Io language that I didn’t mention.

Here are some links:

- Io language site: http://www.iolanguage.com/
- A very nice introduction to Io: blame it on Io
- Official Io Guide.

You may be wondering why I’m learning and writing about an old, small language that doesn’t even have a proper ecosystem.

I’m trying to learn more programming concepts and play with different languages. Recently I was even accused of writing “enterprise-y code” and advised to look at how code looks in other programming language communities. Well… of course it is a valid and good suggestion, but it’s not as if I had never thought about it. In my year-plan post I mentioned that I would like to learn one more programming language, and as a start I picked up an interesting book called “7 Languages in 7 Weeks”. So far I have read and tried Ruby, Io and Prolog. Scala, Erlang, Clojure and Haskell are next in line. After I’m done with the book I will pick one language (not necessarily from the list) and extend my knowledge of it. Of course, there will be a review of the book.

Application Fabric Cache – an easy, but solid start

I would like to share some experiences of working with Microsoft AppFabric Cache for Windows Server.

AppFabric Cache is a distributed cache solution from Microsoft. It has a very simple API, and it will take you 10-20 minutes to start playing with it. Having worked with it for about one month, I would say that the product itself is very good, but probably not mature enough. There are a couple of common problems I would like to share. But first, let’s get started!

If the distributed cache concept is new to you, don’t be scared: it is quite simple. For example, say you have some data in your database and a web service on top of it that is accessed frequently. Under high load you decide to run many instances of your web project to scale out. But at some point the database becomes your bottleneck, so as a solution you add a caching mechanism on the back end of those services. You want the same cached objects to be available to each of the web instances, and you might want to copy them to different servers for low latency and high availability. That’s it: you have come up with a distributed cache solution. Instead of writing your own, you can leverage one of the existing ones.
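The read path described above is usually called the cache-aside pattern: read from the cache, and on a miss load from the database and store the result with a time-to-live. Below is a minimal single-process Python sketch; `TtlCache`, `get_blog_post` and `load_from_db` are hypothetical names, and a real distributed cache would keep the entries on cache servers shared by all web instances instead of in a local dictionary.

```python
import time


class TtlCache:
    """Tiny in-process stand-in for a cache: key -> (value, expiry time)."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._items = {}

    def get(self, key):
        entry = self._items.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._items[key]          # expired entries behave like misses
            return None
        return value

    def put(self, key, value, ttl_seconds):
        self._items[key] = (value, self._clock() + ttl_seconds)


def get_blog_post(post_id, cache, load_from_db, ttl_seconds=60):
    """Cache-aside read: try the cache first, fall back to the database."""
    cached = cache.get(post_id)
    if cached is not None:
        return cached
    post = load_from_db(post_id)          # the expensive call we want to avoid
    cache.put(post_id, post, ttl_seconds)
    return post


# Usage with a fake clock so the example is deterministic.
now = [0.0]
cache = TtlCache(clock=lambda: now[0])
db_calls = []


def load_from_db(post_id):
    db_calls.append(post_id)              # record every database hit
    return {"id": post_id, "title": f"Post {post_id}"}


first = get_blog_post(42, cache, load_from_db)    # miss: goes to the database
second = get_blog_post(42, cache, load_from_db)   # hit: served from the cache
now[0] = 61.0                                     # let the entry expire
third = get_blog_post(42, cache, load_from_db)    # miss again
print(db_calls)  # [42, 42]
```

The time-to-live is the trade-off knob: longer values take more load off the database, shorter values keep cached data closer to what is actually stored.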

Install

You can easily download AppFabric Cache from here, or install it with the Web Platform Installer.

The installation process is straightforward. If you are installing it just to try it out, I wouldn’t even go for the SQL Server provider; rather, use the XML provider and choose some local shared folder for it. (As far as I understand, the provider is the persistent storage for the cache cluster configuration.)

After installation you should get an additional PowerShell console called “Caching Administration Windows PowerShell”.

There you can start your cache with the “Start-CacheCluster” command.

Alternatively, you can install the AppFabric Caching Admin Tool from CodePlex, which lets you easily do a lot of things through a UI. It shows the PowerShell output, so you can learn the commands from there as well.

Usually you would want to create a named cache. I created one called NamedCacheForBlog.

Simple Application

Let’s now create a simple application. You will need to add a couple of references: Microsoft.ApplicationServer.Caching.Client and Microsoft.ApplicationServer.Caching.Core.

Then add some configuration to your app.config/web.config:

<!-- Declared inside <configSections> -->
<section name="dataCacheClient"
         type="Microsoft.ApplicationServer.Caching.DataCacheClientSection, Microsoft.ApplicationServer.Caching.Core"
         allowLocation="true"
         allowDefinition="Everywhere"/>

<!-- and then somewhere in configuration... -->
<dataCacheClient requestTimeout="5000" channelOpenTimeout="10000" maxConnectionsToServer="20">
  <localCache isEnabled="true" sync="TimeoutBased" ttlValue="300" objectCount="10000"/>
  <hosts>
    <!--Local app fabric cache-->
    <host name="localhost" cachePort="22233"/>
    <!-- In real world it could be something like this:
    <host name="service1" cachePort="22233"/>
    <host name="service2" cachePort="22233"/>
    <host name="service3" cachePort="22233"/>
    -->
  </hosts>
  <transportProperties connectionBufferSize="131072"
                       maxBufferPoolSize="268435456"
                       maxBufferSize="134217728"
                       maxOutputDelay="2"
                       channelInitializationTimeout="60000"
                       receiveTimeout="600000"/>
</dataCacheClient>

Note that the configuration above is not the minimal one, but rather a more realistic and sensible one. If you are about to use AppFabric Cache in production, I definitely recommend reading this MSDN page carefully.

Now you need to get a DataCache object and use it. A minimalistic, but wrong, way of doing it would be:

public DataCache GetDataCacheMinimalistic()
{
    var factory = new DataCacheFactory();
    return factory.GetCache("NamedCacheForBlog");
}

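The code above reads the configuration from the config file and returns a DataCache object. The problem is that it creates a new DataCacheFactory on every call, and creating one is expensive.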

Using DataCache is extremely easy:

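// Placeholder values for illustration
var dataCache = GetDataCacheMinimalistic();
string blogPostId = "blog-post-1";
object blogPostGoesToCache = "Original post content";

// Add fails if an item with this key is already in the cache
dataCache.Add(blogPostId, blogPostGoesToCache);

var blogPostFromCache = dataCache.Get(blogPostId);

// Put creates or overwrites the item
object updatedBlogPost = "Updated post content";
dataCache.Put(blogPostId, updatedBlogPost);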
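As I understand the DataCache contract, Add fails when the key is already in the cache, Put creates or overwrites the item, and Get returns null on a miss. The toy Python class below models only that contract; `InMemoryCache` and `KeyAlreadyExistsError` are hypothetical stand-ins, not the AppFabric client API.

```python
class KeyAlreadyExistsError(Exception):
    """Raised when add() is called for a key that is already cached."""


class InMemoryCache:
    """Models only the Add/Get/Put contract, not the AppFabric client."""

    def __init__(self):
        self._items = {}

    def add(self, key, value):
        # Add never overwrites: fail if the key is already present.
        if key in self._items:
            raise KeyAlreadyExistsError(key)
        self._items[key] = value

    def get(self, key):
        # A miss returns None (DataCache.Get returns null).
        return self._items.get(key)

    def put(self, key, value):
        # Put is an upsert: create or overwrite.
        self._items[key] = value


cache = InMemoryCache()
cache.add("blog-post-1", "draft")
try:
    cache.add("blog-post-1", "second draft")
    second_add_failed = False
except KeyAlreadyExistsError:
    second_add_failed = True
cache.put("blog-post-1", "published")
print(second_add_failed, cache.get("blog-post-1"), cache.get("missing"))  # True published None
```

In practice this means Add suits “store only if nobody else has stored it yet”, while Put suits updates.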

DataCache Wrapper/Utility

In the real world you would probably write a wrapper over DataCache or create some utility class. There are a couple of reasons for this. First of all, creating a DataCacheFactory instance is very expensive, so it is better to keep one. Another obvious reason is having much more flexibility over what you can do in case of failures, and in general. This is very important: it turns out that AppFabric Cache is not extremely stable and can be easily impacted. One workaround is to write some “retry” mechanism, so that if your wrapping method fails, you retry (immediately or after X ms).

Here is how I would write the initialization code:

private DataCacheFactory _dataCacheFactory;
private DataCache _dataCache;

private DataCache DataCache
{
    get
    {
        if (_dataCache == null)
        {
            InitDataCache();
        }
        return _dataCache;
    }
    set
    {
        _dataCache = value;
    }
}

private bool InitDataCache()
{
    try
    {
        // We try to avoid creating many DataCacheFactory-ies
        if (_dataCacheFactory == null)
        {
            // Disable tracing to avoid informational/verbose messages
            DataCacheClientLogManager.ChangeLogLevel(TraceLevel.Off);
            // Use configuration from the application configuration file
            _dataCacheFactory = new DataCacheFactory();
        }
        DataCache = _dataCacheFactory.GetCache("NamedCacheForBlog");
        return true;
    }
    catch (DataCacheException)
    {
        _dataCache = null;
        throw;
    }
}

The DataCache property is not exposed; instead, it is used in wrapping methods:

public void Put(string key, object value, TimeSpan ttl)
{
    try
    {
        DataCache.Put(key, value, ttl);
    }
    catch (DataCacheException ex)
    {
        ReTryDataCacheOperation(() => DataCache.Put(key, value, ttl), ex);
    }
}

ReTryDataCacheOperation performs the retry logic I mentioned before:

private object ReTryDataCacheOperation(Func<object> dataCacheOperation, DataCacheException prevException)
{
    try
    {
        // We retry, as it may happen
        // that AppFabric cache is temporarily unavailable.
        // See: http://msdn.microsoft.com/en-us/library/ff637716.aspx
        // Maybe add more checks, like: prevException.ErrorCode == DataCacheErrorCode.RetryLater
        // Resetting the field ensures that a new client is created on the next DataCache access
        _dataCache = null;
        Thread.Sleep(100);
        var result = dataCacheOperation.Invoke();
        // We can add some logging here, noting that the retry succeeded
        return result;
    }
    catch (DataCacheException)
    {
        _dataCache = null;
        throw;
    }
}
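The same wrapper idea, reduced to its core, might look like this in Python: the expensive factory is kept once, the client is created lazily, and after a failure the client is dropped, the call waits briefly and is retried once. `CacheWrapper`, `CacheUnavailableError`, `FakeClient` and `make_client` are hypothetical names used only to simulate a cache that fails once; this is a sketch of the pattern, not AppFabric code.

```python
import time


class CacheUnavailableError(Exception):
    """Hypothetical stand-in for a transient DataCacheException."""


class CacheWrapper:
    def __init__(self, create_client, retry_delay_seconds=0.1, sleep=time.sleep):
        self._create_client = create_client   # kept once, like the DataCacheFactory
        self._client = None                    # created lazily, dropped after failures
        self._retry_delay = retry_delay_seconds
        self._sleep = sleep

    @property
    def client(self):
        if self._client is None:
            self._client = self._create_client()
        return self._client

    def put(self, key, value):
        try:
            return self.client.put(key, value)
        except CacheUnavailableError:
            return self._retry_once(lambda: self.client.put(key, value))

    def _retry_once(self, operation):
        # Forget the client so that the next access creates a fresh one.
        self._client = None
        self._sleep(self._retry_delay)
        try:
            return operation()
        except CacheUnavailableError:
            self._client = None
            raise


# --- Simulation: a client whose first put fails ---
failures_left = [1]
created = []


class FakeClient:
    def __init__(self):
        self.items = {}

    def put(self, key, value):
        if failures_left[0] > 0:
            failures_left[0] -= 1
            raise CacheUnavailableError("temporarily unavailable")
        self.items[key] = value
        return "ok"


def make_client():
    client = FakeClient()
    created.append(client)
    return client


wrapper = CacheWrapper(make_client, sleep=lambda seconds: None)  # no real waiting here
first_result = wrapper.put("post-1", "content")
print(first_result, len(created))  # ok 2
```

Like the C# version, this sketch is not thread-safe; if many threads share one wrapper, the lazy initialization would need a lock.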

You can go further and improve the retry logic to allow multiple retries with different intervals between them, and then put all of that into configuration.
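One way to generalize the retry is to read the list of wait intervals from configuration and retry only errors classified as transient (for AppFabric, that would be RetryLater). The Python sketch below assumes a hypothetical `CacheError` with an `error_code` attribute and a hypothetical configuration dictionary with a `retry_delays_ms` list; the number of retries is simply the length of that list.

```python
import time


class CacheError(Exception):
    """Hypothetical cache error carrying an error code such as "RetryLater"."""

    def __init__(self, error_code):
        super().__init__(error_code)
        self.error_code = error_code


def retry(operation, delays, is_retryable, sleep=time.sleep):
    """Run `operation`; after each retryable failure wait the next delay and try again.

    There are len(delays) retries, so at most len(delays) + 1 attempts.
    """
    for delay in delays:
        try:
            return operation()
        except Exception as error:
            if not is_retryable(error):
                raise
            sleep(delay)
    return operation()  # last attempt: any error now reaches the caller


def is_retry_later(error):
    return isinstance(error, CacheError) and error.error_code == "RetryLater"


# Hypothetical configuration, e.g. loaded from app settings.
config = {"retry_delays_ms": [100, 250, 500]}
delays = [ms / 1000 for ms in config["retry_delays_ms"]]

attempts = []


def flaky_put():
    attempts.append("put")
    if len(attempts) < 3:
        raise CacheError("RetryLater")    # fails twice, then succeeds
    return "stored"


slept = []
result = retry(flaky_put, delays, is_retry_later, sleep=slept.append)
print(result, len(attempts), slept)  # stored 3 [0.1, 0.25]
```

Non-transient errors are re-raised immediately, so a real bug does not get hidden behind a series of pointless retries.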

RetryLater

So why the hell is all this retry logic needed?

Well, when you open the MSDN page for AppFabric Common Exceptions, you will see that RetryLater is the most common one. To know exactly what happened, you need to check the ErrorCode and, for RetryLater, its SubStatus.

So far I’ve seen these sub-errors of RetryLater:

There was a contention on the store. – This one is quite frequent. It can happen when some client is doing something concurrent and messy with the cache. The problem is that any client can affect the whole cluster.

The connection was terminated, possibly due to server or network problems or serialized Object size is greater than MaxBufferSize on server. Result of the request is unknown. – This usually has nothing to do with object size: even if your configuration is correct and you store small objects, you can still get this error. A retry mechanism works well for this one.

One or more specified cache servers are unavailable, which could be caused by busy network or servers. – I have no idea how frequent this one is, but it can happen.

No specific SubStatus. – Amazing one!

Conclusion

AppFabric Cache is a very nice distributed cache solution from Microsoft. It has a lot of features, which of course are not described here; you can read about them elsewhere, say here. But to be able to go live with it, you should be ready for AppFabric Cache not being extremely stable and reliable, so you had better put some retry mechanisms in place.

To be honest, if it were my decision whether to use this distributed cache, I would go for another one. But who knows, maybe the others are not much better… I’ve never tried other distributed caches.


Thank you, and I hope this is of some help.

OData service with WCF and data in memory

May 20, 2012 C#, OData, Web 3 comments

In the previous blog post I briefly touched on the OData protocol by showing quick usage of an OData feed, and then implemented a very simple WCF data service using Entity Framework.

Now let’s imagine that we have some data in memory and would like to expose it. Is that hard or easy?

Querying in memory data

If you can represent your data as IQueryable, then in most cases you are fine. There is an extension method on IEnumerable called AsQueryable, so as long as your data can be accessed as IEnumerable, you can make it IQueryable.

Sample data kept in memory

Now, let’s create some sample data:

private IList<Sport> _sports = new List<Sport>();
private IList<League> _leagues = new List<League>();

private void Populate()
{
    _sports = new List<Sport>();
    _leagues = new List<League>();

    _sports.Add(new Sport() { Id = 1, Name = "Swimming" });
    _sports.Add(new Sport() { Id = 2, Name = "Skiing" });

    var sport = new Sport() { Id = 3, Name = "Football" };
    var league = new League() { Id = 1, Name = "EURO2012", Region = "Poland&Ukraine" };
    sport.AddLeague(league);
    var league1 = new League() { Id = 2, Name = "UK Premier League", Region = "UK" };
    sport.AddLeague(league1);
    _sports.Add(sport);

    var league2 = new League() { Id = 3, Name = "Austria Premier League", Region = "Austria" };
    _leagues.Add(league);
    _leagues.Add(league1);
    _leagues.Add(league2);

    _sports.Add(new Sport() { Id = 4, Name = "Tennis" });
    _sports.Add(new Sport() { Id = 5, Name = "Volleyball" });
}

Absolutely nothing smart or difficult there, but it gives you an idea of the dataset we have.

A few things to make it happen

To expose sports and leagues, we need to add public properties to our data service implementation, like below:

public IQueryable<Sport> Sports
{
    get { return _sports.AsQueryable(); }
}

public IQueryable<League> Leagues
{
    get { return _leagues.AsQueryable(); }
}

Another important thing about exposing data is that you have to indicate the key of your entities with the DataServiceKey attribute.
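The key matters because OData addresses a single entity by its key, for example Leagues(2) under the service root. The Python sketch below only illustrates that lookup over the sample leagues; it assumes integer keys and uses a hypothetical `resolve` helper, and it is not how WCF Data Services resolves requests internally.

```python
import re
from dataclasses import dataclass


@dataclass
class League:
    id: int
    name: str
    region: str


LEAGUES = [
    League(1, "EURO2012", "Poland&Ukraine"),
    League(2, "UK Premier League", "UK"),
    League(3, "Austria Premier League", "Austria"),
]

# Matches a single-entity path segment such as "Leagues(2)" (integer keys only).
KEY_SEGMENT = re.compile(r"^(?P<entity_set>\w+)\((?P<key>\d+)\)$")


def resolve(segment, entity_sets):
    """Return the entity addressed by EntitySet(key), or None if the key is unknown."""
    match = KEY_SEGMENT.match(segment)
    if match is None:
        raise ValueError(f"not a key segment: {segment!r}")
    key = int(match.group("key"))
    entities = entity_sets[match.group("entity_set")]
    return next((entity for entity in entities if entity.id == key), None)


print(resolve("Leagues(2)", {"Leagues": LEAGUES}).name)  # UK Premier League
```

Without a declared key the service has no property to match the value in parentheses against, which is why every exposed entity type needs one.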

To make our service a bit more realistic, I’m going to add caching as well.

Complete source code of the service

public class SportsWcfDataService : DataService<SportsData>
{
    public static void InitializeService(DataServiceConfiguration config)
    {
        config.SetEntitySetAccessRule("*", EntitySetRights.AllRead);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
        // To know if there are issues with your data model
        config.UseVerboseErrors = true;
    }

    protected override void HandleException(HandleExceptionArgs args)
    {
        // Put breakpoint here to see possible problems while accessing data
        base.HandleException(args);
    }

    protected override SportsData CreateDataSource()
    {
        return SportsData.Instance;
    }

    protected override void OnStartProcessingRequest(ProcessRequestArgs args)
    {
        base.OnStartProcessingRequest(args);
        var cache = HttpContext.Current.Response.Cache;
        cache.SetCacheability(HttpCacheability.ServerAndPrivate);
        cache.SetExpires(HttpContext.Current.Timestamp.AddSeconds(120));
        cache.VaryByHeaders["Accept"] = true;
        cache.VaryByHeaders["Accept-Charset"] = true;
        cache.VaryByHeaders["Accept-Encoding"] = true;
        cache.VaryByParams["*"] = true;
    }
}

public class SportsData
{
    static readonly SportsData _instance = new SportsData();

    public static SportsData Instance
    {
        get { return _instance; }
    }

    private SportsData()
    {
        Populate();
    }

    private void Populate()
    {
        // Population of data
        // Above in this post
    }

    private IList<Sport> _sports = new List<Sport>();
    public IQueryable<Sport> Sports
    {
        get { return _sports.AsQueryable(); }
    }

    private IList<League> _leagues = new List<League>();
    public IQueryable<League> Leagues
    {
        get { return _leagues.AsQueryable(); }
    }
}

[DataServiceKey("Id")]
public class Sport
{
    public int Id { get; set; }
    public string Name { get; set; }

    public void AddLeague(League league)
    {
        _leagues.Add(league);
    }

    private IList<League> _leagues = new List<League>();
    public IEnumerable<League> Leagues
    {
        get { return _leagues; }
    }
}

[DataServiceKey("Id")]
public class League
{
    public int Id { get; set; }
    public string Name { get; set; }
    public string Region { get; set; }
}

Some results of our work

With the URL http://localhost:49936/SportsService.svc/ the service document can be seen:

<service xml:base="http://localhost:49936/SportsService.svc/">
  <workspace>
    <atom:title>Default</atom:title>
    <collection href="Sports"><atom:title>Sports</atom:title></collection>
    <collection href="Leagues"><atom:title>Leagues</atom:title></collection>
  </workspace>
</service>

Now you can access the data via URL, or by writing C# LINQ queries from a client app or LinqPad. The following request:

http://localhost:49936/SportsService.svc/Leagues()?$filter=Name eq 'EURO2012'&$select=Region

Would produce a result containing only the Region of the EURO2012 league, “Poland&Ukraine”.
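Conceptually, over the in-memory sample data that request boils down to a filter followed by a projection, which is what the LINQ-to-Objects query behind AsQueryable ends up doing. A minimal Python sketch with a hypothetical `query` helper; it supports only an equality filter, a deliberate simplification since real $filter supports many more operators.

```python
LEAGUES = [
    {"Id": 1, "Name": "EURO2012", "Region": "Poland&Ukraine"},
    {"Id": 2, "Name": "UK Premier League", "Region": "UK"},
    {"Id": 3, "Name": "Austria Premier League", "Region": "Austria"},
]


def query(entities, filter_eq=None, select=None):
    """Apply `$filter=<property> eq <value>` and then `$select=<properties>`."""
    rows = entities
    if filter_eq is not None:
        prop, value = filter_eq
        rows = [entity for entity in rows if entity[prop] == value]       # like Where
    if select is not None:
        rows = [{name: entity[name] for name in select} for entity in rows]  # like Select
    return rows


result = query(LEAGUES, filter_eq=("Name", "EURO2012"), select=["Region"])
print(result)  # [{'Region': 'Poland&Ukraine'}]
```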

What if you need to edit data?

If you need your data to be updatable, your SportsData class needs to implement the System.Data.Services.IUpdatable interface.

What if your data source is not just a few collections in memory?

What if you have a very special data source, or you cannot simply keep your data in memory as collections? This could be tricky or very tricky, depending on your data source, of course. Either way, if your data source does not provide an IQueryable implementation, you would need to implement the IQueryable interface by hand.

Here is a step-by-step walkthrough on MSDN. Just from the size of the article you can imagine that it is not a trivial task. But if you manage it, you can be proud, because you will have implemented your own LINQ provider. (Do you remember NHibernate introducing LINQ support? It was a big feature for the product, and roughly, what they did was implement IQueryable with their NhQueryable.)

Why am I learning OData?

First of all, I have to investigate whether my team can use it. (Depending on the outcome, you might see more posts on this topic from me.) Another reason is that it is a very exciting topic that I knew so little about.

It is disappointing to admit, but I only really start to understand things when I work with them for a real reason; reading a dozen blog posts on a topic is useless unless I do something meaningful related to the matters in those posts.

So, my dear reader, if you are reading this post and have read my previous one, but have never tried OData, I would be really pleased if you invested 10-30 minutes or more of your time to play with OData.

ODATA

May 20, 2012 C#, OData, WCF, Web 3 comments

Let’s talk about OData.

At first glance

I propose to start our OData journey with the best thing about it: the openness of data and the ease of accessing and working with it. Client applications no longer need to depend on specific service methods or formats if an OData feed is available. Consuming OData is simple and enjoyable.

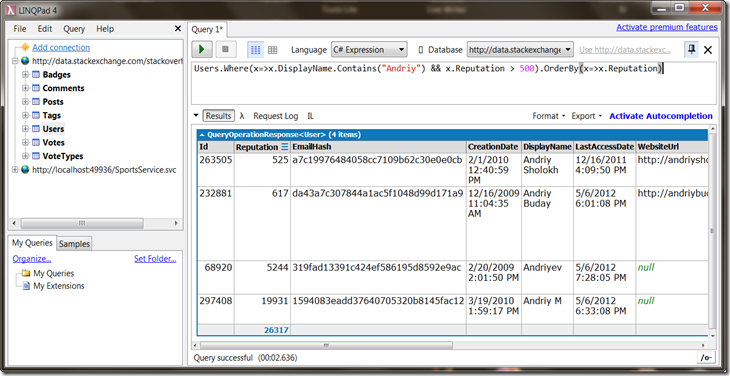

For example, I want to know how many users named ‘Andriy’ with a reputation higher than 500 there are on StackOverflow. No one at StackOverflow would develop a special API method that lets me request exactly this data.

But since StackOverflow exposes an OData feed, we can connect to it with LinqPad (get it here) and simply write a normal C# LINQ query, like this one:

You can see the same data by using the URL below, which LinqPad built to request the data:

(View page source if you don’t like how your browser rendered that feed).

So, no magic. You just build a special URL and get the data you are interested in, in your preferred format. A wide set of libraries exists, both for clients and servers, to implement and consume OData.
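Building such a URL by hand mostly means percent-encoding the system query options. The Python sketch below does that with `urllib.parse.quote`; the service root `http://example.org/odata`, the `Users` entity set and the `DisplayName`/`Reputation` properties are hypothetical placeholders, not StackOverflow’s actual feed. It also uses the OData `$count` path segment for “how many” questions.

```python
from urllib.parse import quote


def odata_url(service_root, entity_set, filter_expr=None, select=None, count_only=False):
    """Build an OData query URL, percent-encoding the system query options."""
    path = service_root.rstrip("/") + "/" + entity_set
    if count_only:
        path += "/$count"   # ask only for the number of matching entities
    options = []
    if filter_expr is not None:
        # Spaces become %20; single quotes around string literals are kept.
        options.append("$filter=" + quote(filter_expr, safe="'"))
    if select is not None:
        options.append("$select=" + quote(",".join(select), safe=","))
    return path + ("?" + "&".join(options) if options else "")


# The request against the in-memory sports service:
sports_url = odata_url("http://localhost:49936/SportsService.svc/", "Leagues",
                       filter_expr="Name eq 'EURO2012'", select=["Region"])

# A StackOverflow-like question against a hypothetical feed:
count_url = odata_url("http://example.org/odata", "Users",
                      filter_expr="DisplayName eq 'Andriy' and Reputation gt 500",
                      count_only=True)
print(sports_url)
print(count_url)
```

Encoding matters mostly for values: a literal such as “Poland&Ukraine” inside $filter would otherwise be split at the ampersand into a separate query option.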

Whenever you see the OData icon, it is a good indication that an OData feed is available. Many applications and web sites already use this protocol. Do you use NuGet? It works through OData. Know eBay? They expose their catalog via OData. Need more examples? Go to the ecosystem page of OData.


So what is OData?

The Open Data Protocol (OData) is a Web protocol for querying and updating data that provides a way to unlock your data and free it from silos that exist in applications today. OData does this by applying and building upon Web technologies such as HTTP, Atom Publishing Protocol (AtomPub) and JSON to provide access to information from a variety of applications, services, and stores. The protocol emerged from experiences implementing AtomPub clients and servers in a variety of products over the past several years. OData is being used to expose and access information from a variety of sources including, but not limited to, relational databases, file systems, content management systems and traditional Web sites.

…from http://www.odata.org/

Continue reading about OData on its documentation page here.

If you have time, I recommend watching this “OData: There’s Feed for That” MIX10 video.

Let’s build our first OData service

As OData was initially introduced by Microsoft, it is no wonder that it is extremely easy to put in place when you are on the Microsoft technology stack. If you are using EF, there is almost nothing you have to do to make it happen.

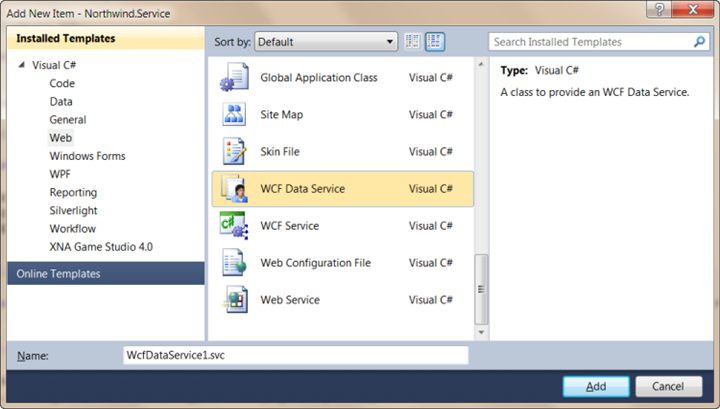

Add “WCF Data Service” to your project.

You will get the following code:

public class WcfDataService1 : DataService< /* TODO: put your data source class name here */ >
{
    // This method is called only once to initialize service-wide policies.
    public static void InitializeService(DataServiceConfiguration config)
    {
        // TODO: set rules to indicate which entity sets and service operations are visible, updatable, etc.
        // Examples:
        // config.SetEntitySetAccessRule("MyEntityset", EntitySetRights.AllRead);
        // config.SetServiceOperationAccessRule("MyServiceOperation", ServiceOperationRights.All);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
    }
}

Assuming that your data model is generated from the Northwind DB, all you need is something like this:

public class WcfDataService1 : DataService<NorthwindContext>
{
    public static void InitializeService(DataServiceConfiguration config)
    {
        config.SetEntitySetAccessRule("*", EntitySetRights.All);
        config.SetServiceOperationAccessRule("*", ServiceOperationRights.All);
        config.DataServiceBehavior.MaxProtocolVersion = DataServiceProtocolVersion.V2;
    }

    protected override NorthwindContext CreateDataSource()
    {
        return new NorthwindContext(
            ConfigurationManager.ConnectionStrings["NorthwindContext.EF.MsSql"].ConnectionString);
    }
}

And now clients can do whatever they like with your data. Of course, you can restrict them as you wish: OData is not about putting your database on the web, and you control what you expose and to what extent. You can also tune your service by adding caching, intercepting queries, changing behaviors and much, much more.

In the next post I will show how to build an OData service for custom data kept in memory.

In the meantime, you can check out another video from NDC 2011 by Vagif Abilov, called “Practical OData with and without Entity Framework”. Follow this link to the mp4 video file directly.

Book Review: “The Mythical Man-Month: Essays on Software Engineering”

May 7, 2012 Book Reviews 2 comments

For good reasons, many people recommend the book “The Mythical Man-Month: Essays on Software Engineering” when it comes to managing software projects: it is one of the classic books on the subject. Recently it was recommended to me as well. After reading it, I realized that I had gained no new ideas or anything I did not know before. That is not because the book is not good enough, but rather because it is very old, and many other publications I’ve read have given me lots of up-to-date information. Not to mention that many of those publications were influenced by exactly this book.


The same was true for another person flying on the same plane as Brooks, the author of the book:

The plane droned through the night toward LaGuardia. Clouds and darkness veiled all interesting sights. The document I was studying was pedestrian. I was not, however, bored. The stranger sitting next to me was reading The Mythical Man-Month, and I was waiting to see if by word or sign he would react. Finally as we taxied toward the gate, I could wait no longer:

“How is that book? Do you recommend it?”

“Hmph! Nothing in it I didn’t know already.” I decided not to introduce myself.

On the other hand, I got some insight into what software development looked like back in the 1970s.

Yes, I spelled it right. The book has survived many years, mainly “because building things, including software, has always been as much about people as it has been about materials or technology – and people don’t change much in only 25 [35] years.”

I think the strongest generalization from the book is this one:

“Assigning more programmers to a project running behind schedule may make it even later.”

Slightly off topic: the book mentions Conway’s Law, which can be phrased like this:

“Organizations which design systems are constrained to produce systems which are copies of the communication structures of these organizations.”

The other day I came across a nice article called “Seeing the team in the code”. In my view, a lack of open and frequent technical communication between developers, such as code reviews, can make the source code not quite what the team wants it to be. On the other hand, it could help make the code less coupled, if everything is done by contract. Conway’s law sounds like a joke, but every joke has some truth behind it. There is even a Microsoft Research paper on this: http://research.microsoft.com/pubs/70535/tr-2008-11.pdf. (Not easy to read or to follow.)

I would recommend this book in two cases: if you have little or no clue how development and management of big software projects is done, OR if you just want to make sure you are familiar with this work, to have a more coherent view of the topic. If you are an average developer who frequently reads recent books or posts on management/leadership/etc. in software, it is very likely that you will not enjoy this book. I did not enjoy it very much either; I probably fall into case number 2.

Thanks for reading…

Існує die Frage of Language. Или нет?

April 23, 2012 Languages, Opinion 6 comments

The title of this post is completely bizarre(*). It consists of words from the 4 languages I have to deal with now.

Before I moved to Austria, I mostly used Ukrainian. Of course, many meetings at work were in English, and all email was in English. There was also some interaction in Russian, but not much; at least there was no real need to speak it. Now everything has shifted. I knew I would have to deal with English every day, and I also knew I would need some basic German. What I did not know is that there would be many guys from Ukraine and Russia at work, and that I would use Ukrainian and also Russian for small talk in the kitchen, at lunch or in one-to-one discussions.

I continue to speak Ukrainian at home with my wife. We try to use English/German phrases. My wife is not good at English, but her German is at an intermediate level, so we try to exchange some knowledge of languages. But you know what? Unless someone or something kicks you in the ass, you won’t take learning a language seriously.

So I paid 290 euros to have someone kick me every day for 2 hours for a whole month. Normally this is called a language course. After one week I can introduce myself and give brief information about myself, I can count and ask basic questions, and I already know some colors, weekdays, months, restaurant words, etc.

It is worth mentioning that you really need some pressure to start learning German in Austria, because the people around you speak English very well, and if you are lazy you can simply avoid conversations in German. Plus, everything here can be done via the internet or through automatic machines, so there is not much human interaction during the day.

I’m worried about the foreigners coming to Ukraine for EURO 2012. On average, people in Ukraine don’t speak English. It is a pity and a shame for me.

Now back to the German language course. As I mentioned, I’m attending intensive evening courses for total beginners. I allocate myself 1 hour before class to do my homework, so in total it is 3 hours of German per day. My group is rather small, only 4 people: me, a girl from Ukraine, a lady from Kazakhstan and a girl from Iran. When explanations are needed, they are given in German, and if not understood, in Russian or English (only the girl from Iran doesn’t understand Russian). Another very interesting thing is that, since the school focuses on Russian/German, the teacher is not very good at English. So I often help explain things to the girl from Iran, who is proficient in English. For me it is great: I hear explanations twice, in English and in Russian.

You might be surprised that many words sound similar to English, and some are similar to Russian and Ukrainian words (or probably the other way around). Germany and Austria are geographically located between Great Britain and Russia/Ukraine, so this can be understood without reading dozens of wiki pages on language families, branches and their roots. Again, good for me.

Nevertheless, I have this question: “Is German an important language anyway?” According to Wikipedia, there are about 100 million German speakers in the world, which puts it in 12th place by number of speakers, but apparently it is number one in Europe, where I live now. It is a highly developed language, and also a language of technology (after English, of course). All this sounds great, and everyone would answer that German is an important language for Europe, especially if you already know English. So would I. In the short term it makes sense to learn German. But over the centuries the world will shift dramatically to English, if not to Chinese.

All this makes me think about the importance of languages, their meaning for me and their value for the world. Imagine there were no other languages but one, no matter which: how much easier would the world be? Most importantly, how much further would we have developed? Would we have already started to colonize Mars? Or would it have the opposite effect? According to Darwin there must be some variation, otherwise no evolution could progress. All these are very philosophical questions, suitable for a beer evening, or… for a Friday snaps evening.

To conclude, I’m very proud to realize that after I learn German I will be able to understand almost 1 billion people in the world (precisely, 902 million as per Wikipedia).

I have some questions for you:

- What languages do you know?
- Are you learning any?
- Do you think English is the number one language and there is no sense in learning and developing other languages?
- Would you learn German if you were me?
- Do you think it is possible for non-native speakers of English to rise high in the IT/software industry?

Thank you!

P.S. I hope this was a good read. If not, please let me know: I’m willing to improve my blogging skills to write higher-quality posts. All for you.

(*) In English it would be “There is a question of Language. Or not?”.

100% Code Coverage – real and good!

April 18, 2012 Opinion, UnitTesting No comments

I’m not going to write a long post discussing the advantages and disadvantages of high code coverage. There are hundreds if not thousands of such posts out there, and in the end almost all of them conclude that high code coverage is nice in general but not always justifiable, one of the main reasons being redundant abstractions introduced just to raise coverage. Here are my recent thoughts.

Achieve 100% Code Coverage by all means

It may sound crazy and not doable at all (*), or it may have side effects if misused. Here are some very simple techniques for achieving high code coverage the right way:

Don’t be lazy. On a recent project I already had 95% coverage. Had I not decided to increase coverage further, I wouldn’t have found a missing mapping for one property. I spent maybe a couple of hours writing more tests, instead of days of developers’, testers’ and managers’ time working around the bug. And had the bug been found in production, it would have cost the company real money.
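As a sketch of the kind of test that catches such a bug, here is a small reflection-based check. Everything in it is hypothetical and invented for illustration: the Customer/CustomerDto types, a CustomerMapper with a deliberately missing Email assignment, and a MappingCheck helper. The idea is to give every source property a distinct, non-default value and report any same-named target property that did not receive it; a real project might instead use its mapping library's own configuration validation, if it offers one.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical domain and DTO types, invented for this example.
public class Customer
{
    public int Id { get; set; }
    public string Name { get; set; }
    public string Email { get; set; }
}

public class CustomerDto
{
    public int Id { get; set; }
    public string Name { get; set; }
    public string Email { get; set; }
}

public static class CustomerMapper
{
    // Deliberately buggy: Email is never copied.
    public static CustomerDto ToDto(Customer customer) =>
        new CustomerDto { Id = customer.Id, Name = customer.Name };
}

public static class MappingCheck
{
    // Returns the names of target properties whose value differs from the
    // same-named source property after mapping.
    public static List<string> UnmappedProperties<TSource, TTarget>(TSource source, TTarget target)
    {
        var unmapped = new List<string>();
        foreach (PropertyInfo targetProperty in typeof(TTarget).GetProperties())
        {
            PropertyInfo sourceProperty = typeof(TSource).GetProperty(targetProperty.Name);
            if (sourceProperty == null)
                continue; // no counterpart on the source type, nothing to compare

            object expected = sourceProperty.GetValue(source);
            object actual = targetProperty.GetValue(target);
            if (!Equals(expected, actual))
                unmapped.Add(targetProperty.Name);
        }
        return unmapped;
    }
}

public static class Program
{
    public static void Main()
    {
        // Every property gets a distinct, non-default value so a forgotten copy is visible.
        var customer = new Customer { Id = 42, Name = "Ann", Email = "ann@example.com" };
        List<string> unmapped = MappingCheck.UnmappedProperties(customer, CustomerMapper.ToDto(customer));
        Console.WriteLine(unmapped.Count == 0 ? "All mapped" : "Unmapped: " + string.Join(", ", unmapped));
        // prints "Unmapped: Email"
    }
}
```

The check only compares properties that exist on both types by name, so it complements, rather than replaces, tests of any custom mapping logic.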

Work around external dependencies you really cannot test. Isolate them as much as you can and simply exclude them from the coverage report. I don’t think this is cheating: it is the best you can do, and you do it explicitly. And, of course, you should have integration tests that cover those external dependencies.
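A minimal sketch of that isolation, assuming the untestable dependency is the system clock. Business logic talks to a hypothetical IClock interface, and the one-line SystemClock wrapper is marked with [ExcludeFromCodeCoverage] from System.Diagnostics.CodeAnalysis, which Visual Studio's code coverage honors. IClock, SystemClock, FixedClock and TrialPolicy are made-up names for illustration.

```csharp
using System;
using System.Diagnostics.CodeAnalysis;

// Hypothetical abstraction over an external dependency (the system clock).
public interface IClock
{
    DateTime UtcNow { get; }
}

// Thin wrapper around the real dependency: no logic worth unit testing,
// so it is explicitly excluded from coverage. Integration tests cover it instead.
[ExcludeFromCodeCoverage]
public sealed class SystemClock : IClock
{
    public DateTime UtcNow => DateTime.UtcNow;
}

// Business logic depends only on the abstraction, so it stays fully unit-testable.
public sealed class TrialPolicy
{
    private readonly IClock _clock;

    public TrialPolicy(IClock clock) { _clock = clock; }

    public bool IsExpired(DateTime trialStartUtc) =>
        _clock.UtcNow - trialStartUtc > TimeSpan.FromDays(30);
}

// Test double returning a fixed time.
public sealed class FixedClock : IClock
{
    public FixedClock(DateTime utcNow) { UtcNow = utcNow; }
    public DateTime UtcNow { get; }
}

public static class Program
{
    public static void Main()
    {
        var start = new DateTime(2012, 1, 1, 0, 0, 0, DateTimeKind.Utc);

        // Unit-test style usage with the fake clock: 31 days later the trial is over.
        var policy = new TrialPolicy(new FixedClock(start.AddDays(31)));
        Console.WriteLine(policy.IsExpired(start)); // True

        // Production wiring uses the excluded wrapper.
        var production = new TrialPolicy(new SystemClock());
        Console.WriteLine(production.IsExpired(DateTime.UtcNow)); // False
    }
}
```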

Remember the Single Responsibility Principle. Well… and a few more things. You will be amazed how much simpler and easier to read your code becomes if you just keep following SOLID. I think a developer should be able to clearly describe the responsibility of a single class in one sentence.

Start with testing in mind, not with a coverage number. It is vital to remember that tests are intended to ensure your code works as designed and without defects; tests are NOT intended to produce high coverage numbers to show the boss. So always cover the more important and sensitive code first, and only then move on to less important or easy-to-test code.

Refactor! Never write code you don’t like. It is fine to hate it the next day, but not at the moment you are writing it. Crap code usually starts to appear when you add functionality that was not planned. You must refactor constantly (the same applies to your unit tests). Keep everything in sync.

Be a 100% good programmer. Don’t settle for 80% coverage, let alone 60%. If someone said you were a 75% good programmer, would you like it? Well, it is a high number, isn’t it? A few months ago I was a worse developer than I am today, and a year ago I would have disagreed with my present self. High coverage, if used right, means that you know your code works and that it is readable, refactorable, decoupled, structured… and, most of all, highly maintainable.

I hope my opinion sounds sensible!

Till next time…

Further reading:

- I strongly recommend reading this paper: “How to Misuse Code Coverage”.
- There are research papers on this matter. In “Experiments of the effectiveness of dataflow and control flow-based test adequacy criteria”, the authors “evaluate all-edges and alluses coverage criteria using an experiment with 130 fault seeded versions of seven programs and observed that test sets achieving coverage levels over 90% usually showed significantly better fault detection than randomly chosen test sets of the same size. In addition, significant improvements in the effectiveness of coverage-based tests usually occurred as coverage increased from 90% to 100%.” (quoted from a Microsoft Research paper)

(*) I could agree with many of the exceptional situations you are thinking of. I would agree that with legacy systems it is difficult to do what I am asking, and also that it is hard to stand your ground against deadlines. There are bad programmers around, bad decisions get made, and there are many other constraints. In the end, it is your job to do the job right. And if you cannot, change the company, or change companies.

WCF Session, Service Instance and Threads Allocation

April 16, 2012 WCF 2 comments

I would like to quickly show how threads are used when running an instance of a WCF service. Don’t bother reading if you are a WCF guru!

A quick, deep dive into WCF

When using InstanceContextMode.PerSession, once a client makes its first call, an instance of the service implementation is kept on the server. Every client has its own session, which executes on only one thread at a time (the thread is taken from the ThreadPool). So one client cannot run concurrent calls within one session, but you can change ConcurrencyMode to make this possible. With PerCall, the service instance is disposed of as soon as the call is done.
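To let one client run concurrent calls within its session, the ServiceBehavior can set ConcurrencyMode.Multiple (the default, ConcurrencyMode.Single, serializes calls to an instance). The instance state is then shared between threads and has to be synchronized. Below is a minimal sketch of a hypothetical accumulating service, ConcurrentCalculatorService, that protects its state with Interlocked. It assumes .NET Framework with a reference to System.ServiceModel, and its Main calls the class directly rather than through a WCF host, so it demonstrates only the thread safety of the state, not WCF dispatching itself.

```csharp
using System;
using System.ServiceModel;
using System.Threading;
using System.Threading.Tasks;

[ServiceContract]
public interface ICalculatorService
{
    [OperationContract]
    int Accumulate(int valueToAdd);
}

// PerSession: one instance per client session.
// ConcurrencyMode.Multiple: WCF may dispatch concurrent calls of that session
// to the same instance, so shared state must be thread-safe.
[ServiceBehavior(InstanceContextMode = InstanceContextMode.PerSession,
                 ConcurrencyMode = ConcurrencyMode.Multiple)]
public class ConcurrentCalculatorService : ICalculatorService
{
    private int _accumulated;

    // Interlocked.Add performs the read-add-write atomically and returns the new total.
    public int Accumulate(int valueToAdd) => Interlocked.Add(ref _accumulated, valueToAdd);
}

public static class Program
{
    public static void Main()
    {
        // Direct calls from many threads (no WCF host involved).
        var service = new ConcurrentCalculatorService();
        Parallel.For(0, 1000, _ => service.Accumulate(2));
        Console.WriteLine(service.Accumulate(0)); // 2000
    }
}
```

With a plain `AccumulatedValue += valueToAdd` instead of Interlocked, concurrent calls could lose updates once ConcurrencyMode.Multiple is switched on.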

The ThreadPool uses an available thread to run your service code. But if you start 1000 concurrent clients, the ThreadPool will allocate many threads, and each of them consumes resources such as memory. Keep this in mind when designing scalable applications. PerCall is the best choice for highly scalable services.
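The effect of blocking work on the ThreadPool can be observed without WCF at all. The sketch below queues a number of simulated blocking requests (Thread.Sleep stands in for work done inside a service call) and counts how many distinct pool threads ran them. CountPoolThreads and its parameters are illustrative names; the resulting number depends on the machine's core count and on the pool's thread-injection heuristics, so no fixed output is shown.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

public static class Program
{
    // Queues `requests` work items that each block for `blockMilliseconds`
    // and returns how many distinct ThreadPool threads ran them.
    public static int CountPoolThreads(int requests, int blockMilliseconds)
    {
        var threadIds = new ConcurrentDictionary<int, bool>();
        using (var done = new CountdownEvent(requests))
        {
            for (int i = 0; i < requests; i++)
            {
                ThreadPool.QueueUserWorkItem(_ =>
                {
                    threadIds.TryAdd(Thread.CurrentThread.ManagedThreadId, true);
                    Thread.Sleep(blockMilliseconds); // simulated blocking work
                    done.Signal();
                });
            }
            done.Wait(); // wait until every work item has signalled
        }
        return threadIds.Count;
    }

    public static void Main()
    {
        Console.WriteLine("Distinct pool threads used: " + CountPoolThreads(200, 50));
    }
}
```

Raising the number of requests or the blocking time typically makes the pool use more threads, which is the resource cost the paragraph above warns about.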

Calculator example, one we all like

I created a simple Calculator service to show how threads are used by a WCF service.

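```csharp
using System;
using System.ServiceModel;
using System.Threading;

// Service contract (not shown in the original snippet; minimal assumed shape).
[ServiceContract]
public interface ICalculatorService
{
    [OperationContract]
    int Accumulate(int valueToAdd);
}

[ServiceBehavior(InstanceContextMode = InstanceContextMode.PerSession)]
public class CalculatorService : ICalculatorService
{
    // Captured once, on the thread that creates the instance for a new session.
    private readonly int _threadIdOnCreating = Thread.CurrentThread.ManagedThreadId;

    public int AccumulatedValue { get; private set; }

    public int Accumulate(int valueToAdd)
    {
        AccumulatedValue += valueToAdd;
        // Log the creating thread next to the thread running this call.
        Console.WriteLine(string.Format(
            "Accumulated: {0}. ThreadIdOnServiceCreating:{1} CurrentThreadId:{2}",
            AccumulatedValue, _threadIdOnCreating, Thread.CurrentThread.ManagedThreadId));
        return AccumulatedValue;
    }
}
```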

The calculator is very simple: it accumulates a value within a session and logs some interesting threading information, namely the id of the thread that created the service instance and the id of the current thread inside the method.

Output

I called the Accumulate method with parameter 2 five times, then created a new client proxy and did the same. The output below shows that the server keeps the instance of the service implementation for the whole session (the creating thread id stays the same), but the method runs on different threads taken from the ThreadPool (the current thread id changes between calls).

I'm calculator

Accumulated: 2. ThreadIdOnServiceCreating:6 CurrentThreadId:6

Accumulated: 4. ThreadIdOnServiceCreating:6 CurrentThreadId:7

Accumulated: 6. ThreadIdOnServiceCreating:6 CurrentThreadId:6

Accumulated: 8. ThreadIdOnServiceCreating:6 CurrentThreadId:7

Accumulated: 10. ThreadIdOnServiceCreating:6 CurrentThreadId:6

Accumulated: 2. ThreadIdOnServiceCreating:9 CurrentThreadId:9

Accumulated: 4. ThreadIdOnServiceCreating:9 CurrentThreadId:6

Accumulated: 6. ThreadIdOnServiceCreating:9 CurrentThreadId:9

Accumulated: 8. ThreadIdOnServiceCreating:9 CurrentThreadId:6

Accumulated: 10. ThreadIdOnServiceCreating:9 CurrentThreadId:8

Hope this was interesting. If you would like to, continue reading about WCF instance and session management on MSDN, or read another short blog post by me.

BTW, this is just a repost of one of my answers on Stack Overflow, but I thought it was worth having here.

Book Review: “First Things First” and surrounding thoughts

April 15, 2012 Book Reviews, Success No comments

I like encouraging books. I like being inspired by ideas that make us think we can do something to improve our lives. Yet, although we all know that changing something takes extremely hard work, we usually give up after a few attempts and never want to admit it. This is how we are built: we want to be better with less effort.

The book “First Things First” is very good because, unlike many other time-management books, it brings to the table bigger, more global questions about our path and purpose. Start with this: “How many people on their deathbed wish they’d spent more time at the office?” Of course, people work to make a living, but working hard cannot be a life goal in itself. The goal could be to contribute to society and to your profession. I’m a software developer, and my goal could be to contribute to the community of other developers like me. Instead, I catch myself just being a hard worker. I stay at work longer than others. In the end, this only turns me into a Dark Matter Developer. I may get noticed at work and earn more, so in a few years I buy myself a BMW Z4. But what’s the point? No happiness at home, no recognition in the world of software developers, and a small but fast car with no kids to put in it. It is what you do, not what you own.

I was never lucky enough to truly embrace many time-management techniques. Maybe they are too difficult and take too much time to get rolling. Or maybe they are just wrong? They teach us to be effective and efficient, but usually we only achieve short-term goals. Are you sure you are heading toward “true north”?

From this book I will take one simple and great technique and try it, and I recommend that you try it too. Think of one thing in your personal life and one in your professional life that would definitely improve the quality of your life if you did them consistently. Ok? Just do them! Don’t concentrate on anything else… work on these two.

I have two for myself. Personal: exercise every morning. Professional: frequently write quality blog posts. Although neither of them obviously shows how it improves my life, believe it or not, I see a great breakthrough if I just do them.

I almost forgot, this was supposed to be a book review:

And it is! The thoughts a book provokes are a great indicator of its quality.

The book is worth reading!

You can find my thoughts on other books I have read under the Book Reviews tag; many of them are also about time management, self-management and success.

Autofac: resolve dependencies later in code & pass your parameters

March 22, 2012 .NET, Autofac, C#, IoC 3 comments

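```csharp
using Autofac;

// F, E, D1, D2, C, B, A, Service, their interfaces and the name constants
// (SomeD1Name, SomeD2Name, CName) are defined elsewhere in the project.
var builder = new ContainerBuilder();

builder
    .Register(b => new F())
    .As<IF>();

builder
    .Register(b => new E())
    .As<IE>();

builder
    .Register(b => new D1())
    .Named<ID>(SomeD1Name);

builder
    .Register(b => new D2())
    .Named<ID>(SomeD2Name);

// C takes its IF from the parameters supplied at resolve time.
builder
    .Register((b, prms) => new C(b.Resolve<IE>(), prms.TypedAs<IF>()))
    .Named<IC>(CName);

// B forwards the parameters it received to the named C registration.
builder
    .Register((b, prms) => new B(b.Resolve<IF>(), b.ResolveNamed<IC>(CName, prms)))
    .As<IB>();

builder
    .Register(b => new A(b.Resolve<IE>(),
                         b.ResolveNamed<ID>(SomeD1Name),
                         b.ResolveNamed<ID>(SomeD2Name)))
    .As<IA>();

// Service keeps the component context so it can resolve IB later, passing the
// IF that C needs. Resolving IB here, without parameters, would fail because
// prms.TypedAs<IF>() in C's registration would find no matching parameter.
builder
    .Register(b => new Service(b.Resolve<IComponentContext>(),
                               b.Resolve<IA>()))
    .As<IService>();
```

And that’s it.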
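The registrations above imply that whoever resolves IB has to supply the IF that C expects. Here is a minimal end-to-end sketch of that flow, assuming the Autofac NuGet package and using hypothetical, trimmed-down versions of F, C, B and Service (the UsedF/UsedC properties, the CreateB method and the "c" registration name are invented for illustration). Service holds an IComponentContext and resolves IB later with TypedParameter.From(f); B forwards the parameters to the named C registration; C picks the value up with TypedAs<IF>().

```csharp
using System;
using Autofac;

// Hypothetical minimal types standing in for the post's IF, IC, IB and Service.
public interface IF { string Name { get; } }
public class F : IF
{
    public F(string name) { Name = name; }
    public string Name { get; }
}

public interface IC { IF UsedF { get; } }
public class C : IC
{
    public C(IF f) { UsedF = f; }
    public IF UsedF { get; }
}

public interface IB { IC UsedC { get; } }
public class B : IB
{
    public B(IC c) { UsedC = c; }
    public IC UsedC { get; }
}

public class Service
{
    private readonly IComponentContext _context;

    public Service(IComponentContext context) { _context = context; }

    // Resolves IB only when needed, passing the IF down as a TypedParameter.
    public IB CreateB(IF f) => _context.Resolve<IB>(TypedParameter.From(f));
}

public static class Program
{
    private const string CName = "c"; // hypothetical registration name

    public static IContainer BuildContainer()
    {
        var builder = new ContainerBuilder();
        // C reads its IF from the parameters supplied at resolve time.
        builder.Register((ctx, prms) => new C(prms.TypedAs<IF>())).Named<IC>(CName);
        // B forwards whatever parameters it received to the named C registration.
        builder.Register((ctx, prms) => new B(ctx.ResolveNamed<IC>(CName, prms))).As<IB>();
        // Resolving IComponentContext gives Service a context it may keep and use later.
        builder.Register(ctx => new Service(ctx.Resolve<IComponentContext>())).AsSelf();
        return builder.Build();
    }

    public static void Main()
    {
        using (var container = BuildContainer())
        {
            IB b = container.Resolve<Service>().CreateB(new F("custom"));
            Console.WriteLine(b.UsedC.UsedF.Name); // prints "custom"
        }
    }
}
```

Resolving IB directly without the parameter (`container.Resolve<IB>()`) throws during resolution, because TypedAs<IF>() finds no matching TypedParameter; that is why Service defers the resolution until it has an IF to pass.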